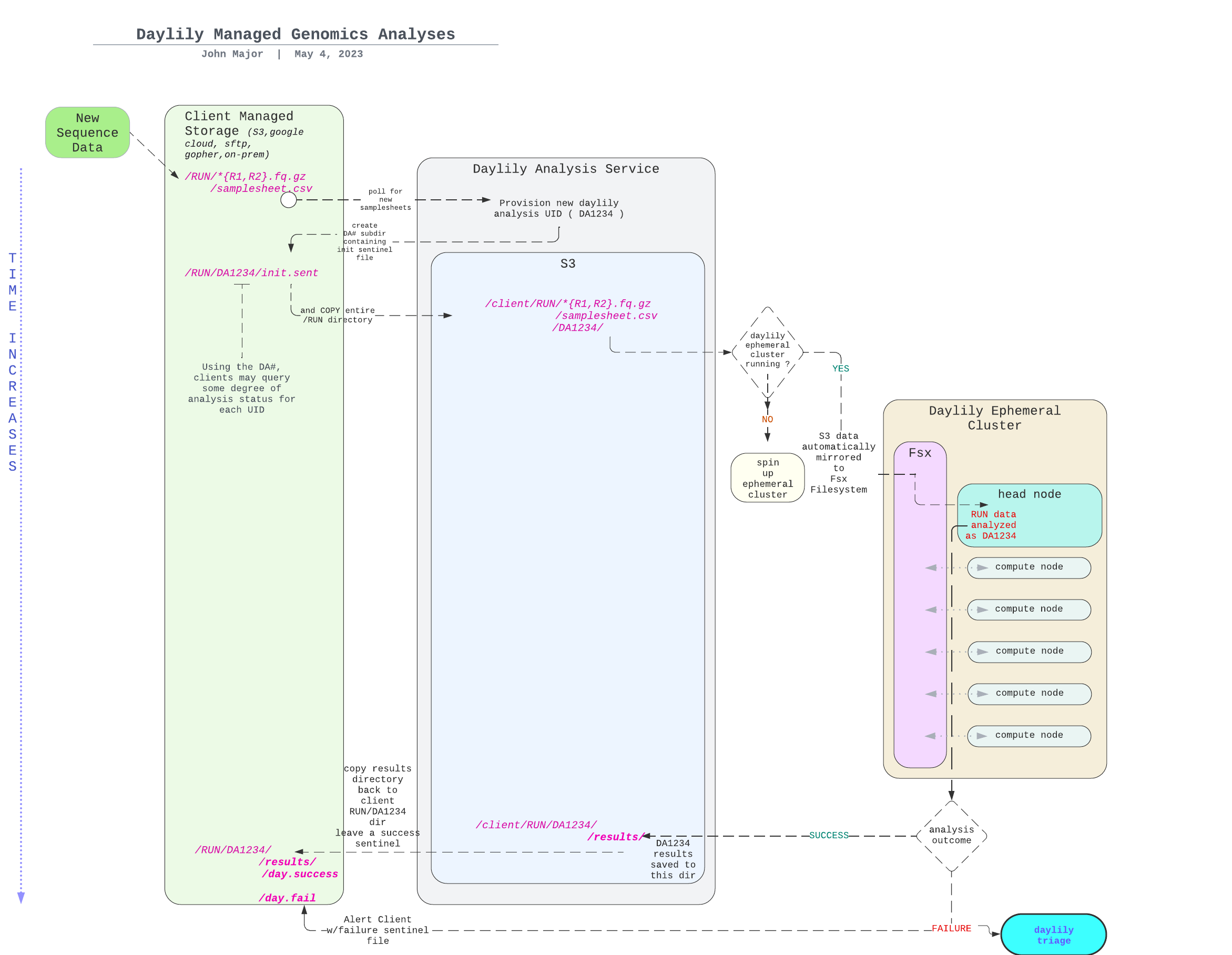

Infrastructure-as-code for spinning up ephemeral (transient) AWS ParallelCluster clusters that are tuned for bioinformatics and multi-omics analysis, but can run any Slurm-based or Linux-derived workflows. The project assembles the networking, storage, authentication, and head-node tooling required to launch, monitor, and tear down self-scaling Slurm clusters with predictable performance and cost transparency. Workflows themselves live in separate repositories, may be written in any framework that supports Slurm (Snakemake, Nextflow, Cromwell, etc.), and can be plugged in on demand and executed on your ephemeral resources.

bin/daylily-create-ephemeral-cluster --profile $AWS_PROFILE --region-az <region-az> --config daylily_cluster_config.yaml

- Rapid, reproducible cluster bring-up built on AWS ParallelCluster with optional PCUI for browser-based terminal and multi-cluster management.

- Cost-aware infrastructure with scripts that inspect spot-market capacity, calculate per-sample spend, and tag workloads for downstream budget reporting. Using the daylily-omics-analysis WGS analysis workflows, it can run FASTQ -> aligned, deduplicated CRAM -> SNV + SV VCFs in ~1 hr for as little as $5/genome.

- Shared FSx for Lustre file system that mirrors to S3 so hundreds or thousands of spot instances can safely collaborate on the same dataset while persisting results. Final results may then be automagically mirrored back to S3.

- Pluggable analysis catalog governed by YAML, enabling one-click cloning of approved pipelines or custom repositories for development work.

- Remote data staging & pipeline launch helpers that transform sample manifests, materialize canned control datasets, and kick off workflows from your workstation into tmux sessions on the head node.

- Curated & standardized reference data for hg38 and b37, extensible to arbitrary genome reference datasets via daylily-omics-references.

- Bundled concordance data via daylily-omics-references, available to quickly and easily benchmark various pipelines. Datasets include ILMN, PacBio, ONT & Ultima.

- Region-scoped references bucket tooling to hydrate FSx with curated reference bundles and optional licensed assets.

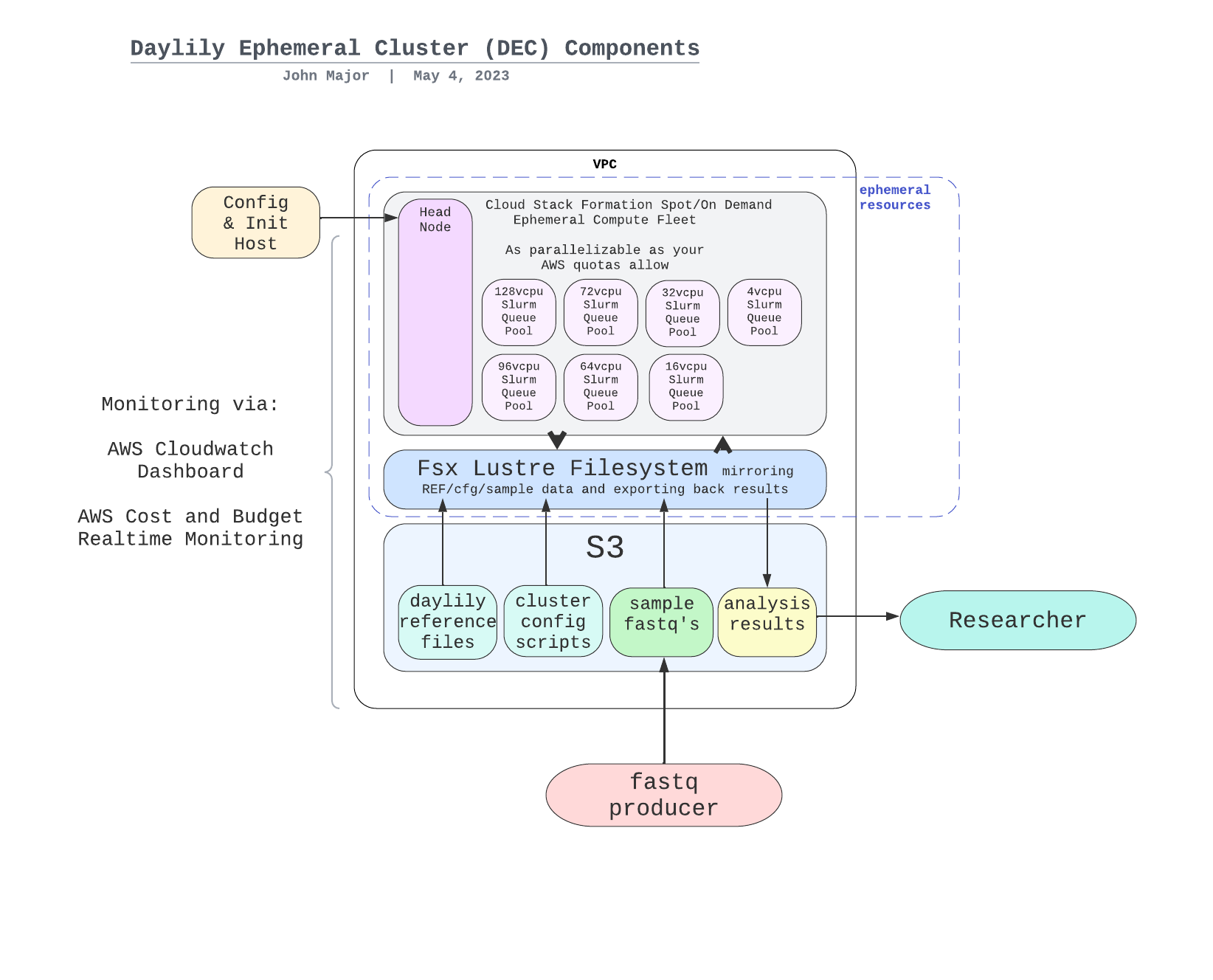

Daylily composes several AWS building blocks so you can stand up a full featured HPC environment quickly:

- AWS ParallelCluster (v3) is the core of the compute fabric, orchestrating spot and on-demand instances into Slurm partitions.

- FSx for Lustre mounted at /fsx with an S3 mirror so reference data, staged inputs, and pipeline outputs survive node turnover.

- Automatic Load Scaling EC2 Instances with a mix of spot and on-demand instances, tuned for high-throughput genomics workloads.

- VPC, subnets, and security groups sized for high-throughput genomics compute.

- Daylily CLI installed on the head node to provide helpers for data staging, pipeline cloning, and remote execution.

- S3 references bucket with reference genomes, resource bundles, and canned control datasets for benchmarking and concordance.

- AWS CloudFormation templates to orchestrate the above components and manage lifecycle.

- AWS CloudWatch alarms to notify on spot instance interruptions and FSx storage capacity, dashboards to monitor CPU, memory, and network utilization.

- ParallelCluster UI (PCUI) deployment to provide a web console for job management and interactive shells.

- Project level cost tagging & telemetry layered on top of AWS Budgets and cost allocation tags to preserve per-sample and per-project visibility. (experimental: budget enforcement via spot instance termination).

The Daylily head node ships with a registry of vetted repositories defined in config/daylily_available_repositories.yaml. Each entry specifies a friendly name, description, Git endpoints, default revision, and checkout location, allowing operators to:

- Clone approved pipelines (e.g., whole-genome, RNA-seq) in a single command using day-clone or standard git from the head node.

- Override the registry to test forks, feature branches, or entirely new repositories during development.

- Mix and match pipelines per project without rebuilding the cluster.
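To make the shape of a registry entry concrete, here is a minimal Python sketch. It assumes nothing about the real YAML schema: the field names (`name`, `git_url`, `default_ref`, `checkout_dir`), the example URL, and the checkout path are all hypothetical placeholders chosen to mirror the five pieces of information listed above. It only shows how a default revision and an override for a feature branch could map onto a plain `git clone`.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RepoEntry:
    """One registry entry; every field name here is hypothetical."""
    name: str          # friendly name used to select the pipeline
    description: str   # short human-readable summary
    git_url: str       # Git endpoint to clone from
    default_ref: str   # default revision (tag or branch)
    checkout_dir: str  # where the clone should land


def clone_command(entry: RepoEntry, ref: Optional[str] = None) -> List[str]:
    """Build a plain `git clone` argv, optionally overriding the revision."""
    return [
        "git", "clone", "--branch", ref or entry.default_ref,
        entry.git_url, entry.checkout_dir,
    ]


# Placeholder values for illustration only.
example = RepoEntry(
    name="example-pipeline",
    description="placeholder entry for illustration",
    git_url="https://example.com/org/example-pipeline.git",
    default_ref="v0.0.0",
    checkout_dir="/tmp/example-pipeline",
)

print(" ".join(clone_command(example)))                           # default revision
print(" ".join(clone_command(example, ref="my-feature-branch")))  # override
```

On a real cluster, day-clone and the YAML registry remain the supported route; the sketch is only meant to show why overriding a single field is enough to test a fork or branch.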

Because the compute layer is generic Slurm, any orchestrator that speaks Slurm (Snakemake, Nextflow, Cromwell, custom Bash, etc.) can be scheduled once the repository is present. Daylily’s helper bin/daylily-run-ephemeral-cluster-remote-tests demonstrates how to bootstrap a workflow remotely: it clones the configured repository at the requested tag, enters the Daylily environment, and launches a Snakemake target inside a tmux session, making it easy to kick off pipelines from your laptop.

High-throughput analyses rely on predictable reference data access. Daylily provides automation to:

- Clone reference bundles into a region-scoped S3 bucket (<prefix>-daylily-omics-analysis-<region>) using ./bin/create_daylily_omics_analysis_s3.sh, optionally layering in licensed assets such as Sentieon.

- Mount the bucket automatically via FSx so all compute nodes see /fsx/data, /fsx/resources, and /fsx/analysis_results with low latency.

- Stage canned control datasets when generating pipeline manifests: bin/daylily-analysis-samples-to-manifest-new.py accepts concordance directories from S3/HTTP/local paths and copies them alongside samples, while tagging each run as positive or negative control for downstream QC.

Daylily’s workflow helpers bridge the gap between local manifests and remote execution:

- Use bin/daylily-stage-analysis-samples from your workstation to pick a cluster, upload an analysis_samples.tsv, and invoke the head-node staging utility. The script downloads data from S3/HTTP/local paths, merges multi-lane FASTQs, and writes canonical config/samples.tsv and config/units.tsv files to your chosen staging directory.

- The staging utility automatically validates AWS credentials, materializes concordance/control payloads, normalizes metadata, and reports where to copy the generated configs.

- When you are ready to launch a workflow, bin/daylily-run-ephemeral-cluster-remote-tests can log into the head node, clone the selected pipeline (as configured in the YAML registry), and start the run in a tmux session for detached execution.

These tools make it straightforward to stage data once, reuse it across pipelines, and keep critical control material co-located with sample inputs.

Daylily integrates with AWS Budgets and Cost Allocation Tags to provide per-sample and per-project cost visibility:

- AWS Budgets: Set up budgets for your projects and receive alerts when you approach your spending limits.

- Cost Allocation Tags: Use tags to categorize and track costs associated with specific samples, projects, or workflows.

- Budget Enforcement: Optionally enforce budgets by configuring spot instance termination when a budget threshold is reached, helping to prevent unexpected costs.

plugin process under development, please open an issue or PR to add your own repository

- Available repositories are controlled by the config/daylily_available_repositories.yaml file. You may add your own entries to this file, or override it with your own custom file when creating a cluster. To contribute a supported repository back to the mainline, please open a PR.

daylily-omics-analysis : comprehensive WGS analysis

sarek-nf-core port of sarek WGS pipeline daylily-sarek.

generic slurm via ubuntu

The AWS ParallelCluster created uses Slurm as the scheduler, so any Slurm-compatible workflow should work fine. See the AWS ParallelCluster docs for more info.

The AWS ParallelCluster port of Slurm has been slightly tweaked in the following ways (but is otherwise a standard Slurm install):

- to manage spinning spot instances up and down.

- to track and enforce cost budgets by using the --comment flag on the sbatch command.
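As a rough illustration of the second point, the sketch below builds an sbatch command line that carries a label in `--comment`. The `--comment` and `--partition` options are standard sbatch flags, but what string Daylily expects inside the comment is not specified here, so passing a bare project name is an assumption, and `sbatch_argv`, `align.sh`, and `my-project` are hypothetical names.

```python
import shlex
from typing import List, Optional


def sbatch_argv(script: str, project: str, partition: Optional[str] = None) -> List[str]:
    """Build an sbatch argv that tags the job through --comment.

    Assumption: the comment is a plain project label; the format the
    cluster's budget tooling actually expects may differ.
    """
    argv = ["sbatch", "--comment", project]
    if partition:
        argv += ["--partition", partition]
    argv.append(script)
    return argv


# Print the command rather than running it, so this works off-cluster too.
print(shlex.join(sbatch_argv("align.sh", "my-project")))
```

Workflow managers that submit through sbatch can usually be configured to pass extra submission flags, which is how a label like this would reach every job in a run.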

only useful if you have already installed daylily previously and have all of the AWS account configurations in place

as the admin user

From the IAM -> Users console, create a new user.

- Allow the user to access the AWS Management Console.

- Select I want to create an IAM user. Note: the instructions which follow will probably not work if you create a user with the Identity Center option.

- Specify or autogenerate a password, and note it down.

- Click Next.

- Skip (for now) attaching a group / copying permissions / attaching policies, and click Next.

- Review the confirmation page, and click Create user.

- On the next page, capture the Console sign-in URL, username, and password. You will need these to log in as the daylily-service user.

still as the admin user

- Navigate to the IAM -> Users console, click on the daylily-service user.

- Click on the Add permissions button, then select Add permission, Attach policies directly.

- Search for AmazonQDeveloperAccess, select and add.

- Search for AmazonEC2SpotFleetAutoscaleRole, select and add.

- Search for AmazonEC2SpotFleetTaggingRole, select and add.

If the AWSServiceRoleForEC2Spot service-linked role is missing, spot instances will fail to launch in ways that are very challenging to debug, even though the cluster builds and the headnode runs fine.

- Does it exist?

aws iam list-roles --query "Roles[?RoleName=='AWSServiceRoleForEC2Spot'].RoleName"

If the output is [], then it does not exist.

- Create it if not:

aws iam create-service-linked-role --aws-service-name spot.amazonaws.com

- Navigate to the IAM -> Users console, click on the daylily-service user.

- Click on the Add permissions button, then select Create inline policy.

- Click on the JSON bubble button.

- Delete the auto-populated JSON in the editor window, and paste this JSON into the editor (replace 3 instances of <AWS_ACCOUNT_ID> with your new account number, an integer found in the upper right dropdown).

- Click Next.

- Name the policy daylily-service-cluster-policy (not formally mandatory, but advised to bypass various warnings in future steps), then click Create policy.

There are a handful of quotas which will greatly limit (or block) your ability to create and run an ephemeral cluster. These quotas are set by AWS and you must request increases. The daylily-cfg=ephemeral-cluster script will check these quotas for you and warn if they appear to be too low, but you should be aware of them and request increases proactively; these requests have no cost.

dedicated instances per region quotas

- Running Dedicated r7i Hosts must be >= 1 (AWS default is 0).

- Running On-Demand Standard (A, C, D, H, I, M, R, T, Z) instances must be >= 9 (AWS default is 5) just to run the headnode, and will need to be increased further for ANY other dedicated instances you run now or plan to run.

spot instances per region quotas

- All Standard (A, C, D, H, I, M, R, T, Z) Spot Instance Requests must be >= 310, and preferably >= 2958 (AWS default is 5).

fsx lustre per region quotas

- should minimally allow creation of a FSX Lustre filesystem with >= 4.8 TB storage, which should be the default.

other quotas

May limit you as well, keep an eye on VPC & networking specifically.
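The minimums above lend themselves to a quick self-check before you request increases. The sketch below is an illustration only: the dictionary labels are hypothetical shorthand (not AWS quota codes), the thresholds are copied from the notes above, and you would supply the current values yourself from the Service Quotas console.

```python
from typing import Dict, Tuple

# Minimums copied from the quota notes; the keys are hypothetical labels,
# not AWS quota codes.
MINIMUMS = {
    "dedicated_r7i_hosts": 1,
    "on_demand_standard": 9,
    "spot_standard": 310,
}


def quota_shortfalls(current: Dict[str, int]) -> Dict[str, Tuple[int, int]]:
    """Return {label: (current, required)} for every quota below its minimum."""
    return {
        label: (current.get(label, 0), required)
        for label, required in MINIMUMS.items()
        if current.get(label, 0) < required
    }


# The AWS defaults quoted above all fall short of the minimums.
aws_defaults = {"dedicated_r7i_hosts": 0, "on_demand_standard": 5, "spot_standard": 5}
for label, (have, need) in quota_shortfalls(aws_defaults).items():
    print(f"{label}: have {have}, need >= {need}")
```

An empty result means the three listed quotas are at or above the stated minimums; it says nothing about the FSx, VPC, or networking quotas mentioned alongside them.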

The cost management built into daylily requires use of budgets and cost allocation tags. Someone with permissions to do so will need to activate these tags in the billing console. Note: if no clusters have been created yet, these tags may not exist to be activated until the first cluster is running. The tags can be activated at any time, and skipping this step for now will not block installation of daylily. See AWS cost allocation tags.

The tags to activate are:

aws-parallelcluster-jobid

aws-parallelcluster-username

aws-parallelcluster-project

aws-parallelcluster-clustername

aws-parallelcluster-enforce-budget

- The access necessary to view budgets is beyond the scope of this config; please work with your admin to set that up. If you are able to create clusters and whatnot, then the budgeting infrastructure should be working.

- Log in to the AWS console as the daylily-service user using the console URL captured above.

as the daylily-service user

- Click your username in the upper right, select Security credentials, scroll down to Access keys, and click Create access key (many services will be displaying that they are not available, this is ok).

- Choose 'Command Line Interface (CLI)', check I understand and click Next.

- Do not tag the key, click Next.

IMPORTANT: Download the .csv file, and store it in a safe place. You will not be able to download it again. This contains your aws_access_key_id and aws_secret_access_key, which you will need to configure the AWS CLI. You may also copy this info from the confirmation page.

You will use the aws_access_key_id and aws_secret_access_key to configure the AWS CLI on your local machine in a future step.

as the daylily-service user

Must include -omics- in the name!

key pairs are region specific, be sure you create a key pair in the region you intend to create an ephemeral cluster in

- Navigate to the EC2 dashboard; in the left-hand menu under Network & Security, click on Key Pairs.

- Click Create Key Pair.

- Give it a name, which must include the string -omics-analysis-<region>, e.g. username-omics-analysis-us-west-2.

- Choose a Key pair type of ed25519.

- Choose .pem as the file format.

- Click Create key pair.

- The .pem file will download. Move it into your ~/.ssh dir and give it appropriate permissions. You cannot download this file again, so be sure to store it in a safe place.

mkdir -p ~/.ssh

chmod 700 ~/.ssh

mv ~/Downloads/<yourkey>.pem ~/.ssh/<yourkey>.pem

chmod 400 ~/.ssh/<yourkey>.pem

You may run in any region or AZ you wish to try. That said, the majority of testing has been done in AZs us-west-2c & us-west-2d (which have consistently been among the most cost effective & available spot markets for the instance types used in the daylily workflows).

Local machine development has been carried out exclusively on a mac using the zsh shell. bash should work as well (if you have issues with conda w/mac+bash, confirm that after miniconda install and conda init, the correct .bashrc and .bash_profile files were updated by conda init).

suggestion: run things in tmux or screen

Very good odds this will work on any mac and most Linux distros (ubuntu 22.04 is what the cluster nodes run). Windows, I can't say.

Install with brew or apt-get:

- python3, tested with 3.11.0

- git, tested with 2.46.0

- wget, tested with 1.25.0

- tmux (optional, but suggested)

- emacs (optional, I guess, but I'm not sure how to live without it)

./bin/check_prereq_sw.sh

Create the AWS CLI files and directories manually.

mkdir ~/.aws

chmod 700 ~/.aws

touch ~/.aws/credentials

chmod 600 ~/.aws/credentials

touch ~/.aws/config

chmod 600 ~/.aws/config

Edit ~/.aws/config, which should look like:

[default]

region = us-west-2

output = json

[daylily-service]

region = us-west-2

output = json

Edit ~/.aws/credentials, and add your deets, which should look like:

[default]

aws_access_key_id = <default-ACCESS_KEY>

aws_secret_access_key = <default-SECRET_ACCESS_KEY>

region = <REGION>

[daylily-service]

aws_access_key_id = <daylily-service-ACCESS_KEY>

aws_secret_access_key = <daylily-service-SECRET_ACCESS_KEY>

region = <REGION>

- The default profile is used for general AWS CLI commands. daylily-service can be the same as default, but it is best practice not to lean on default and instead be explicit about the intended AWS_PROFILE.

To automatically use a profile other than default, set the AWS_PROFILE environment variable to the profile name you wish to use, e.g. export AWS_PROFILE=daylily-service
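Before moving on, it can help to confirm that the profile you plan to export actually has both keys in the credentials file. The following is a small standard-library sketch, not part of Daylily; the function name `missing_credential_keys` is hypothetical, and it only checks that the keys are present, not that AWS accepts them.

```python
import configparser
import os
from typing import List

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def missing_credential_keys(profile: str, path: str = "~/.aws/credentials") -> List[str]:
    """Return the required keys that `profile` lacks in the credentials file."""
    parser = configparser.ConfigParser()
    parser.read(os.path.expanduser(path))  # a missing file is silently skipped
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not parser.has_option(profile, key)]


if __name__ == "__main__":
    # Falls back to "default" when AWS_PROFILE is not set.
    profile = os.environ.get("AWS_PROFILE", "default")
    missing = missing_credential_keys(profile)
    print(f"{profile}: " + ("ok" if not missing else f"missing {missing}"))
```

An empty list means both keys exist for that profile, which is the precondition for every later step that passes --profile.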

git clone -b $(yq -r '.daylily.git_tag' "config/daylily/daylily_cli_global.yaml") https://github.com/Daylily-Informatics/daylily-ephemeral-cluster.git

# or, if you have set ssh keys with github and intend to make changes:

git clone git@github.com:Daylily-Informatics/daylily-ephemeral-cluster.git

cd daylily-ephemeral-cluster

This repo is cloned to your working environment, cloned again each time a cluster is created, and cloned again for each analysis set executed on the cluster. The version is pinned to a tagged release so that all of these clones work from the same released version. This is accomplished by all clone operations pulling the release via:

echo "Pinned release: "$(yq -r '.daylily.git_tag' "config/daylily_cli_global.yaml")

Further, when attempting to activate an environment on an ephemeral cluster with dy-a, Daylily verifies that the cluster deployment was created with the matching tag, and throws an error if a mismatch is detected. You can also find the tag logged in the cluster yaml created in ~/.config/daylily/<yourclustername>.yaml.

tested with conda version 24.11.1

Install with:

./bin/install_miniconda

- Open a new terminal/shell, and conda should be available: conda --version.

from daylily root dir

./bin/init_dayec

conda activate DAY-EC

# CLI commands from ./bin are now on your PATH.

# Re-run this after pulling updates if needed.

# (init_dayec performs an editable pip install each run)

# DAY-EC should now be active... did it work?

colr 'did it work?' 0,100,255 255,100,0

daylily-references-public Reference Bucket

- The daylily-references-public bucket is preconfigured with all the reference data needed to run the various pipelines, and also includes GIAB reads for automated concordance.

- This bucket will need to be cloned to a new bucket with the name <YOURPREFIX>-omics-analysis-<REGION>, one for each region you intend to run in.

- These S3 buckets are tightly coupled to the FSx for Lustre filesystems (which allow 1000s of concurrent spot instances to read/write to the shared filesystem, making reference and data management both easier and highly performant).

- Continue for more on this topic.

- Cloning will cost you ~$23 within us-west-2, and up to $110 across regions (one-time cost, per region).

- The bucket will cost ~$14.50/mo to keep hot in us-west-2. It is not advised, but you may opt to remove unused reference data to reduce the monthly cost footprint by up to 65% (ongoing monthly cost).

from your local machine, in the daylily git repo root

You may add/remove/update your copy of the references bucket as you find necessary.

- YOURPREFIX will be used as the bucket name prefix. Please keep it short. The new bucket name will be YOURPREFIX-omics-analysis-REGION and will be created in the region you specify. You may name the buckets in other ways, but this will block you from using the daylily-create-ephemeral-cluster script, which is largely why you're here.

- Cloning it will take 1 to many hours.
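Because the cluster-creation script depends on this naming pattern, it is worth sanity-checking a prefix before starting a multi-hour copy. The sketch below composes the name described above and applies the general S3 bucket naming limits (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit). The function name is hypothetical and this is not how the Daylily scripts validate names.

```python
import re


def references_bucket_name(prefix: str, region: str) -> str:
    """Compose <prefix>-omics-analysis-<region> and check basic S3 naming rules."""
    name = f"{prefix}-omics-analysis-{region}"
    # 3-63 chars, lowercase letters/digits/dots/hyphens, alphanumeric at both ends
    if not re.fullmatch(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]", name):
        raise ValueError(f"not a valid S3 bucket name: {name!r}")
    return name


print(references_bucket_name("myorg", "us-west-2"))
```

A short prefix matters because the fixed suffix already consumes a good part of the 63-character limit.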

Use the following script

running in a tmux/screen session is advised as the copy may take 1-many hours

conda activate DAY-EC

# help

./bin/create_daylily_omics_analysis_s3.sh -h

export AWS_PROFILE=<your_profile>

BUCKET_PREFIX=<your_prefix>

REGION=us-west-2

# dryrun

./bin/create_daylily_omics_analysis_s3.sh --disable-warn --region $REGION --profile $AWS_PROFILE --bucket-prefix $BUCKET_PREFIX

# run for real

./bin/create_daylily_omics_analysis_s3.sh --disable-warn --region $REGION --profile $AWS_PROFILE --bucket-prefix $BUCKET_PREFIX --disable-dryrun

The helper script is a thin wrapper around the daylily-omics-references CLI (version 0.1.0). Activating the DAY-EC conda environment installs the dependency automatically. You can also invoke the CLI directly once the DAY-EC environment is active (the editable install makes it available from any working directory):

daylily-omics-references --profile $AWS_PROFILE --region $REGION \
clone --bucket-prefix $BUCKET_PREFIX --version 0.7.131c --execute

You may visit the S3 console to confirm the bucket is being cloned as expected. The copy should take ~1hr if within us-west-2, and much longer across AZs.

from your local machine, in the daylily git repo root

You may choose any AZ to build and run an ephemeral cluster in (assuming resources both exist and can be requisitioned in the AZ). Run the following command to scan the spot markets in the AZ's you are interested in assessing (reference buckets do not need to exist in the regions you scan, but to ultimately run there, a reference bucket is required):

this command will take ~5min to complete, and much longer if you expand to all possible AZs, run with --help for all flags

conda activate DAY-EC

export AWS_PROFILE=daylily-service

REGION=us-west-2

OUT_TSV=./init_daylily_cluster.tsv

./bin/check_current_spot_market_by_zones.py -o $OUT_TSV --profile $AWS_PROFILE

30.0-cov genome @ vCPU-min per x align: 307.2 vCPU-min per x snvcall: 684.0 vCPU-min per x other: 0.021 vCPU-min per x svcall: 19.0

╒═══════════════════╤════════════╤═══════════╤═══════════╤═══════════╤════════════╤═══════════╤═══════════╤═══════════╤═══════════╤═══════════╤═══════════╤═══════════╤═══════════╤════════════╤════════════╕

│ Region AZ │ # │ Median │ Min │ Max │ Harmonic │ Spot │ FASTQ │ BAM │ CRAM │ snv │ snv │ sv │ Other │ $ per │ ~ EC2 $ │

│ │ Instance │ Spot $ │ Spot │ Spot │ Mean │ Stab- │ (GB) │ (GB) │ (GB) │ VCF │ gVCF │ VCF │ (GB) │ vCPU min │ │

│ │ Types │ │ $ │ $ │ Spot $ │ ility │ │ │ │ (GB) │ (GB) │ (GB) │ │ harmonic │ harmonic │

╞═══════════════════╪════════════╪═══════════╪═══════════╪═══════════╪════════════╪═══════════╪═══════════╪═══════════╪═══════════╪═══════════╪═══════════╪═══════════╪═══════════╪════════════╪════════════╡

│ 1. us-west-2a │ 6 │ 3.55125 │ 2.53540 │ 9.03330 │ 3.63529 │ 6.49790 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00032 │ 9.56365 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 2. us-west-2b │ 6 │ 2.69000 │ 0.93270 │ 7.96830 │ 2.06066 │ 7.03560 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00018 │ 5.42115 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 3. us-west-2c │ 6 │ 2.45480 │ 0.92230 │ 5.14490 │ 1.80816 │ 4.22260 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00016 │ 4.75687 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 4. us-west-2d │ 6 │ 1.74175 │ 0.92420 │ 4.54950 │ 1.71232 │ 3.62530 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00015 │ 4.50474 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 5. us-east-1a │ 6 │ 3.21395 │ 1.39280 │ 4.56180 │ 2.37483 │ 3.16900 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00021 │ 6.24766 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 6. us-east-1b │ 6 │ 3.06900 │ 1.01450 │ 6.97430 │ 2.48956 │ 5.95980 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00022 │ 6.54950 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 7. us-east-1c │ 6 │ 3.53250 │ 1.11530 │ 4.86300 │ 2.69623 │ 3.74770 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00023 │ 7.09320 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 8. us-east-1d │ 6 │ 2.07570 │ 0.92950 │ 6.82380 │ 1.79351 │ 5.89430 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00016 │ 4.71835 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 9. ap-south-1a │ 6 │ 1.78610 │ 1.01810 │ 3.51470 │ 1.63147 │ 2.49660 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00014 │ 4.29204 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 10. ap-south-1b │ 6 │ 1.29050 │ 1.00490 │ 2.24560 │ 1.37190 │ 1.24070 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00012 │ 3.60917 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 11. ap-south-1c │ 6 │ 1.26325 │ 0.86570 │ 1.42990 │ 1.18553 │ 0.56420 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00010 │ 3.11886 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 12. ap-south-1d │ 0 │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 13. eu-central-1a │ 6 │ 5.88980 │ 2.02420 │ 15.25590 │ 4.32093 │ 13.23170 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00038 │ 11.36744 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 14. eu-central-1b │ 6 │ 2.00245 │ 1.11620 │ 2.97580 │ 1.72476 │ 1.85960 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00015 │ 4.53746 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 15. eu-central-1c │ 6 │ 1.90570 │ 1.15920 │ 3.36620 │ 1.71591 │ 2.20700 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00015 │ 4.51419 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 16. ca-central-1a │ 6 │ 3.89545 │ 3.42250 │ 5.23380 │ 4.04001 │ 1.81130 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00035 │ 10.62839 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 17. ca-central-1b │ 6 │ 3.81865 │ 3.18960 │ 4.92650 │ 3.86332 │ 1.73690 │ 49.50000 │ 39.00000 │ 13.20000 │ 0.12000 │ 1.20000 │ 0.12000 │ 0.00300 │ 0.00034 │ 10.16355 │

├───────────────────┼────────────┼───────────┼───────────┼───────────┼────────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼───────────┼────────────┼────────────┤

│ 18. ca-central-1c │ 0 │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │ nan │

╘═══════════════════╧════════════╧═══════════╧═══════════╧═══════════╧════════════╧═══════════╧═══════════╧═══════════╧═══════════╧═══════════╧═══════════╧═══════════╧═══════════╧════════════╧════════════╛

Select the availability zone by number:

The script will go on to approximate the entire cost of analysis: EC2 costs, data transfer costs and storage cost. Both the active analysis cost, and also the approximate costs of storing analysis results per month.
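The "~ EC2 $ harmonic" column can be reproduced by hand from the numbers in the output, which is a useful check on how to read the table. The sketch below multiplies the per-x vCPU-minute figures by the 30x coverage and prices them at the harmonic-mean spot rate. One input is an assumption: the 192 vCPUs per instance is inferred from the table (it is the value that makes the arithmetic match), not something stated in the text. The "Spot Stability" column likewise appears to be simply max minus min spot price.

```python
# vCPU-minutes per 1x of coverage, copied from the header line of the output
VCPU_MIN_PER_X = {"align": 307.2, "snvcall": 684.0, "other": 0.021, "svcall": 19.0}
COVERAGE = 30.0
VCPUS_PER_INSTANCE = 192  # assumption: inferred from the table, not stated in the text


def ec2_cost(harmonic_mean_spot_usd_per_hr: float) -> float:
    """Approximate EC2 cost (USD) for one genome at COVERAGE x."""
    total_vcpu_min = COVERAGE * sum(VCPU_MIN_PER_X.values())
    usd_per_vcpu_min = harmonic_mean_spot_usd_per_hr / (VCPUS_PER_INSTANCE * 60)
    return total_vcpu_min * usd_per_vcpu_min


def spot_spread(min_price: float, max_price: float) -> float:
    """Max minus min spot price, which matches the 'Spot Stability' column."""
    return max_price - min_price


print(f"us-west-2a: ${ec2_cost(3.63529):.2f}")  # table lists 9.56365
print(f"us-west-2d: ${ec2_cost(1.71232):.2f}")  # table lists 4.50474
print(f"us-west-2a spread: {spot_spread(2.53540, 9.03330):.4f}")  # table lists 6.49790
```

Since the compute demand is the same in every row, the ranking of AZs by estimated cost is driven entirely by the harmonic-mean spot price.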

from your local machine, in the daylily git repo root

Once you have selected an AZ && have a reference bucket ready in the region this AZ exists in, you are ready to proceed to creating an ephemeral cluster.

The following script will check a variety of required resources, attempt to create some if missing, and then prompt you to select various options, all of which are used to create a new ParallelCluster yaml config. That config is in turn used to create the cluster via CloudFormation. The template yaml file can be checked out here.

export AWS_PROFILE=daylily-service

REGION_AZ=us-west-2c

./bin/daylily-create-ephemeral-cluster --region-az $REGION_AZ --profile $AWS_PROFILE

# And to bypass the non-critical warnings (which is fine, not all can be resolved)

./bin/daylily-create-ephemeral-cluster --region-az $REGION_AZ --profile $AWS_PROFILE --pass-on-warn

# If you created an inline policy with a name other than daylily-service-cluster-policy, you will need to acknowledge the warning to proceed (assuming the policy permissions were granted other ways)

During the run the script invokes the daylily-omics-references CLI to validate the selected reference bucket, preventing misconfigured buckets from reaching the cluster provisioning phase.

Configuration for every prompt in bin/daylily-create-ephemeral-cluster lives in a user-managed YAML file. Copy the template config/daylily_ephemeral_cluster_template.yaml to a writable location (for example config/daylily_ephemeral_cluster.yaml) and set the environment variable DAY_EX_CFG to point at it:

cp config/daylily_ephemeral_cluster_template.yaml config/daylily_ephemeral_cluster.yaml

export DAY_EX_CFG=$PWD/config/daylily_ephemeral_cluster.yaml

- Each key in the template corresponds to information collected by the script. Provide a concrete value to skip the prompt or leave the value set to PROMPTUSER to request the information interactively.

- When the script encounters an invalid configured value (for example, an ARN that no longer exists), the error is logged and the prompt is shown so you can supply a working value on the fly.

- If a prompt queries AWS for options and only one choice is returned (key pair, S3 bucket, subnets, IAM policy, etc.), the script automatically selects that single option and notes the selection in the terminal.

- After a cluster is created, the script saves a timestamped snapshot of the resolved configuration (including any values you entered interactively) in ~/.config/daylily/. Any invalid configuration values are recorded in the snapshot with an +ERROR suffix so they can be fixed before the next run.

- Remember to adjust the max_count_*I settings to match your available spot-instance quotas to avoid deadlocks during provisioning.
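The PROMPTUSER convention described above can be pictured as a simple split of the config into values that are already decided and keys that will still trigger a prompt. The sketch below operates on a plain dictionary to stay dependency-free; the key names in the example are hypothetical (check the template for the real ones), and the actual script also validates values against AWS, which this does not attempt.

```python
from typing import Dict, List, Tuple

PROMPT_SENTINEL = "PROMPTUSER"


def split_config(config: Dict[str, object]) -> Tuple[Dict[str, object], List[str]]:
    """Separate keys with concrete values from keys that will be prompted for."""
    resolved: Dict[str, object] = {}
    to_prompt: List[str] = []
    for key, value in config.items():
        if value == PROMPT_SENTINEL:
            to_prompt.append(key)
        else:
            resolved[key] = value
    return resolved, to_prompt


# Hypothetical key names, for illustration only.
example = {
    "cluster_name": "myorg-omics-analysis",
    "fsx_size": "PROMPTUSER",
    "enforce_budget": "PROMPTUSER",
}
resolved, to_prompt = split_config(example)
print("preset:", resolved)
print("will prompt for:", to_prompt)
```

Filling in every key removes the interactive prompts for values that validate, which is what makes unattended cluster creation possible.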

The gist of the flow of the script is as follows:

Your AWS credentials will be auto-detected and used to query the appropriate resources to select from. You will be prompted to:

- (one per region) Select the full path to your $HOME/.ssh/.pem (from detected .pem files).

- (one per region) Select the S3 bucket you created and seeded; options presented will be any with names ending in -omics-analysis. The script now verifies the bucket with the daylily-omics-references CLI and will halt if required data are missing. Or you may select 1 and manually enter an S3 URL.

- (one per region-az) Select the Public Subnet ID created when the CloudFormation script was run earlier. If none are detected, this will be auto-created for you via CloudFormation.

- (one per region-az) Select the Private Subnet ID created when the CloudFormation script was run earlier (from the CloudFormation stack output). If none are detected, this will be auto-created for you via CloudFormation.

- (one per AWS account) Select the Policy ARN created when the CloudFormation script was run earlier. If none are detected, this will be auto-created for you via CloudFormation.

- (one unique name per region) Enter a name to assign to your new ephemeral cluster (e.g. <myorg>-omics-analysis).

- (one per AWS account) Enter info to create a daylily-global budget (allowed user-strings: daylily-service, alert email: your@email, budget amount: 100).

- (one per unique cluster name) Enter info to create a daylily-ephemeral-cluster budget (allowed user-strings: daylily-service, alert email: your@email, budget amount: 100).

- Enforce budgets? (default is no; yes is not fully tested)

- Choose the CloudFormation yaml template (default is prod_cluster.yaml).

- Choose the FSx size (default is 4.8TB).

- Opt to store detailed logs or not (default is no).

- Choose if you wish to AUTO-DELETE the root EBS volumes on cluster termination (default is NO; be sure to clean these up if you keep this as no).

- Choose if you wish to RETAIN the FSx filesystem on cluster termination (default is YES; be sure to clean these up if you keep this as yes).

The script will take all of the info entered and proceed as follows:

- A process runs to poll and populate maximum spot prices for the instance types used in the cluster.
- A `CLUSTERNAME_cluster.yaml` and a `CLUSTERNAME_init_template_<timestamp>.yaml` file are created in `~/.config/daylily/`; the initialization template captures the collected values (replacing the legacy `_cluster_init_vals.txt`).
- First, a dry-run cluster creation is attempted. If successful, creation proceeds. If unsuccessful, the process terminates.
- The ephemeral cluster creation begins and a monitoring script watches for its completion. This can take from 20m to an hour, depending on the region, the size of FSx requested, S3 size, etc. A max timeout of 1hr is set in the cluster config yaml, which causes a failure if the cluster is not up in that time.

The terminal will block, a status message will slowly scroll by, and after ~20m, if successful, the headnode config will begin (you may be prompted to select the cluster to configure if there are multiple in the AZ). The headnode config will set up a few final bits and then run a few tests (you should see a few magenta success bars during this process).
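The "watch until complete, fail after an hour" behaviour described above is a poll-with-deadline loop. The sketch below is an illustration under stated assumptions, not the monitoring script itself: `wait_for_cluster` is a hypothetical name, the status is supplied by a caller-provided function, and the status strings assume the `clusterStatus` values that ParallelCluster reports (such as `CREATE_IN_PROGRESS` and `CREATE_COMPLETE`).

```python
import time
from typing import Callable


def wait_for_cluster(
    get_status: Callable[[], str],
    timeout_s: float = 3600.0,  # mirrors the 1hr limit mentioned above
    poll_s: float = 30.0,       # assumed polling interval
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll get_status() until creation completes, fails, or the deadline passes."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status == "CREATE_COMPLETE":
            return status
        if status.endswith("FAILED"):
            raise RuntimeError(f"cluster creation failed: {status}")
        if clock() >= deadline:
            raise TimeoutError(f"cluster not up after {timeout_s:.0f}s (last status: {status})")
        sleep(poll_s)
```

Injecting `sleep` and `clock` lets the loop be exercised in tests without waiting in real time.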

If all is well, you will see the following message:

You can now SSH into the head node with the following command:

ssh -i /Users/daylily/.ssh/omics-analysis-b.pem ubuntu@52.24.138.65

Once logged in, as the 'ubuntu' user, run the following commands:

cd ~/projects/daylily

source dyinit

source dyinit --project PROJECT

dy-a local hg38 # the other option being b37

export DAY_CONTAINERIZED=false # or true to use pre-built container of all analysis envs. false will create each conda env as needed

dy-r help

"Would you like to start building various caches needed to run jobs? [y/n]"

- (optional) You may select `y` or `n` to begin building the cached environments on the cluster. The caches are created automatically, if missing, whenever a job is submitted. They should only need to be created once per ephemeral cluster (the compute nodes all share the caches with the headnode). The build can take 15-30m the first time.
- You are ready to roll.

During cluster creation, and especially if you need to debug a failure, go to the `CloudFormation` console and look at the `CLUSTER-NAME` stack. The `Events` tab gives a good idea of what is happening, the `Outputs` tab gives the IP of the headnode, and the `Resources` tab gives the ARN of the FSx filesystem, which you can use in the FSx console to check the status of the filesystem creation.

`./bin/daylily-run-ephemeral-cluster-remote-tests $pem_file $region $AWS_PROFILE`

A successful test will look like this:

You may confirm the cluster creation was successful with the following command (alternatively, use the PCUI console).

`pcluster list-clusters --region $REGION`

See the instructions here to confirm the headnode is configured and ready to run the daylily pipeline.

Every resource created by daylily is tagged to allow real-time monitoring of costs, at whatever level of granularity you desire. This is intended not only as a tool for managing costs, but also as an important metric for assessing the utility of various tools going forward (are the costs of a tool worth the value of the data it produces, and how does the tool compare with others in the same class?).

During setup, each ephemeral cluster can be configured to enforce budgets, meaning job submission is blocked once the specified budget has been exceeded.

For the default configuration, once running, the hourly cost will be ~$1.68 (note: the cluster is intended to be up only when in use, not kept hot and inactive). The cost drivers are:

- `r7i.2xlarge` on-demand headnode = `$0.57 / hr`.
- `fsx` filesystem = `$1.11 / hr` (for 4.8TB, which is the default size for daylily. You do not pay by usage, but by size requested).
- No other EC2 or storage costs are incurred (beyond the S3 storage used for the ref bucket and your sample data storage).

There are the idle hourly costs, plus...

For v192 spots, the cost is generally $1 to $3 per hour (if you are discriminating in your AZ selection, the cost should be closer to $1/hr).

- You pay for spot instances as they spin up and until they are shut down (which all happens automatically). The max spot price per resource group limits the max costs (as do the max number of instances allowed per group, and your quotas).
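As a quick check of the arithmetic, the idle cost is the sum of the two drivers above, and the running cost adds whatever spots are up at that moment. This is a back-of-envelope sketch: the function name is made up, and the rates are the figures quoted in this section, which will drift with AWS pricing.

```python
HEADNODE_USD_PER_HR = 0.57  # r7i.2xlarge on-demand, as quoted above
FSX_USD_PER_HR = 1.11       # 4.8TB FSx filesystem, as quoted above


def hourly_cost(spot_instances: int = 0, spot_usd_per_hr: float = 0.0) -> float:
    """Estimated cluster cost per hour: idle cost plus currently running spots."""
    idle = HEADNODE_USD_PER_HR + FSX_USD_PER_HR
    return round(idle + spot_instances * spot_usd_per_hr, 2)


if __name__ == "__main__":
    print(hourly_cost())         # idle cluster
    print(hourly_cost(10, 1.0))  # ten spots at $1/hr each
```

With ten spots at $1/hr each, the compute cost is several times the idle cost, which is why AZ selection matters more than the base cluster cost.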

There are no anticipated or observed costs in running the default daylily pipeline, as all data is already located in the same region as the cluster. The cost to reflect data from FSx back to S3 is effectively $0.

Depending on your data management strategy, these costs will be zero or more than zero.

- You can use FSx to bounce results back to the mounted S3 bucket and then move the results elsewhere, or move them from the cluster to another bucket (the former I know has no impact on performance; the latter might interfere with network latency?).

- You are paying for the FSx filesystem, which is represented in the idle cluster hourly cost. There are no costs beyond this for storage.

- HOWEVER, you are responsible for sizing the FSx filesystem for your workload and for staying on top of moving data off of it as analysis completes. FSx is not intended as a long-term storage location, but is very much a high-performance scratch space.

If it's not being used, there is no cost incurred.

- The reference bucket will cost ~$14.50/mo to keep available in `us-west-2`, and one will be needed in any region you intend to run in.
- You should not store your sample or analysis data here long term.
- ONETIME per-region reference bucket cloning costs $10-$27.
- I argue that it is unnecessary to store `fastq` files once bams are (properly) created, as the bam can reconstitute the fastq. So the cost of storing fastqs beyond initial analysis should be `$0.00`.

I suggest:

- CRAM (which is ~1/3 the size of BAM and costs correspondingly less in storage and transfer).
- gvcf.gz, which are bigger than vcf.gz but contain more information and are more useful for future analysis. Note: bcf and vcf.gz sizes are effectively the same and do not justify the overhead of managing the additional format, IMO.

Install instructions here. Launch it using the public subnet created in your cluster and the vpcID this public subnet belongs to. These go in `ImageBuilderSubnetId` and `ImageBuilderVpcId` respectively.

You should be sure to enable SSM which allows remote access to the nodes from the PCUI console. https://docs.aws.amazon.com/systems-manager/latest/userguide/session-manager-getting-started-ssm-user-permissions.html

Use a preconfigured template in the region you have built a cluster in, they can be found here.

You will need to enter the following (all other params may be left as default):

- `Stack name`: parallelcluster-ui
- `Admin's Email`: your email (a confirmation email with the password for the UI will be sent to this address; the password cannot be reset if lost).
- `ImageBuilderVpcId`: the vpcID of the public subnet created during your cluster creation. Visit the VPC console and look for a name like `daylily-cs-<REGION-AZ>` (the AZ is digested to text: `us-west-2d` becomes `us-west-twod`).
- `ImageBuilderSubnetId`: the subnetID of the public subnet created during your cluster creation. Visit the VPC console to find this (possibly navigating to it from the EC2 headnode).
- Check the 2 acknowledgement boxes, and click `Create Stack`.

This will boot you to cloudformation, and will take ~10m to complete. Once complete, you will receive an email with the password to the PCUI console.

To find the PCUI url, visit the Outputs tab of the parallelcluster-ui stack in the cloudformation console, the url for ParallelClusterUIUrl is the one you should use. You use the entered email and the password emailed to login the first time.

The PCUI stuff is not required, but very VERY awesome.

When you use the SSM web browser `shell` via PCUI, you will need to run `sudo su - ubuntu` to switch to the user set up to run the various pipelines.

Go to the IAM Dashboard and, under roles, search for the roles `ParallelClusterUIUserRole-*` and `ParallelClusterLambdaRole-*`. For each, add an inline JSON policy as follows (you will need to enumerate all reference buckets you wish to be able to edit with PCUI). Name it something like `pcui-additional-s3-access`. Even with these changes, you may be restricted to editing only clusters within the same region PCUI was started in.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::YOURBUCKETNAME-USWEST2",

"arn:aws:s3:::YOURBUCKETNAME-EUCENTRAL1",

"arn:aws:s3:::YOURBUCKETNAME-APSOUTH1"

]

},

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:PutObject"

],

"Resource": [

"arn:aws:s3:::YOURBUCKETNAME-USWEST2/*",

"arn:aws:s3:::YOURBUCKETNAME-EUCENTRAL1/*",

"arn:aws:s3:::YOURBUCKETNAME-APSOUTH1/*"

]

},

{

"Effect": "Allow",

"Action": [

"fsx:*"

],

"Resource": "*"

}

]

}

Visit the URL created when you built PCUI.
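Because the inline IAM policy shown earlier must enumerate every reference bucket, it can be convenient to generate it from a list of bucket names rather than edit the JSON by hand. The sketch below reproduces the same three statements; `pcui_s3_policy` is a hypothetical helper and the bucket names in the demo are placeholders.

```python
import json
from typing import Iterable


def pcui_s3_policy(bucket_names: Iterable[str]) -> dict:
    """Build the inline policy document for the given reference buckets."""
    buckets = list(bucket_names)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [f"arn:aws:s3:::{b}" for b in buckets],
            },
            {
                # Object-level actions apply to keys under the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{b}/*" for b in buckets],
            },
            {"Effect": "Allow", "Action": ["fsx:*"], "Resource": "*"},
        ],
    }


if __name__ == "__main__":
    # Placeholder names; substitute your own per-region reference buckets.
    print(json.dumps(pcui_s3_policy(["YOURBUCKETNAME-USWEST2", "YOURBUCKETNAME-EUCENTRAL1"]), indent=2))
```

The printed JSON can be pasted into the IAM console's inline policy editor for each of the two roles.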

`conda activate DAY-EC`

WARNING: you are advised to run `aws configure set region <REGION>` to set the region for use with the pcluster CLI, to avoid the errors caused when the `--region` flag is omitted.

pcluster -h

usage: pcluster [-h]

{list-clusters,create-cluster,delete-cluster,describe-cluster,update-cluster,describe-compute-fleet,update-compute-fleet,delete-cluster-instances,describe-cluster-instances,list-cluster-log-streams,get-cluster-log-events,get-cluster-stack-events,list-images,build-image,delete-image,describe-image,list-image-log-streams,get-image-log-events,get-image-stack-events,list-official-images,configure,dcv-connect,export-cluster-logs,export-image-logs,ssh,version}

...

pcluster is the AWS ParallelCluster CLI and permits launching and management of HPC clusters in the AWS cloud.

options:

-h, --help show this help message and exit

COMMANDS:

{list-clusters,create-cluster,delete-cluster,describe-cluster,update-cluster,describe-compute-fleet,update-compute-fleet,delete-cluster-instances,describe-cluster-instances,list-cluster-log-streams,get-cluster-log-events,get-cluster-stack-events,list-images,build-image,delete-image,describe-image,list-image-log-streams,get-image-log-events,get-image-stack-events,list-official-images,configure,dcv-connect,export-cluster-logs,export-image-logs,ssh,version}

list-clusters Retrieve the list of existing clusters.

create-cluster Create a managed cluster in a given region.

delete-cluster Initiate the deletion of a cluster.

describe-cluster Get detailed information about an existing cluster.

update-cluster Update a cluster managed in a given region.

describe-compute-fleet

Describe the status of the compute fleet.

update-compute-fleet

Update the status of the cluster compute fleet.

delete-cluster-instances

Initiate the forced termination of all cluster compute nodes. Does not work with AWS Batch clusters.

describe-cluster-instances

Describe the instances belonging to a given cluster.

list-cluster-log-streams

Retrieve the list of log streams associated with a cluster.

get-cluster-log-events

Retrieve the events associated with a log stream.

get-cluster-stack-events

Retrieve the events associated with the stack for a given cluster.

list-images Retrieve the list of existing custom images.

build-image Create a custom ParallelCluster image in a given region.

delete-image Initiate the deletion of the custom ParallelCluster image.

describe-image Get detailed information about an existing image.

list-image-log-streams

Retrieve the list of log streams associated with an image.

get-image-log-events

Retrieve the events associated with an image build.

get-image-stack-events

Retrieve the events associated with the stack for a given image build.

list-official-images

List Official ParallelCluster AMIs.

configure Start the AWS ParallelCluster configuration.

dcv-connect Permits to connect to the head node through an interactive session by using NICE DCV.

export-cluster-logs

Export the logs of the cluster to a local tar.gz archive by passing through an Amazon S3 Bucket.

export-image-logs Export the logs of the image builder stack to a local tar.gz archive by passing through an Amazon S3 Bucket.

ssh Connects to the head node instance using SSH.

version Displays the version of AWS ParallelCluster.

For command specific flags, please run: "pcluster [command] --help"

`pcluster list-clusters --region us-west-2`

`pcluster describe-cluster -n $cluster_name --region us-west-2`

For example, to get the public IP of the head node:

`pcluster describe-cluster -n $cluster_name --region us-west-2 | grep 'publicIpAddress' | cut -d '"' -f 4`

From your local shell, you can ssh into the head node of the cluster using the following command.

`ssh -i $pem_file ubuntu@$cluster_ip_address`

`export AWS_PROFILE=<profile_name>`

`bin/daylily-ssh-into-headnode`

Is daylily CLI Available & Working

cd ~/projects/daylily-omics-analysis

. dyinit # initializes the daylily cli

dy-a local hg38 # activates the local config using reference hg38, the other build available is b37

If `. dyinit` works but `dy-a local` fails, try `dy-b BUILD`.

This should produce a magenta WORKFLOW SUCCESS message and RETURN CODE: 0 at the end of the output. If so, you are set. If not, see the next section.

If there is no ~/projects/daylily directory, or the dyinit command is not found, the headnode configuration is incomplete.

Attempt To Complete Headnode Configuration

From the remote terminal you created the cluster with, run the following commands to complete the headnode configuration.

conda activate DAY-EC

./bin/daylily-cfg-headnode $PATH_TO_PEM $CLUSTER_AWS_REGION $AWS_PROFILE

# This script now installs Miniconda and initializes the DAY-EC environment on the

# headnode after cloning `daylily-ephemeral-cluster` to `~/projects/`. If you need

# to re-run that setup manually from the headnode, run:

# cd ~/projects/daylily-ephemeral-cluster

# ./bin/install_miniconda && ./bin/init_dayec

If the problem persists, ssh into the headnode and, as the ubuntu user, attempt to run the commands that the `daylily-cfg-headnode` script runs.

Confirm /fsx/ directories are present

ls -lth /fsx/

total 130K

drwxrwxrwx 3 root root 33K Sep 26 09:22 environments

drwxr-xr-x 5 root root 33K Sep 26 08:58 data

drwxrwxrwx 5 root root 33K Sep 26 08:35 analysis_results

drwxrwxrwx 3 root root 33K Sep 26 08:35 resources

The `day-clone` script is available to the ubuntu user on the headnode. It wraps the fetching and creation of the various approved runnable workflows/pipelines.

day-clone --help

usage: day-clone [-h] [-d DESTINATION] [-t GIT_TAG] [-r GIT_REPO] [-c CLONE_ROOT]

[-u USER_NAME] [-w {https,ssh}] [--repository REPOSITORY] [--list]

Clone Daylily analysis repositories into the FSx analysis workspace.

options:

-h, --help show this help message and exit

-d, --destination DESTINATION

Name of the analysis workspace directory to create under the user-

specific root.

-t, --git-tag GIT_TAG

Git branch or tag to clone. Defaults to the repository's configured

default.

-r, --git-repo GIT_REPO

Override the git repository URL to clone. Overrides --repository.

-c, --clone-root CLONE_ROOT

Root directory where analysis workspaces are created. Defaults to the

configured analysis_root.

-u, --user-name USER_NAME

User directory to create within the clone root. Defaults to the

current user.

-w, --which-one {https,ssh}

Clone using https or ssh (default: https).

--repository REPOSITORY

Key of the repository defined in daylily_available_repositories.yaml

to clone.

--list List available repositories and exit.

`day-clone -d dayoa --repository daylily-omics-analysis -w https`

`cd /fsx/analysis_results/ubuntu/dayoa/daylily-omics-analysis`

Output looks like:

Great success! Daylily repository cloned.

Repository: https://github.com/Daylily-Informatics/daylily-omics-analysis.git

Reference : 0.7.333

Location : /fsx/analysis_results/ubuntu/dayoa/daylily-omics-analysis

To get started:

cd /fsx/analysis_results/ubuntu/dayoa/daylily-omics-analysis

# initialize and run the analysis repository per its documentation

Please see the testing section of the daylily-omics-analysis README.

The sample staging helper that previously lived in daylily-omics-analysis now ships with this repository. Use it to turn an

analysis_samples.tsv file into staged FASTQs under /fsx/staged_sample_data and the Snakemake-style config tables (samples.tsv

and units.tsv) that the workflows consume. A template TSV is available at

etc/analysis_samples_template.tsv.

cd ~/projects/daylily-ephemeral-cluster

./bin/daylily-stage-analysis-samples-headnode /path/to/analysis_samples.tsv

# optionally override the stage target

./bin/daylily-stage-analysis-samples-headnode /path/to/analysis_samples.tsv /fsx/custom_dir

The helper defaults to `/fsx/staged_sample_data` and writes `samples.tsv` and `units.tsv` to that directory after staging.

./bin/daylily-stage-samples-from-local-to-headnode --profile <aws_profile> --region <aws_region> \

--pem ~/.ssh/<your-key>.pem --cluster <cluster-name> /path/to/analysis_samples.tsv

When values such as the region, cluster name, or PEM file are omitted, the script will prompt for them. The workflow is:

- Upload the TSV to the selected head node.
- Run `daylily-stage-analysis-samples-headnode` remotely so data are staged into `/fsx/staged_sample_data/<timestamp>/`.
- Download `samples.tsv` and `units.tsv` back next to the local TSV (disable with `--no-download`).
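The three steps above map naturally onto one `scp` upload, one `ssh` invocation, and two `scp` downloads. The sketch below only builds those command lines so they can be inspected; it is not how the real helper is implemented. The function name, the `/tmp` upload location, and the need to pass the stage directory explicitly are all assumptions made for illustration.

```python
from pathlib import Path
from typing import List, Optional

# Path of the head-node helper, taken from the commands shown earlier.
REMOTE_HELPER = "~/projects/daylily-ephemeral-cluster/bin/daylily-stage-analysis-samples-headnode"


def staging_commands(
    pem: str,
    host: str,
    local_tsv: str,
    stage_dir: Optional[str] = None,  # e.g. /fsx/staged_sample_data/<timestamp>
    user: str = "ubuntu",
    remote_tmp: str = "/tmp",         # assumed upload location
) -> List[List[str]]:
    """Return argv lists for upload, remote staging, and (optionally) download."""
    target = f"{user}@{host}"
    remote_tsv = f"{remote_tmp}/{Path(local_tsv).name}"
    commands = [
        ["scp", "-i", pem, local_tsv, f"{target}:{remote_tsv}"],       # 1. upload the TSV
        ["ssh", "-i", pem, target, f"{REMOTE_HELPER} {remote_tsv}"],   # 2. stage remotely
    ]
    if stage_dir is not None:
        local_dir = str(Path(local_tsv).parent)
        for name in ("samples.tsv", "units.tsv"):                      # 3. fetch config tables
            commands.append(["scp", "-i", pem, f"{target}:{stage_dir}/{name}", local_dir])
    return commands
```

Each returned list can be handed to `subprocess.run(cmd, check=True)`; omitting `stage_dir` skips the download step.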

This preserves the head node staging behaviour while allowing the process to be initiated during cluster provisioning.

After staging samples you can kick off the default daylily-omics-analysis workflow from the same machine that created the cluster:

./bin/daylily-run-omics-analysis-headnode --profile <aws_profile> --region <aws_region> \

--pem ~/.ssh/<your-key>.pem --cluster <cluster-name>

The helper locates the most recent staging run (or a directory you specify with `--stage-dir`), clones the analysis repository via `day-clone`, copies the generated `config/samples.tsv` and `config/units.tsv`, and launches `dy-r` inside a tmux session on the head node. Attach with `tmux attach -t daylily-omics-analysis` after logging in via SSH to monitor progress. Use options such as `--target`, `--jobs`, or `--dy-command` to tailor the run.
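Locating "the most recent staging run" can be as simple as taking the newest timestamped directory that contains both config tables. The sketch below assumes the directory names under the staging root are timestamps that sort lexicographically; that assumption and the function name are illustrative and not confirmed against the helper's source.

```python
from pathlib import Path
from typing import Optional


def latest_stage_dir(root: str = "/fsx/staged_sample_data") -> Optional[Path]:
    """Return the newest staging directory holding samples.tsv and units.tsv."""
    candidates = [
        p
        for p in Path(root).iterdir()
        if p.is_dir() and (p / "samples.tsv").is_file() and (p / "units.tsv").is_file()
    ]
    # Assumes names like 20250301T120000, so the largest name is the newest run.
    return max(candidates, key=lambda p: p.name, default=None)
```

Requiring both files skips staging runs that were interrupted before the tables were written.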

Once jobs begin to be submitted, you can monitor from another shell on the headnode (or any compute node) with:

# The compute fleet, only nodes in state 'up' are running spots. 'idle' are defined pools of potential spots not bid on yet.

sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

i8* up infinite 12 idle~ i8-dy-gb64-[1-12]

i32 up infinite 24 idle~ i32-dy-gb64-[1-8],i32-dy-gb128-[1-8],i32-dy-gb256-[1-8]

i64 up infinite 16 idle~ i64-dy-gb256-[1-8],i64-dy-gb512-[1-8]

i96 up infinite 16 idle~ i96-dy-gb384-[1-8],i96-dy-gb768-[1-8]

i128 up infinite 28 idle~ i128-dy-gb256-[1-8],i128-dy-gb512-[1-10],i128-dy-gb1024-[1-10]

i192 up infinite 1 down# i192-dy-gb384-1

i192 up infinite 29 idle~ i192-dy-gb384-[2-10],i192-dy-gb768-[1-10],i192-dy-gb1536-[1-10]

# running jobs, usually reflecting all running node/spots as the spot teardown idle time is set to 5min default.

squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

1 i192 D-strobe ubuntu PD 0:00 1 (BeginTime)

# ST = PD is pending

# ST = CF is a spot has been instantiated and is being configured

# PD and CF sometimes toggle as the spot is configured and then begins running jobs.

squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

1 i192 D-strobe ubuntu R 5:09 1 i192-dy-gb384-1

# ST = R is running

# Also helpful

watch squeue

# also for the headnode

glances

You cannot access compute nodes directly, but you can access them via the head node. From the head node, you can determine whether there are running compute nodes with `squeue`, and use the node names to ssh into them.

`ssh i192-dy-gb384-1`

Warning: this will delete all resources created for the ephemeral cluster, importantly including the FSx filesystem. You must export any analysis results created in `/fsx/analysis_results` from the FSx filesystem back to S3 before deleting the cluster.

- During cluster config, you choose whether FSx and the EBS volumes auto-delete with cluster deletion. If you disable auto-deletion, these idle volumes can begin to cost a lot, so keep an eye on this if you opt to retain them on deletion.

Run:

`./bin/daylily-export-fsx-to-s3 <cluster_name> <region> <export_path:analysis_results>`

- `export_path` should be `analysis_results` or a subdirectory of `analysis_results/*` to export successfully.
- The script will run and report status until complete. If interrupted, the export will not be halted.
- You can visit the FSx console and go to the filesystem details page to monitor the export status in the data repository tab.

- Go to the `fsx` AWS console and select the filesystem for your cluster.
- Under the `Data Repositories` tab, select the `fsx` filesystem and click `Export to S3`. Export can currently only be carried out back to the same S3 bucket that was mounted to the FSx filesystem.
- Specify the export path as `analysis_results` (or be more specific with an `analysis_results/subdir`). The path you enter is relative to the mountpoint of the FSx filesystem on the cluster head and compute nodes, which is `/fsx/`. Start the export. This can take 10+ min. When complete, confirm the data is now visible in the S3 bucket that was exported to. Once you confirm the export was successful, you can delete the cluster (which will delete the FSx filesystem).

Deleting the cluster will delete all resources created for the ephemeral cluster, including the fsx filesystem if not explicitly set to be saved during creation. You must export any analysis results created in /fsx/analysis_results from the fsx filesystem back to s3 before deleting the cluster.

One exception when deleting the ephemeral cluster is the cloudwatch logs created for the cluster will persist after deletion for the number of days specified in the pcluster config. You may delete these manually if you wish to do so via the cloudwatch log group dashboard.

This helper script will guide you through deleting the cluster, and will confirm you have exported data from the fsx filesystem before proceeding.

`AWS_PROFILE=<daylily-service-profile> ./bin/daylily-delete-ephemeral-cluster # then enter the region and cluster name`

Note: this will not modify/delete the S3 bucket mounted to the FSx filesystem, nor will it delete the policy ARN or the private/public subnets used to configure the ephemeral cluster.

The headnode `/root` volume and the FSx filesystem will be deleted if not explicitly flagged to be saved; be sure you have exported FSx->S3 before deleting the cluster.

`pcluster delete-cluster-instances -n <cluster-name> --region us-west-2`

`pcluster delete-cluster -n <cluster-name> --region us-west-2`

- You can monitor the status of the cluster deletion using `pcluster list-clusters --region us-west-2` and/or `pcluster describe-cluster -n <cluster-name> --region us-west-2`. Deletion can take ~10min depending on the complexity of resources created and the FSx filesystem size.

- Navigate to the `Clusters` tab of the PCUI console.
- Select the cluster you wish to delete, and click the `Delete` button.

... For real, use it!

(also, can be done via pcui)

bin/daylily-ssh-into-headnode

alias it for your shell: alias goday="source ~/git_repos/daylily-ephemeral-cluster/bin/daylily-ssh-into-headnode"

- The AWS CloudWatch console can be used to monitor the cluster and the resources it is using. This is a good place to monitor the health of the cluster, in particular the slurm and pcluster logs for the headnode and compute fleet.
- Navigate to your `cloudwatch` console, then select `dashboards`; there will be a dashboard named for the name you used for the cluster. Follow this link (be sure you are in the `us-west-2` region) to see the logs and metrics for the cluster.
- Reports are not automatically created for spot instances, but you may extend this base report as you like. This dashboard is automatically created by `pcluster` for each new cluster you create (and will be deleted when the cluster is deleted).

Daylily relies on a variety of pre-built reference data and resources to run. These are stored in the daylily-references-public bucket. You will need to clone this bucket to a new bucket in your account, once per region you intend to operate in.

This is a design choice based on leveraging the `FSX` filesystem to mount the data to the cluster nodes. Reference data in this S3 bucket are auto-mounted and available to the head and all compute nodes (FSx supports tens of thousands of concurrent connections). Further, as analysis completes on the cluster, you can choose to reflect data back to this bucket (and then stage it elsewhere). Having these references pre-arranged aids reproducibility and allows the cluster to be spun up and down with negligible time required to move or create reference data.

BONUS: the 7 giab google brain 30x ILMN read sets are included with the bucket to standardize benchmarking and concordance testing.

You may add / edit (not advised) / remove data (say, if you never need one of the builds, or don't wish to use the GIAB reads) to suit your needs.

One-time cost of between ~$27 and ~$108 per region to create the bucket.

Monthly S3 standard cost of ~$14/month to continue hosting it.

- Size: 617.2GB, and contains 599 files.
- Source bucket region: `us-west-2`
- Cost to store S3 (standard: $14.20/month, IA: $7.72/month, Glacier: $2.47 to $0.61/month)
- Data transfer costs to clone source bucket
  - within us-west-2: ~$3.40
  - to other regions: ~$58.00
- Accelerated transfer is used for the largest files, and adds ~$24.00 w/in `us-west-2` and ~$50 across regions.
- Cloning w/in `us-west-2` will take ~2hr, and to other regions ~7hrs.
- Moving data between this bucket and the FSx filesystem and back is not charged by size, but by number of objects, at a cost of `$0.005 per 1,000 PUT`. The cost to move 599 objects back and forth once to FSx is `$0.0025` (you do pay for FSx when it is running, which is only when you choose to run analysis).
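The per-request charge is easy to sanity-check. Using the quoted rate of $0.005 per 1,000 PUTs, writing 599 objects once comes to roughly $0.003, the same order of magnitude as the figure above. The function name below is made up for illustration, and the rate is the one quoted in this section rather than a value looked up from current AWS pricing.

```python
def s3_put_cost(n_objects: int, usd_per_1000_puts: float = 0.005) -> float:
    """Request charge in USD for writing n_objects to S3 once."""
    return n_objects * usd_per_1000_puts / 1000


if __name__ == "__main__":
    # 599 objects is the size of the reference bucket described above.
    print(f"${s3_put_cost(599):.4f}")
```

Even at a million objects the request charge is only about $5, so object count matters far less than FSx uptime.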

- Your new bucket name needs to end in `-omics-analysis-REGION` and be unique to your account.
- One bucket must be created per `REGION` you intend to run in.
- The reference data version is currently `0.7`, and will be replicated correctly using the script below.
- The total size of the bucket will be 779.1GB, and the cost of standard S3 storage will be ~$30/mo.
- Copying the daylily-references-public bucket will take ~7hrs using the script below.
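The naming rule above can be checked before attempting a multi-hour clone. The sketch below combines the `-omics-analysis-REGION` suffix requirement with the general S3 bucket naming constraints (3 to 63 characters; lowercase letters, digits, dots, and hyphens). `is_reference_bucket_name` is a hypothetical helper, not part of the daylily tooling.

```python
import re

# General S3 rule: 3-63 chars of lowercase letters, digits, dots and hyphens,
# starting and ending with a letter or digit.
_S3_NAME = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def is_reference_bucket_name(name: str, region: str) -> bool:
    """True if name is a valid S3 bucket name ending in -omics-analysis-<region>."""
    suffix = f"-omics-analysis-{region}"
    # Require a non-empty prefix in front of the mandated suffix.
    has_prefix = name.endswith(suffix) and len(name) > len(suffix)
    return bool(_S3_NAME.match(name)) and has_prefix
```

For example, `is_reference_bucket_name("myorg-omics-analysis-us-west-2", "us-west-2")` passes, while the same name checked against `eu-central-1` does not.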

- `hg38` and `b37` reference data files (including supporting tool-specific files).
- 7 google-brain ~`30x` Illumina 2x150 `fastq.gz` files for all 7 GIAB samples (`HG001,HG002,HG003,HG004,HG005,HG006,HG007`).
- snv and sv truth sets (`v4.2.1`) for all 7 GIAB samples in both `b37` and `hg38`.
- A handful of pre-built conda environments and docker images (for demonstration purposes; you may choose to add to your own instance of this bucket to save on rebuilding envs on new eclusters).
- A handful of scripts and config necessary for the ephemeral cluster to run.

Note: you can choose to eliminate the data for `b37` or `hg38` to save on storage costs. In addition, you may choose to eliminate the GIAB fastq files if you do not intend to run concordance or benchmarking tests (which is advised against, as this framework was developed explicitly to facilitate these types of comparisons in an ongoing way).

FSx filesystems are region-specific and may only interact with S3 buckets in the same region as the filesystem. There are region-specific quotas to be aware of.

- FSx filesystems are extraordinarily fast and massively scalable (both in IO operations and in the number of connections supported; you will be hard pressed to stress this thing out until you have tens of thousands of concurrently connected instances). It is also a pay-to-play product, and is only cost effective to run while in active use.
- Daylily uses a `scratch` type instance, which auto-mounts the region-specific `s3://PREFIX-omics-analysis-REGION/data` directory to the FSx filesystem as `/fsx/data`. `/fsx` is available to the head node and all compute nodes.
- When you delete a cluster, the attached `Fsx Lustre` filesystem will be deleted as well.
- BE SURE YOU REFLECT ANALYSIS RESULTS BACK TO S3 BEFORE DELETING YOUR EPHEMERAL CLUSTER. Do this via the FSx dashboard by creating a data export task to the same S3 bucket you used to seed the FSx filesystem. You will probably wish to export `analysis_results`, which exports everything in `/fsx/analysis_results` back to `s3://PREFIX-omics-analysis-REGION/FSX-export-DATETIME/`. Do not export the entire `/fsx` mount; this is not tested and might try to duplicate your reference data as well! The export can take 10+ min to complete; you can monitor the progress in the FSx dashboard and delete your cluster once the export is complete.
- FSx can only mount one S3 bucket at a time. The analysis_results data moved back to S3 via the export should be moved again to a final destination (within the same region, ideally) for longer-term storage.
- All of this data handling is amenable to automation, and if someone would like to add a cluster delete check which blocks deletion if there is unexported data still on /fsx, that would be awesome.
- Further, you may write to any path in `/fsx` from any instance it is mounted to, except `/fsx/data`, which is read-only and will only update if data mounted from `s3://PREFIX-omics-analysis-REGION/data` is added/deleted/updated (not advised).
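The delete check suggested above (block deletion while unexported data remains on `/fsx`) reduces to a set difference between files on the filesystem and keys in the export location. The sketch below shows only that comparison. The function names are hypothetical, and the assumption that exported keys keep their path relative to `/fsx/` under the export prefix has not been verified against real export output.

```python
from typing import Iterable, List


def unexported_files(
    fsx_paths: Iterable[str],
    s3_keys: Iterable[str],
    mount: str = "/fsx/",
    export_prefix: str = "",  # e.g. "FSX-export-DATETIME/" (assumed key layout)
) -> List[str]:
    """Return FSx paths that have no matching key in the export location."""
    exported = set(s3_keys)
    missing = []
    for path in fsx_paths:
        if not path.startswith(mount):
            continue  # ignore anything outside the FSx mount
        key = export_prefix + path[len(mount):]
        if key not in exported:
            missing.append(path)
    return sorted(missing)


def safe_to_delete(fsx_paths: Iterable[str], s3_keys: Iterable[str], **kwargs) -> bool:
    """True only when every FSx file has been exported."""
    return not unexported_files(fsx_paths, s3_keys, **kwargs)
```

A real check would gather `fsx_paths` by walking `/fsx/analysis_results` on the head node and `s3_keys` by listing the bucket; comparing sizes or checksums as well would also catch partial exports.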

The following directories are created and accessible via /fsx on the headnode and compute nodes.

/fsx/

├── analysis_results

│ ├── cromwell_executions ## in development

│ ├── daylily ## deprecated

│ └── ubuntu ## <<<< run all analyses here <<<<

├── data ## mounted to the s3 bucket PREFIX-omics-analysis-REGION/data

│ ├── cached_envs

│ ├── genomic_data

│ └── tool_specific_resources

├── miners ## experimental & disabled by default

├── resources

│ └── environments ## location of cached conda envs and docker images. so they are only created/pulled once per cluster lifetime.

├── scratch ## scratch space for high IO tools

└── tmp ## tmp used by slurm by default

- Rough draft script is running already, with best guesses for things like compute time per-x coverage, etc.

- A branch has been started for this work, which is reasonably straightforward. Tasks include:

- The AWS parallel cluster slurm snakemake executor, pcluster-slurm is written, but needs some additional features and to be tested at scale.

- ✅ Migrated from the legacy

analysis_manifest.csvto the Snakemakev8.*config/samples/unitsformat (which is much cleaner than the original manifest). - The actual workflow files should need very little tweaking.

- Running Cromwell WDLs is in its early stages; preliminary and still lightly documented work can be found here (using the workflows at https://github.com/wustl-oncology as a starting point).

Before getting into the cool informatics business going on, there is a boatload of complex ops systems running to manage EC2 spot instances, navigate spot markets, as well as mechanisms to monitor and observe all aspects of this framework. AWS ParallelCluster is the glue holding everything together, and deserves special thanks.

The system is designed to be robust, secure, auditable, and should only take a matter of days to stand up. Please contact me for further details.

The DAG For 1 Sample Running Through The BWA-MEM2ert+Doppelmark+Deepvariant+Manta+TIDDIT+Dysgu+Svaba+QCforDays Pipeline

NOTE: each node in the DAG below is run as a self-contained job. Each job/node/rule is distributed to a suitable EC2 spot (or on-demand, if you prefer) instance to run. Each node is a packaged/containerized unit of work. This DAG represents jobs running across sometimes thousands of instances at a time. Slurm and Snakemake manage all of the scaling, teardown, scheduling, recovery and general orchestration. The cherry on top: killer observability, per-project resource cost reporting, and budget controls!


The above is actually a compressed view of the jobs managed for a sample moving through this pipeline. This view is of the DAG that properly reflects parallelized jobs.

example from the daylily-omics-analysis repo

The batch comprises NovaSeq 30x HG002 fastqs and, again, the same data downsampled to 25x, 20x, 15x, 10x, and 5x.
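
For a sense of scale, the arithmetic behind those coverage tiers can be sketched as below. This assumes coverage scales linearly with the number of reads kept. The function name `downsample_fractions` is hypothetical, and the tool actually used to produce the downsampled fastqs is not stated here.

```python
def downsample_fractions(source_coverage, targets):
    """Map each target coverage to the fraction of reads to keep.

    Assumes coverage is proportional to read count, so keeping
    target/source of the reads yields roughly the target coverage.
    """
    if source_coverage <= 0:
        raise ValueError("source_coverage must be positive")
    fractions = {}
    for target in targets:
        if not 0 < target <= source_coverage:
            raise ValueError(f"target {target}x must be in (0, {source_coverage}]")
        fractions[target] = round(target / source_coverage, 4)
    return fractions


print(downsample_fractions(30, [25, 20, 15, 10, 5]))
```

Under that assumption, going from 30x to 15x means keeping about half of the read pairs, and 5x means keeping about one in six.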

Example report.

- A visualization of just the directories (minus log dirs) created by daylily. b37 is shown; hg38 is supported as well.

- [with files](docs/ops/tree_full.md)

Reports are faceted by: SNPts, SNPtv, INS>0-<51, DEL>0-<51, Indel>0-<51. They are generated when the correct info is set in the config `samples.tsv`/`units.tsv` files.
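
To make the facets concrete, here is a minimal sketch of how a single REF/ALT pair could be assigned to one of them. It is not the concordance code daylily runs. The name `classify_variant` is hypothetical, alleles are assumed to be plain A/C/G/T strings, and `>0-<51` is read as an indel length of 1 to 50 bp.

```python
# Transitions swap purine<->purine (A<->G) or pyrimidine<->pyrimidine (C<->T).
TRANSITIONS = {("A", "G"), ("G", "A"), ("C", "T"), ("T", "C")}


def classify_variant(ref, alt, max_indel=50):
    """Return 'SNPts', 'SNPtv', 'INS', 'DEL', or 'other' for one REF/ALT pair."""
    ref, alt = ref.upper(), alt.upper()
    if len(ref) == 1 and len(alt) == 1:
        if ref == alt:
            return "other"
        # Any single-base change that is not a transition is a transversion.
        return "SNPts" if (ref, alt) in TRANSITIONS else "SNPtv"
    size = abs(len(alt) - len(ref))
    if 0 < size <= max_indel:
        return "INS" if len(alt) > len(ref) else "DEL"
    return "other"  # larger events and same-length multi-base changes
```

The combined Indel facet would then be the union of the `INS` and `DEL` classes.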

Picture and list of tools

Daylily uses Semantic Versioning. For the versions available, see the tags on this repository.

If the S3 bucket mounted to the FSx filesystem is too large (the default bucket is close to too large), FSx can fail to create in time for pcluster, and pcluster fails with a timeout. The wait time for pcluster is configured to be much longer than the default, but this can still be a difficult-to-identify reason for cluster creation failure. The probability of failure increases with S3 bucket size, and also if the imported directories are being changed during pcluster creation. Try again, try with a longer timeout, and try with a smaller bucket (i.e., remove one of the human reference build datasets, or move it to a different location in the bucket that is not imported by FSx).

The command `bin/init_cloudstackformation.sh ./config/day_cluster/pcluster_env.yml "$res_prefix" "$region_az" "$region" $AWS_PROFILE` does not yet gracefully handle being run more than once per region. The YAML can be edited to create correctly scoped resources for running in more than one AZ in a region (this all works fine when running in one AZ in each of several regions), or you can manually create the public/private subnets, etc. for running in multiple AZs in a region. The fix is not difficult, but is not yet automated.

All tools involved in daylily-ephemeral-cluster can be managed in such a way as to satisfy various clinical compliance requirements. This is largely in your hands. AWS ParallelCluster is as secure or insecure as you set it up to be: https://docs.aws.amazon.com/parallelcluster/v2/ug/security-compliance-validation.html. The main point here is that, in my experience, it can be managed in compliance-heavy settings, no problem.

Assuming you have created a cluster with `bin/daylily-create-ephemeral-cluster --profile $AWS_PROFILE --region-az <region-az>`, it will leave 3 artifact files in `~/.config/daylily/`:

- `<cluster_name>_cli_cfg_<datetime>.yaml` # used by daylily-create-ephemeral-cluster script
- `<cluster_name>_template.yaml`
- `<cluster_name>_cluster.yaml` # used by pcluster and PCUI
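
If you want to locate these artifacts programmatically, a sketch along the following lines may help. The helper name `find_cluster_artifacts` is hypothetical and not part of daylily. It assumes the `<datetime>` stamp sorts chronologically as text, which is not confirmed here.

```python
from pathlib import Path


def find_cluster_artifacts(cluster_name, config_dir="~/.config/daylily"):
    """Locate the three artifact files for cluster_name; missing ones map to None."""
    root = Path(config_dir).expanduser()
    # Several datetime-stamped CLI configs may exist. Take the last one in
    # sorted order, which is the newest only if the stamp sorts chronologically.
    cli_cfgs = sorted(root.glob(f"{cluster_name}_cli_cfg_*.yaml"))
    template = root / f"{cluster_name}_template.yaml"
    cluster = root / f"{cluster_name}_cluster.yaml"
    return {
        "cli_cfg": cli_cfgs[-1] if cli_cfgs else None,
        "template": template if template.is_file() else None,
        "cluster": cluster if cluster.is_file() else None,
    }
```

Returning `None` for a missing file makes it easy to see which of the re-creation routes described here is available for a given cluster.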

Using <cluster_name>_cluster.yaml

You should edit the cluster name in the config file to be unique before re-using it.

`bin/daylily-create-ephemeral-cluster --profile $AWS_PROFILE --region-az <region-az> --config ~/.config/daylily/<cluster_name>_cli_cfg_<datetime>.yaml`

!!! This will not create budgets or SNS monitoring....

`pcluster create-cluster -n <cluster_name> --region <region> --cluster-configuration ~/.config/daylily/<cluster_name>_cluster.yaml`

- Navigate to the `PCUI` console and select the region you wish to operate in. Choose 'create new cluster' and choose from a running or stopped cluster.

- Navigate to the `PCUI` console and select the region you wish to operate in. Choose 'create new cluster', choose 'from cluster config file', and upload the `<cluster_name>_cluster.yaml` file.

To create a terminal report of all currently tagged AWS resources with cluster name.

    export AWS_PROFILE=YOURADMINUSERPROFILE
    aws iam create-policy \
      --policy-name DaylilyCostRead \
      --policy-document file://config/aws/generate_cluster_report.json
    export AWS_PROFILE=<daylily-service>

    export AWS_PROFILE=YOURADMINUSERPROFILE
    aws iam attach-user-policy \
      --user-name daylily-service \
      --policy-arn arn:aws:iam::108782052779:policy/DaylilyCostRead
    export AWS_PROFILE=<daylily-service>

- Must be run from your local machine used to create clusters.

- Copies files from local paths or accessible S3 buckets to the headnode, and stages them into `/fsx/data/staged_sample_data/<timestamp>/`. Generates `samples.tsv` and `units.tsv` in the staging directory.
- These files will appear in your S3 bucket as well, under `/data/staged_sample_data/<timestamp>/`.

- ... meaning they will appear in any cluster mounting this reference bucket.

- The directory with these files should be moved to a non-mounted dir in the bucket when not actively being used.

- NOTE This script does not concatenate lane fastqs like the headnode version of this script.

`bin/daylily-stage-samples-from-local-to-headnode --region us-west-2 --profile daylily-service --debug --reference-bucket s3://daylily-dayoa-omics-analysis-us-west-2 etc/analysis_samples_template.tsv # replace <BUCKET> with your bucket name`

- Must be run from the headnode of the cluster.

- Requires that you run `aws configure --profile <aws_profile>` first to set up AWS credentials on the headnode.
- Copies files from local paths or accessible S3 buckets to the headnode, and stages them into `/fsx/staged_sample_data/<timestamp>/`. Generates `samples.tsv` and `units.tsv` in the staging directory.
- Lane fastqs are concatenated into combined fastqs if desired.

- These files are in the /fsx scratch space and will not be saved once the cluster is deleted; be sure to export them back to S3 if you wish to retain them.
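
The lane concatenation mentioned above can be illustrated with a minimal sketch. This is not the staging script's implementation, and the function name `concat_lane_fastqs` is hypothetical. It relies on the fact that gzip files joined back to back still form a valid gzip stream, so per-lane `.fastq.gz` files can be combined without decompressing. It assumes lane order follows sorted file names.

```python
import shutil
from pathlib import Path


def concat_lane_fastqs(lane_files, combined_path):
    """Byte-concatenate lane fastq files, in sorted name order, into combined_path."""
    lanes = sorted(Path(f) for f in lane_files)
    if not lanes:
        raise ValueError("no lane files given")
    with open(combined_path, "wb") as out:
        for lane in lanes:
            with open(lane, "rb") as src:
                shutil.copyfileobj(src, out)  # streams without loading into memory
    return Path(combined_path)
```

R1 and R2 files would need to be combined separately and in the same lane order so that read pairs stay in sync.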

`bin/daylily-stage-samples-from-headnode --region us-west-2 --profile daylily-service --debug --reference-bucket daylily-dayoa-omics-analysis-us-west-2 etc/analysis_samples_template.tsv # replace <BUCKET> with your bucket name`

Costs can be delayed by up to 24hrs from AWS.

Navigate to the Budgets section of the AWS Cost Management console. You will see a budget named for your cluster.

Pulls costs for resources tagged with the cluster name tag key. Good for looking for orphaned resources, or just getting a quick report of costs.

    AWS_PROFILE=daylily-service-lsmc bin/generate-report-of-aws-tagged-resources.py -h

    usage: generate-report-of-aws-tagged-resources.py [-h] [--tag-key TAG_KEY] [--since SINCE] [--until UNTIL]
                                                      [--metric {AmortizedCost,UnblendedCost,NetAmortizedCost,NetUnblendedCost}] [--top-n TOP_N]
                                                      [--budget-name BUDGET_NAME] [--profile PROFILE] [--region REGION]
                                                      [--show-services SHOW_SERVICES] [--exclude-services EXCLUDE_SERVICES] [--only-show-active]
    ...

    AWS_PROFILE=daylily-service-lsmc bin/generate-report-of-aws-tagged-resources.py --only-show-active
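
The internals of the report script are not shown here, but the general idea of totaling cost per tag value can be sketched as follows. This assumes a response in the shape returned by Cost Explorer's `get_cost_and_usage` when grouped by a tag. The function name `summarize_costs_by_tag` and the `<your-tag-key>` placeholder are hypothetical.

```python
from collections import defaultdict

# A response could be fetched with boto3 (not executed in this sketch):
#   boto3.client("ce").get_cost_and_usage(
#       TimePeriod={"Start": "2024-01-01", "End": "2024-02-01"},
#       Granularity="MONTHLY", Metrics=["UnblendedCost"],
#       GroupBy=[{"Type": "TAG", "Key": "<your-tag-key>"}])


def summarize_costs_by_tag(response, metric="UnblendedCost"):
    """Sum cost per tag value across all time periods, largest first."""
    totals = defaultdict(float)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            # With a TAG group-by, each key looks like "<tag-key>$<tag-value>".
            key = group["Keys"][0]
            tag_value = key.split("$", 1)[1] if "$" in key else key
            amount = float(group["Metrics"][metric]["Amount"])
            totals[tag_value or "(untagged)"] += amount
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))
```

Resources without the tag show up under an empty tag value, which the sketch labels `(untagged)`. That bucket is a reasonable first place to look for orphaned spend.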

You can monitor the health of your cluster via SNS notifications. These are created automatically when you create a cluster via the daylily-create-ephemeral-cluster script. You can subscribe to the topic via email, SMS, or other methods. You will receive notifications while pricey tagged resources remain active (most importantly, FSx filesystems and EBS volumes, which can be set not to delete upon cluster termination and which become expensive when left idle).

named in honor of Margaret Oakley Dayhoff