@@ -1,120 +1,62 @@
 # README

-## Table of contents
-
-<p align="center">
- <a href="#project-description">Project description</a> •
- <a href="#who-this-project-is-for">Who this project is for</a> •
- <a href="#project-dependencies">Project dependencies</a> •
- <a href="#instructions-for-use">Instructions for use</a> •
- <a href="#contributing-guidelines">Contributing guidelines</a> •
- <a href="#additional-documentation">Additional documentation</a> •
- <a href="#how-to-get-help">How to get help</a> •
- <a href="#terms-of-use">Terms of use</a>
-</p>
-<hr>
-
 ## Project description

-Pydurma creates a clean e-text version of a Tibetan work from multiple flawed sources.
-
-Benefits include:
-
-- Automatic proofreading of Tibetan e-texts
-- Creating high-quality e-texts from low-quality sources
-- Doesn't require a spell checker (which doesn't exist yet for Tibetan language)
-
-Pydurma uses a weighted majority algorithm that compares versions of the work syllable-by-syllable and chooses the most common character from among the versions in each position of the text. Since mistakes—whether made during the woodblock carving, hand copying, digital text inputting, or OCRing process—are unlikely to be the same in the majority of the versions, they are unlikely to outrank the correct characters in any given position of the text. The result is a new clean version called a "vulgate edition."
-
-### Uncritical editions or vulgates
-
-**vulgate** (noun) /ˈvəl-ˌgāt/ or /ˈvʌlɡeɪt/: *2. a commonly accepted text or reading.*
-
-Medieval Latin *vulgata*, from Late Latin *vulgata editio*: edition in general circulation.
-
-“Vulgate.” Merriam-Webster.com Dictionary, Merriam-Webster, https://www.merriam-webster.com/dictionary/vulgate. Accessed 23 Dec. 2022.
-
-This less common sense of the term *vulgate* represents the objective of this project.
-
-## Who this project is for
-
-This project is intended for:
-
-- Publishers who need clean copies of texts to publish books
-- Developers who need clean data to train AI models
-- Anyone who needs to proofread a Tibetan text and has access to multiple versions, such as in the [BDRC library](https://library.bdrc.io).
-
-## Dependencies
-
-Before you start, ensure you've installed:
+Pydurma is a fast and modular collation engine that:
+- uses a very fast collation mechanism ([diff-match-patch](https://github.com/google/diff-match-patch) instead of [Needleman-Wunsch](https://en.wikipedia.org/wiki/Needleman%E2%80%93Wunsch_algorithm))
+- makes it easy to define language-specific tokenization and normalization, necessary for languages like Tibetan
+- keeps track of the character position in the original files (even XML files where markup is removed in normalization)
+- has a configurable engine to select the best reading, based on reading frequency among versions, OCR confidence index, language-specific knowledge, etc.

-- python >= 3.7
-- [openpecha](https://github.com/OpenPecha/Toolkit)
-- regex
-- fast-diff-match-patch
+It does not:
+- use any non-tabular (graph) representation (à la CollateX)
+- implement readjustments of alignment based on the distance between tokens (à la CollateX)
+- detect [transpositions](http://multiversiondocs.blogspot.com/2008/10/transpositions.html)

-## Requirements
+It does not yet:
+- use subword tokenizers à la [sentencepiece](https://github.com/google/sentencepiece), potentially more robust on dirty (OCR) data than those based on linguistic features (spaces, punctuation, etc.)
+- allow a configurable token distance function based on language-specific knowledge (graphical closeness, phonetic closeness)
+- implement the STAR algorithm to find the best "base" among different editions

-To create a vulgate edition, you'll need:
+We intend Pydurma to be used in large-scale projects to automate:
+- merging different OCR outputs of the same images, selecting the best version of each of them
+- creating an edition that averages all other editions automatically

-- A reference pecha in the [OPF format](https://openpecha.org/data/opf-format/)
-- Several witness pechas in the OPF format (a witness is a version of a text)
+The name Pydurma is a combination of:
+- *Python*
+- *Pedurma* དཔེ་བསྡུར་མ།, Tibetan for critical or diplomatic edition

-> **Note** To convert files into the OPF format, use [OpenPecha Tools](https://github.com/OpenPecha/Toolkit).
->
-> You can also convert scanned texts in the [BDRC library](https://library.bdrc.io) to the OPF format with the [OCR Pipeline](https://tools.openpecha.org/ocr/).
->
-> To test Pydurma, you can also use the OPF files in the [text folder](https://github.com/OpenPecha/fast-collation-tools/tree/main/tests) in this repo.
+### Note on possible workflows based on Pydurma

-## Instructions for use
+While Pydurma can be used in a classical [Lachmann](https://en.wikipedia.org/wiki/Karl_Lachmann)ian critical edition process, its innovative design allows it to automate the process of variant selection.

-### Configure Pydurma
+Using this automated selection directly may raise eyebrows, but we want to defend the concept. The editions Pydurma can produce:
+- are fully automatic and thus uncritical (*uncritical editions*?), but can be produced on a large scale (*industrial editions*?)
+- do not try to reproduce one variant in particular as their base (in that sense they are not *diplomatic editions*, perhaps *undiplomatic editions*?)
+- are intended to be similar to the concept of *vulgate* ("a commonly accepted text or reading" [MW](https://www.merriam-webster.com/dictionary/vulgate))
+- optimize measurable linguistic soundness
+- as a result, are a good base for an AI-ready corpus

-Assuming you've installed the software above and have OPF files:
-
-1. Clone this repo.
-1. Add witnesses in the OPF format into your cloned repo.
-1. Open `vulgatizer_op_ocr.py` in a code editor.
-1. Update the paths to the actual witness folders in this code block:
-
-```
-def test_merger():
-    op_output = OpenPechaFS("ITEST.opf")
-    vulgatizer = VulgatizerOPTibOCR(op_output)
-    vulgatizer.add_op_witness(OpenPechaFS("./test/opfs/I001/I001.opf"))
-    vulgatizer.add_op_witness(OpenPechaFS("./test/opfs/I002/I002.opf"))
-    vulgatizer.add_op_witness(OpenPechaFS("./test/opfs/I003/I003.opf"))
-    vulgatizer.create_vulgate()
-```
-
-### Run Pydurma
-
-- Run `vulgatizer_op_ocr.py`
-
-The vulgate edition OPF will be saved in `./data/opfs/generic_editions`.
-
-### Limitations
-
-- No code to detect [transpositions](http://multiversiondocs.blogspot.com/2008/10/transpositions.html).
| 40 | +A workflow using Pydurma can work on a very large scale with minimal human intervention, which we hope can be a game changer for under-resourced literary traditions like the Tibetan tradition. |

99 | 41 |

|

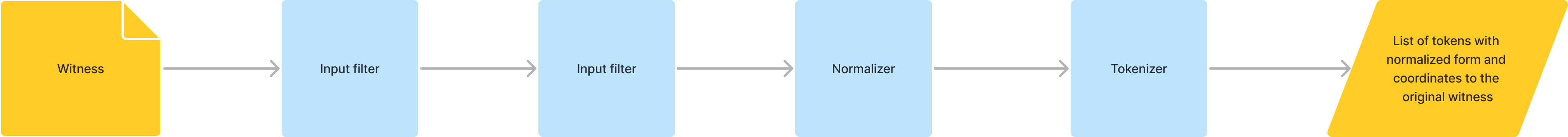

100 | 42 | ## Pydurma workflow |

101 | 43 |

|

102 | | -Pydurma creates common spell editions in three steps: |

+Pydurma operates in three steps:

 - Preprocessing
-- Alignment
-- Vulgatization
+- Collation
+- Variant Selection

 Here is that process:
 ### Preprocessing



-### Alignment
+### Collation



-### Vulgatization
+### Variant Selection



@@ -144,17 +86,19 @@ Here is that process:
 - [Needleman–Wunsch algorithm](https://en.wikipedia.org/wiki/Needleman%E2%80%93Wunsch_algorithm)
 - [Spencer 2004](http://dx.doi.org/10.1007/s10579-004-8682-1): Spencer M. and Howe C. J., 2004. Collating Texts Using Progressive Multiple Alignment. Computers and the Humanities 38, 253–270.

-
-## Contributing guidelines
-
-If you'd like to help out, check out our [contributing guidelines](/CONTRIBUTING.md).
-
 ## Need help?

-- File an [issue](https://github.com/OpenPecha/Pydurma/issues/new).
-- Join our [Discord](https://discord.com/invite/7GFpPFSTeA) and ask us there.
-- Email us at openpecha[at]gmail[dot]com.
+- File an [issue](https://github.com/buda-base/Pydurma/issues/new)
+- Join our [Discord](https://discord.com/invite/7GFpPFSTeA) and ask us there

 ## Terms of use

 Pydurma is licensed under the [Apache license](/LICENSE.md).
+
+## Acknowledgements and citation
+
+Pydurma is a creation of:
+- the [Buddhist Digital Resource Center](https://www.bdrc.io/)
+- [OpenPecha](https://github.com/OpenPecha/)
+
+The intended use of Pydurma at the Buddhist Digital Resource Center was presented at the *Digital Humanities Workshop & Symposium* organized in January 2023 at the University of Hamburg (see [summary of the symposium](https://www.kc-tbts.uni-hamburg.de/events/2023-01-14-dh-symposium-completed.html), slide selection available [here](https://drive.google.com/file/d/11WI8v-2mJVBqf2g5GOGCIIjwu1truISb/view?usp=sharing)).
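The three steps listed under "Pydurma workflow" (preprocessing, collation, variant selection) can be illustrated with a minimal, self-contained Python sketch. It is not Pydurma's code and rests on several assumptions: Python's standard-library `difflib.SequenceMatcher` stands in for diff-match-patch (it is a different diff algorithm), the Tibetan tokenizer simply splits on the tsheg (་) and keeps the shad (།) as its own token, and variant selection is a weighted majority vote in which each witness counts 1.0 unless given another weight, for instance one derived from OCR confidence. The names `tokenize`, `collate`, `vulgate` and `detokenize` are hypothetical.

```python
import re
from collections import defaultdict
from difflib import SequenceMatcher

TSHEG = "\u0f0b"  # ་ intersyllabic mark
SHAD = "\u0f0d"   # ། clause delimiter


def tokenize(text):
    """Preprocessing (simplified, hypothetical): normalize, then split into syllables and shads."""
    text = text.replace("\u0f0c", TSHEG)  # map the non-breaking tsheg ༌ to the regular one
    return re.findall(r"\u0f0d|[^\u0f0b\u0f0d\s]+", text)


def collate(base, witness):
    """Collation: for each base token, the aligned witness token ("" if the witness omits it)."""
    aligned = [""] * len(base)
    matcher = SequenceMatcher(a=base, b=witness, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("equal", "replace"):
            # Pair tokens position by position. In an uneven replacement, surplus
            # witness tokens are dropped because this table is anchored on the base.
            for k, reading in enumerate(witness[j1:j2][: i2 - i1]):
                aligned[i1 + k] = reading
        # "delete": the witness omits base tokens, which stay "".
        # "insert": the witness adds tokens the base lacks; ignored in this sketch.
    return aligned


def detokenize(tokens):
    """Join syllables with tshegs, dropping the tsheg before a shad."""
    out = ""
    for tok in tokens:
        if tok == SHAD:
            out = out.rstrip(TSHEG) + SHAD
        else:
            out += tok + TSHEG
    return out


def vulgate(witnesses, base_id, weights=None):
    """Variant selection: weighted majority vote over each column of the collation table."""
    weights = weights or {}
    tokens = {wid: tokenize(text) for wid, text in witnesses.items()}
    base = tokens[base_id]
    columns = [{base_id: tok} for tok in base]  # one column per base token
    for wid, toks in tokens.items():
        if wid != base_id:
            for column, reading in zip(columns, collate(base, toks)):
                column[wid] = reading
    chosen = []
    for column in columns:
        scores = defaultdict(float)
        for wid, reading in column.items():
            scores[reading] += weights.get(wid, 1.0)
        # max() keeps the earliest-inserted reading on ties, so the base wins any tie it is in.
        best = max(scores, key=scores.get)
        if best:  # "" means the weighted majority omits this token
            chosen.append(best)
    return detokenize(chosen)


witnesses = {
    "A": "བཀྲ་ཤིས་བདེ་ལེགས།",
    "B": "བཀྲ་ཤིས་བདེ་ལེགས།",
    "C": "བཀྲ་ཤས་བདེ་ལེགས།",  # second syllable lost its vowel sign (an OCR-style error)
}
print(vulgate(witnesses, base_id="C"))  # A and B outvote the base C
print(vulgate(witnesses, base_id="C", weights={"C": 3.0}))  # C now outweighs A and B together
```

Anchoring the table on one base witness keeps it tabular, in line with the README's statement that Pydurma does not use a graph representation; the cost in this sketch is that tokens a witness adds beyond the base are simply dropped.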

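The README states that Pydurma keeps track of character positions in the original files, even for XML whose markup is removed during normalization. One simple way to do this, sketched below under our own assumptions (a regex that treats anything between `<` and `>` as markup, no entity decoding, and the hypothetical helpers `strip_markup` and `to_original_span`), is to record, for every character kept in the normalized text, its offset in the source file. This is an illustration of the idea, not Pydurma's implementation.

```python
import re

# Assumption: anything between "<" and ">" is markup. Comments containing ">",
# CDATA sections and entities such as &amp; are not handled; a real
# implementation would rely on an XML parser.
TAG = re.compile(r"<[^>]*>")


def strip_markup(xml):
    """Return (text, offsets) where text[i] == xml[offsets[i]] for every i."""
    offsets = []  # positions in xml of the characters that lie outside tags
    pos = 0
    for tag in TAG.finditer(xml):
        offsets.extend(range(pos, tag.start()))
        pos = tag.end()
    offsets.extend(range(pos, len(xml)))
    return "".join(xml[i] for i in offsets), offsets


def to_original_span(offsets, start, end):
    """Map a non-empty half-open span [start, end) of the stripped text back to xml."""
    return offsets[start], offsets[end - 1] + 1


xml = "<p>ab<lb/>cd</p>"
text, offsets = strip_markup(xml)
start, end = to_original_span(offsets, 1, 3)  # "bc" in the stripped text
print(text, xml[start:end])  # abcd b<lb/>c
```

Because the mapping is stored per character, a token chosen after normalization can be traced back to its exact span in the source file, including any markup that falls inside it.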