diff --git a/App.go b/App.go

index 4a8a8ca11d..531d1ef46a 100644

--- a/App.go

+++ b/App.go

@@ -35,6 +35,7 @@ import (

"github.com/devtron-labs/devtron/pkg/auth/user"

"github.com/casbin/casbin"

+ casbinv2 "github.com/casbin/casbin/v2"

authMiddleware "github.com/devtron-labs/authenticator/middleware"

"github.com/devtron-labs/devtron/api/router"

"github.com/devtron-labs/devtron/api/sse"

@@ -50,6 +51,7 @@ type App struct {

Logger *zap.SugaredLogger

SSE *sse.SSE

Enforcer *casbin.SyncedEnforcer

+ EnforcerV2 *casbinv2.SyncedEnforcer

server *http.Server

db *pg.DB

posthogClient *telemetry.PosthogClient

@@ -74,6 +76,7 @@ func NewApp(router *router.MuxRouter,

centralEventProcessor *eventProcessor.CentralEventProcessor,

pubSubClient *pubsub.PubSubClientServiceImpl,

workflowEventProcessorImpl *in.WorkflowEventProcessorImpl,

+ enforcerV2 *casbinv2.SyncedEnforcer,

) *App {

//check argo connection

//todo - check argo-cd version on acd integration installation

@@ -82,6 +85,7 @@ func NewApp(router *router.MuxRouter,

Logger: Logger,

SSE: sse,

Enforcer: enforcer,

+ EnforcerV2: enforcerV2,

db: db,

serveTls: false,

sessionManager2: sessionManager2,

diff --git a/api/auth/user/wire_user.go b/api/auth/user/wire_user.go

index 77e414b683..6c421698d5 100644

--- a/api/auth/user/wire_user.go

+++ b/api/auth/user/wire_user.go

@@ -56,7 +56,7 @@ var UserWireSet = wire.NewSet(

casbin.NewEnforcerImpl,

wire.Bind(new(casbin.Enforcer), new(*casbin.EnforcerImpl)),

- casbin.Create,

+ casbin.Create, casbin.CreateV2,

user2.NewUserCommonServiceImpl,

wire.Bind(new(user2.UserCommonService), new(*user2.UserCommonServiceImpl)),

diff --git a/cmd/external-app/wire_gen.go b/cmd/external-app/wire_gen.go

index 3e303d78ad..0994cbd0fe 100644

--- a/cmd/external-app/wire_gen.go

+++ b/cmd/external-app/wire_gen.go

@@ -145,7 +145,11 @@ func InitializeApp() (*App, error) {

if err != nil {

return nil, err

}

- enforcerImpl, err := casbin.NewEnforcerImpl(syncedEnforcer, sessionManager, sugaredLogger)

+ casbinSyncedEnforcer, err := casbin.CreateV2()

+ if err != nil {

+ return nil, err

+ }

+ enforcerImpl, err := casbin.NewEnforcerImpl(syncedEnforcer, casbinSyncedEnforcer, sessionManager, sugaredLogger)

if err != nil {

return nil, err

}

diff --git a/env_gen.md b/env_gen.md

index fc828f3832..1ed120c5f6 100644

--- a/env_gen.md

+++ b/env_gen.md

@@ -246,6 +246,7 @@

| USE_BLOB_STORAGE_CONFIG_IN_CI_WORKFLOW | true | |

| USE_BUILDX | false | |

| USE_CUSTOM_HTTP_TRANSPORT | false | |

+ | USE_CASBIN_V2 | false | |

| USE_EXTERNAL_NODE | false | |

| USE_GIT_CLI | false | |

| USE_IMAGE_TAG_FROM_GIT_PROVIDER_FOR_TAG_BASED_BUILD | false | |

diff --git a/go.mod b/go.mod

index 78d3ba79bb..91835940e1 100644

--- a/go.mod

+++ b/go.mod

@@ -15,6 +15,7 @@ require (

github.com/caarlos0/env v3.5.0+incompatible

github.com/caarlos0/env/v6 v6.7.2

github.com/casbin/casbin v1.9.1

+ github.com/casbin/casbin/v2 v2.97.0

github.com/casbin/xorm-adapter v1.0.1-0.20190716004226-a317737a1007

github.com/coreos/go-oidc v2.2.1+incompatible

github.com/davecgh/go-spew v1.1.1

@@ -128,6 +129,8 @@ require (

github.com/bmatcuk/doublestar/v4 v4.6.0 // indirect

github.com/bombsimon/logrusr/v2 v2.0.1 // indirect

github.com/bradleyfalzon/ghinstallation/v2 v2.5.0 // indirect

+ github.com/casbin/govaluate v1.1.0 // indirect

+ github.com/casbin/xorm-adapter/v2 v2.5.1 // indirect

github.com/cenkalti/backoff/v4 v4.2.1 // indirect

github.com/cespare/xxhash/v2 v2.2.0 // indirect

github.com/chai2010/gettext-go v1.0.2 // indirect

@@ -158,6 +161,7 @@ require (

github.com/go-xorm/xorm v0.7.9 // indirect

github.com/gobwas/glob v0.2.3 // indirect

github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect

+ github.com/golang/snappy v0.0.4 // indirect

github.com/google/btree v1.1.2 // indirect

github.com/google/gnostic v0.6.9 // indirect

github.com/google/go-github/v53 v53.0.0 // indirect

@@ -234,6 +238,7 @@ require (

github.com/spf13/pflag v1.0.5 // indirect

github.com/stoewer/go-strcase v1.2.0 // indirect

github.com/stretchr/objx v0.5.0 // indirect

+ github.com/syndtr/goleveldb v1.0.0 // indirect

github.com/tidwall/match v1.1.1 // indirect

github.com/tidwall/pretty v1.2.0 // indirect

github.com/valyala/bytebufferpool v1.0.0 // indirect

@@ -291,8 +296,9 @@ require (

sigs.k8s.io/kustomize/kyaml v0.14.3-0.20230601165947-6ce0bf390ce3 // indirect

sigs.k8s.io/structured-merge-diff/v4 v4.4.1 // indirect

upper.io/db.v3 v3.8.0+incompatible // indirect

- xorm.io/builder v0.3.6 // indirect

+ xorm.io/builder v0.3.7 // indirect

xorm.io/core v0.7.2 // indirect

+ xorm.io/xorm v1.0.3 // indirect

)

replace (

diff --git a/go.sum b/go.sum

index f7f393930c..d6f609c200 100644

--- a/go.sum

+++ b/go.sum

@@ -14,6 +14,7 @@ cloud.google.com/go/storage v1.30.1 h1:uOdMxAs8HExqBlnLtnQyP0YkvbiDpdGShGKtx6U/o

cloud.google.com/go/storage v1.30.1/go.mod h1:NfxhC0UJE1aXSx7CIIbCf7y9HKT7BiccwkR7+P7gN8E=

dario.cat/mergo v1.0.0 h1:AGCNq9Evsj31mOgNPcLyXc+4PNABt905YmuqPYYpBWk=

dario.cat/mergo v1.0.0/go.mod h1:uNxQE+84aUszobStD9th8a29P2fMDhsBdgRYvZOxGmk=

+gitea.com/xorm/sqlfiddle v0.0.0-20180821085327-62ce714f951a/go.mod h1:EXuID2Zs0pAQhH8yz+DNjUbjppKQzKFAn28TMYPB6IU=

github.com/Azure/azure-pipeline-go v0.2.3 h1:7U9HBg1JFK3jHl5qmo4CTZKFTVgMwdFHMVtCdfBE21U=

github.com/Azure/azure-pipeline-go v0.2.3/go.mod h1:x841ezTBIMG6O3lAcl8ATHnsOPVl2bqk7S3ta6S6u4k=

github.com/Azure/azure-storage-blob-go v0.12.0 h1:7bFXA1QB+lOK2/ASWHhp6/vnxjaeeZq6t8w1Jyp0Iaw=

@@ -63,6 +64,7 @@ github.com/Pallinder/go-randomdata v1.2.0/go.mod h1:yHmJgulpD2Nfrm0cR9tI/+oAgRqC

github.com/ProtonMail/go-crypto v0.0.0-20230217124315-7d5c6f04bbb8/go.mod h1:I0gYDMZ6Z5GRU7l58bNFSkPTFN6Yl12dsUlAZ8xy98g=

github.com/ProtonMail/go-crypto v0.0.0-20230828082145-3c4c8a2d2371 h1:kkhsdkhsCvIsutKu5zLMgWtgh9YxGCNAw8Ad8hjwfYg=

github.com/ProtonMail/go-crypto v0.0.0-20230828082145-3c4c8a2d2371/go.mod h1:EjAoLdwvbIOoOQr3ihjnSoLZRtE8azugULFRteWMNc0=

+github.com/PuerkitoBio/goquery v1.5.1/go.mod h1:GsLWisAFVj4WgDibEWF4pvYnkVQBpKBKeU+7zCJoLcc=

github.com/PuerkitoBio/purell v1.1.1/go.mod h1:c11w/QuzBsJSee3cPx9rAFu61PvFxuPbtSwDGJws/X0=

github.com/PuerkitoBio/urlesc v0.0.0-20170810143723-de5bf2ad4578/go.mod h1:uGdkoq3SwY9Y+13GIhn11/XLaGBb4BfwItxLd5jeuXE=

github.com/Shopify/sarama v1.19.0/go.mod h1:FVkBWblsNy7DGZRfXLU0O9RCGt5g3g3yEuWXgklEdEo=

@@ -75,6 +77,7 @@ github.com/alicebob/gopher-json v0.0.0-20200520072559-a9ecdc9d1d3a h1:HbKu58rmZp

github.com/alicebob/gopher-json v0.0.0-20200520072559-a9ecdc9d1d3a/go.mod h1:SGnFV6hVsYE877CKEZ6tDNTjaSXYUk6QqoIK6PrAtcc=

github.com/alicebob/miniredis/v2 v2.30.3 h1:hrqDB4cHFSHQf4gO3xu6YKQg8PqJpNjLYsQAFYHstqw=

github.com/alicebob/miniredis/v2 v2.30.3/go.mod h1:b25qWj4fCEsBeAAR2mlb0ufImGC6uH3VlUfb/HS5zKg=

+github.com/andybalholm/cascadia v1.1.0/go.mod h1:GsXiBklL0woXo1j/WYWtSYYC4ouU9PqHO0sqidkEA4Y=

github.com/anmitsu/go-shlex v0.0.0-20200514113438-38f4b401e2be h1:9AeTilPcZAjCFIImctFaOjnTIavg87rW78vTPkQqLI8=

github.com/anmitsu/go-shlex v0.0.0-20200514113438-38f4b401e2be/go.mod h1:ySMOLuWl6zY27l47sB3qLNK6tF2fkHG55UZxx8oIVo4=

github.com/antihax/optional v1.0.0/go.mod h1:uupD/76wgC+ih3iEmQUL+0Ugr19nfwCT1kdvxnR2qWY=

@@ -135,8 +138,15 @@ github.com/caarlos0/env/v6 v6.7.2 h1:Jiy2dBHvNgCfNGMP0hOZW6jHUbiENvP+VWDtLz4n1Kg

github.com/caarlos0/env/v6 v6.7.2/go.mod h1:FE0jGiAnQqtv2TenJ4KTa8+/T2Ss8kdS5s1VEjasoN0=

github.com/casbin/casbin v1.9.1 h1:ucjbS5zTrmSLtH4XogqOG920Poe6QatdXtz1FEbApeM=

github.com/casbin/casbin v1.9.1/go.mod h1:z8uPsfBJGUsnkagrt3G8QvjgTKFMBJ32UP8HpZllfog=

+github.com/casbin/casbin/v2 v2.28.3/go.mod h1:vByNa/Fchek0KZUgG5wEsl7iFsiviAYKRtgrQfcJqHg=

+github.com/casbin/casbin/v2 v2.97.0 h1:FFHIzY+6fLIcoAB/DKcG5xvscUo9XqRpBniRYhlPWkg=

+github.com/casbin/casbin/v2 v2.97.0/go.mod h1:jX8uoN4veP85O/n2674r2qtfSXI6myvxW85f6TH50fw=

+github.com/casbin/govaluate v1.1.0 h1:6xdCWIpE9CwHdZhlVQW+froUrCsjb6/ZYNcXODfLT+E=

+github.com/casbin/govaluate v1.1.0/go.mod h1:G/UnbIjZk/0uMNaLwZZmFQrR72tYRZWQkO70si/iR7A=

github.com/casbin/xorm-adapter v1.0.1-0.20190716004226-a317737a1007 h1:KEBrEhQjSCzUt5bQKxX8ZbS3S46sRnzOmwemTOu+LLQ=

github.com/casbin/xorm-adapter v1.0.1-0.20190716004226-a317737a1007/go.mod h1:6sy40UQdWR0blO1DJdEzbcu6rcEW89odCMcEdoB1qdM=

+github.com/casbin/xorm-adapter/v2 v2.5.1 h1:BkpIxRHKa0s3bSMx173PpuU7oTs+Zw7XmD0BIta0HGM=

+github.com/casbin/xorm-adapter/v2 v2.5.1/go.mod h1:AeH4dBKHC9/zYxzdPVHhPDzF8LYLqjDdb767CWJoV54=

github.com/cenkalti/backoff/v4 v4.2.1 h1:y4OZtCnogmCPw98Zjyt5a6+QwPLGkiQsYW5oUqylYbM=

github.com/cenkalti/backoff/v4 v4.2.1/go.mod h1:Y3VNntkOUPxTVeUxJ/G5vcM//AlwfmyYozVcomhLiZE=

github.com/census-instrumentation/opencensus-proto v0.2.1/go.mod h1:f6KPmirojxKA12rnyqOA5BBL4O983OfeGPqjHWSTneU=

@@ -183,6 +193,7 @@ github.com/deckarep/golang-set v1.8.0 h1:sk9/l/KqpunDwP7pSjUg0keiOOLEnOBHzykLrsP

github.com/deckarep/golang-set v1.8.0/go.mod h1:5nI87KwE7wgsBU1F4GKAw2Qod7p5kyS383rP6+o6qqo=

github.com/denisenkom/go-mssqldb v0.0.0-20190707035753-2be1aa521ff4 h1:YcpmyvADGYw5LqMnHqSkyIELsHCGF6PkrmM31V8rF7o=

github.com/denisenkom/go-mssqldb v0.0.0-20190707035753-2be1aa521ff4/go.mod h1:zAg7JM8CkOJ43xKXIj7eRO9kmWm/TW578qo+oDO6tuM=

+github.com/denisenkom/go-mssqldb v0.0.0-20200428022330-06a60b6afbbc/go.mod h1:xbL0rPBG9cCiLr28tMa8zpbdarY27NDyej4t/EjAShU=

github.com/devtron-labs/authenticator v0.4.35-0.20240607135426-c86e868ecee1 h1:qdkpTAo2Kr0ZicZIVXfNwsGSshpc9OB9j9RzmKYdIwY=

github.com/devtron-labs/authenticator v0.4.35-0.20240607135426-c86e868ecee1/go.mod h1:IkKPPEfgLCMR29he5yv2OCC6iM2R7K5/0AA3k8b9XNc=

github.com/devtron-labs/common-lib v0.0.21-0.20240628105542-603b4f777e00 h1:xSZulEz0PaTA7tL4Es/uNFUmgjD6oAv8gxJV49GPWHk=

@@ -299,6 +310,7 @@ github.com/go-redis/cache/v9 v9.0.0/go.mod h1:cMwi1N8ASBOufbIvk7cdXe2PbPjK/WMRL9

github.com/go-resty/resty/v2 v2.7.0 h1:me+K9p3uhSmXtrBZ4k9jcEAfJmuC8IivWHwaLZwPrFY=

github.com/go-resty/resty/v2 v2.7.0/go.mod h1:9PWDzw47qPphMRFfhsyk0NnSgvluHcljSMVIq3w7q0I=

github.com/go-sql-driver/mysql v1.4.1/go.mod h1:zAC/RDZ24gD3HViQzih4MyKcchzm+sOG5ZlKdlhCg5w=

+github.com/go-sql-driver/mysql v1.5.0/go.mod h1:DCzpHaOWr8IXmIStZouvnhqoel9Qv2LBy8hT2VhHyBg=

github.com/go-sql-driver/mysql v1.6.0 h1:BCTh4TKNUYmOmMUcQ3IipzF5prigylS7XXjEkfCHuOE=

github.com/go-sql-driver/mysql v1.6.0/go.mod h1:DCzpHaOWr8IXmIStZouvnhqoel9Qv2LBy8hT2VhHyBg=

github.com/go-stack/stack v1.8.0/go.mod h1:v0f6uXyyMGvRgIKkXu+yp6POWl0qKG85gN/melR3HDY=

@@ -319,6 +331,7 @@ github.com/gogo/protobuf v1.3.2/go.mod h1:P1XiOD3dCwIKUDQYPy72D8LYyHL2YPYrpS2s69

github.com/golang-jwt/jwt/v4 v4.0.0/go.mod h1:/xlHOz8bRuivTWchD4jCa+NbatV+wEUSzwAxVc6locg=

github.com/golang-jwt/jwt/v4 v4.5.0 h1:7cYmW1XlMY7h7ii7UhUyChSgS5wUJEnm9uZVTGqOWzg=

github.com/golang-jwt/jwt/v4 v4.5.0/go.mod h1:m21LjoU+eqJr34lmDMbreY2eSTRJ1cv77w39/MY0Ch0=

+github.com/golang-sql/civil v0.0.0-20190719163853-cb61b32ac6fe/go.mod h1:8vg3r2VgvsThLBIFL93Qb5yWzgyZWhEmBwUJWevAkK0=

github.com/golang/glog v0.0.0-20160126235308-23def4e6c14b/go.mod h1:SBH7ygxi8pfUlaOkMMuAQtPIUF8ecWP5IEl/CR7VP2Q=

github.com/golang/glog v1.1.2 h1:DVjP2PbBOzHyzA+dn3WhHIq4NdVu3Q+pvivFICf/7fo=

github.com/golang/glog v1.1.2/go.mod h1:zR+okUeTbrL6EL3xHUDxZuEtGv04p5shwip1+mL/rLQ=

@@ -328,6 +341,7 @@ github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da h1:oI5xCqsCo564l

github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da/go.mod h1:cIg4eruTrX1D+g88fzRXU5OdNfaM+9IcxsU14FzY7Hc=

github.com/golang/mock v1.1.1/go.mod h1:oTYuIxOrZwtPieC+H1uAHpcLFnEyAGVDL/k47Jfbm0A=

github.com/golang/mock v1.2.0/go.mod h1:oTYuIxOrZwtPieC+H1uAHpcLFnEyAGVDL/k47Jfbm0A=

+github.com/golang/mock v1.4.4/go.mod h1:l3mdAwkq5BuhzHwde/uurv3sEJeZMXNpwsxVWU71h+4=

github.com/golang/mock v1.6.0 h1:ErTB+efbowRARo13NNdxyJji2egdxLGQhRaY+DUumQc=

github.com/golang/mock v1.6.0/go.mod h1:p6yTPP+5HYm5mzsMV8JkE6ZKdX+/wYM6Hr+LicevLPs=

github.com/golang/protobuf v1.1.0/go.mod h1:6lQm79b+lXiMfvg/cZm0SGofjICqVBUtrP5yJMmIC1U=

@@ -349,6 +363,8 @@ github.com/golang/protobuf v1.5.3/go.mod h1:XVQd3VNwM+JqD3oG2Ue2ip4fOMUkwXdXDdiu

github.com/golang/protobuf v1.5.4 h1:i7eJL8qZTpSEXOPTxNKhASYpMn+8e5Q6AdndVa1dWek=

github.com/golang/protobuf v1.5.4/go.mod h1:lnTiLA8Wa4RWRcIUkrtSVa5nRhsEGBg48fD6rSs7xps=

github.com/golang/snappy v0.0.0-20180518054509-2e65f85255db/go.mod h1:/XxbfmMg8lxefKM7IXC3fBNl/7bRcc72aCRzEWrmP2Q=

+github.com/golang/snappy v0.0.4 h1:yAGX7huGHXlcLOEtBnF4w7FQwA26wojNCwOYAEhLjQM=

+github.com/golang/snappy v0.0.4/go.mod h1:/XxbfmMg8lxefKM7IXC3fBNl/7bRcc72aCRzEWrmP2Q=

github.com/google/btree v0.0.0-20180813153112-4030bb1f1f0c/go.mod h1:lNA+9X1NB3Zf8V7Ke586lFgjr2dZNuvo3lPJSGZ5JPQ=

github.com/google/btree v1.0.1/go.mod h1:xXMiIv4Fb/0kKde4SpL7qlzvu5cMJDRkFDxJfI9uaxA=

github.com/google/btree v1.1.2 h1:xf4v41cLI2Z6FxbKm+8Bu+m8ifhj15JuZ9sa0jZCMUU=

@@ -553,6 +569,8 @@ github.com/kylelemons/godebug v1.1.0/go.mod h1:9/0rRGxNHcop5bhtWyNeEfOS8JIWk580+

github.com/leodido/go-urn v1.2.0 h1:hpXL4XnriNwQ/ABnpepYM/1vCLWNDfUNts8dX3xTG6Y=

github.com/leodido/go-urn v1.2.0/go.mod h1:+8+nEpDfqqsY+g338gtMEUOtuK+4dEMhiQEgxpxOKII=

github.com/lib/pq v1.0.0/go.mod h1:5WUZQaWbwv1U+lTReE5YruASi9Al49XbQIvNi/34Woo=

+github.com/lib/pq v1.7.0/go.mod h1:AlVN5x4E4T544tWzH6hKfbfQvm3HdbOxrmggDNAPY9o=

+github.com/lib/pq v1.8.0/go.mod h1:AlVN5x4E4T544tWzH6hKfbfQvm3HdbOxrmggDNAPY9o=

github.com/lib/pq v1.10.9 h1:YXG7RB+JIjhP29X+OtkiDnYaXQwpS4JEWq7dtCCRUEw=

github.com/lib/pq v1.10.9/go.mod h1:AlVN5x4E4T544tWzH6hKfbfQvm3HdbOxrmggDNAPY9o=

github.com/liggitt/tabwriter v0.0.0-20181228230101-89fcab3d43de h1:9TO3cAIGXtEhnIaL+V+BEER86oLrvS+kWobKpbJuye0=

@@ -577,6 +595,7 @@ github.com/mattn/go-isatty v0.0.0-20160806122752-66b8e73f3f5c/go.mod h1:M+lRXTBq

github.com/mattn/go-isatty v0.0.3/go.mod h1:M+lRXTBqGeGNdLjl/ufCoiOlB5xdOkqRJdNxMWT7Zi4=

github.com/mattn/go-sqlite3 v1.10.0 h1:jbhqpg7tQe4SupckyijYiy0mJJ/pRyHvXf7JdWK860o=

github.com/mattn/go-sqlite3 v1.10.0/go.mod h1:FPy6KqzDD04eiIsT53CuJW3U88zkxoIYsOqkbpncsNc=

+github.com/mattn/go-sqlite3 v1.14.0/go.mod h1:JIl7NbARA7phWnGvh0LKTyg7S9BA+6gx71ShQilpsus=

github.com/matttproud/golang_protobuf_extensions v1.0.1/go.mod h1:D8He9yQNgCq6Z5Ld7szi9bcBfOoFv/3dc6xSMkL2PC0=

github.com/matttproud/golang_protobuf_extensions v1.0.4 h1:mmDVorXM7PCGKw94cs5zkfA9PSy5pEvNWRP0ET0TIVo=

github.com/matttproud/golang_protobuf_extensions v1.0.4/go.mod h1:BSXmuO+STAnVfrANrmjBb36TMTDstsz7MSK+HVaYKv4=

@@ -774,6 +793,8 @@ github.com/stretchr/testify v1.8.1/go.mod h1:w2LPCIKwWwSfY2zedu0+kehJoqGctiVI29o

github.com/stretchr/testify v1.8.2/go.mod h1:w2LPCIKwWwSfY2zedu0+kehJoqGctiVI29o6fzry7u4=

github.com/stretchr/testify v1.8.4 h1:CcVxjf3Q8PM0mHUKJCdn+eZZtm5yQwehR5yeSVQQcUk=

github.com/stretchr/testify v1.8.4/go.mod h1:sz/lmYIOXD/1dqDmKjjqLyZ2RngseejIcXlSw2iwfAo=

+github.com/syndtr/goleveldb v1.0.0 h1:fBdIW9lB4Iz0n9khmH8w27SJ3QEJ7+IgjPEwGSZiFdE=

+github.com/syndtr/goleveldb v1.0.0/go.mod h1:ZVVdQEZoIme9iO1Ch2Jdy24qqXrMMOU6lpPAyBWyWuQ=

github.com/tidwall/gjson v1.12.1/go.mod h1:/wbyibRr2FHMks5tjHJ5F8dMZh3AcwJEMf5vlfC0lxk=

github.com/tidwall/gjson v1.14.3 h1:9jvXn7olKEHU1S9vwoMGliaT8jq1vJ7IH/n9zD9Dnlw=

github.com/tidwall/gjson v1.14.3/go.mod h1:/wbyibRr2FHMks5tjHJ5F8dMZh3AcwJEMf5vlfC0lxk=

@@ -910,6 +931,7 @@ golang.org/x/mod v0.12.0/go.mod h1:iBbtSCu2XBx23ZKBPSOrRkjjQPZFPuis4dIYUhu/chs=

golang.org/x/mod v0.14.0/go.mod h1:hTbmBsO62+eylJbnUtE2MGJUyE7QWk4xUqPFrRgJ+7c=

golang.org/x/mod v0.15.0 h1:SernR4v+D55NyBH2QiEQrlBAnj1ECL6AGrA5+dPaMY8=

golang.org/x/mod v0.15.0/go.mod h1:hTbmBsO62+eylJbnUtE2MGJUyE7QWk4xUqPFrRgJ+7c=

+golang.org/x/net v0.0.0-20180218175443-cbe0f9307d01/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

golang.org/x/net v0.0.0-20180406214816-61147c48b25b/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

golang.org/x/net v0.0.0-20180724234803-3673e40ba225/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

golang.org/x/net v0.0.0-20180811021610-c39426892332/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

@@ -926,7 +948,9 @@ golang.org/x/net v0.0.0-20190603091049-60506f45cf65/go.mod h1:HSz+uSET+XFnRR8LxR

golang.org/x/net v0.0.0-20190620200207-3b0461eec859/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

golang.org/x/net v0.0.0-20190827160401-ba9fcec4b297/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

golang.org/x/net v0.0.0-20191112182307-2180aed22343/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

+golang.org/x/net v0.0.0-20200202094626-16171245cfb2/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

golang.org/x/net v0.0.0-20200226121028-0de0cce0169b/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

+golang.org/x/net v0.0.0-20200324143707-d3edc9973b7e/go.mod h1:qpuaurCH72eLCgpAm/N6yyVIVM9cpaDIP3A8BGJEC5A=

golang.org/x/net v0.0.0-20200520004742-59133d7f0dd7/go.mod h1:qpuaurCH72eLCgpAm/N6yyVIVM9cpaDIP3A8BGJEC5A=

golang.org/x/net v0.0.0-20200822124328-c89045814202/go.mod h1:/O7V0waA8r7cgGh81Ro3o1hOxt32SMVPicZroKQ2sZA=

golang.org/x/net v0.0.0-20200904194848-62affa334b73/go.mod h1:/O7V0waA8r7cgGh81Ro3o1hOxt32SMVPicZroKQ2sZA=

@@ -1076,6 +1100,7 @@ golang.org/x/tools v0.0.0-20190114222345-bf090417da8b/go.mod h1:n7NCudcB/nEzxVGm

golang.org/x/tools v0.0.0-20190226205152-f727befe758c/go.mod h1:9Yl7xja0Znq3iFh3HoIrodX9oNMXvdceNzlUR8zjMvY=

golang.org/x/tools v0.0.0-20190311212946-11955173bddd/go.mod h1:LCzVGOaR6xXOjkQ3onu1FJEFr0SW1gC7cKk1uF8kGRs=

golang.org/x/tools v0.0.0-20190312170243-e65039ee4138/go.mod h1:LCzVGOaR6xXOjkQ3onu1FJEFr0SW1gC7cKk1uF8kGRs=

+golang.org/x/tools v0.0.0-20190425150028-36563e24a262/go.mod h1:RgjU9mgBXZiqYHBnxXauZ1Gv1EHHAz9KjViQ78xBX0Q=

golang.org/x/tools v0.0.0-20190524140312-2c0ae7006135/go.mod h1:RgjU9mgBXZiqYHBnxXauZ1Gv1EHHAz9KjViQ78xBX0Q=

golang.org/x/tools v0.0.0-20191108193012-7d206e10da11/go.mod h1:b+2E5dAYhXwXZwtnZ6UAqBI28+e2cm9otk0dWdXHAEo=

golang.org/x/tools v0.0.0-20191119224855-298f0cb1881e/go.mod h1:b+2E5dAYhXwXZwtnZ6UAqBI28+e2cm9otk0dWdXHAEo=

@@ -1280,6 +1305,10 @@ upper.io/db.v3 v3.8.0+incompatible h1:XNeEO2vQRVqq70M98ghzq6M30F5Bzo+99ess5v+eVY

upper.io/db.v3 v3.8.0+incompatible/go.mod h1:FgTdD24eBjJAbPKsQSiHUNgXjOR4Lub3u1UMHSIh82Y=

xorm.io/builder v0.3.6 h1:ha28mQ2M+TFx96Hxo+iq6tQgnkC9IZkM6D8w9sKHHF8=

xorm.io/builder v0.3.6/go.mod h1:LEFAPISnRzG+zxaxj2vPicRwz67BdhFreKg8yv8/TgU=

+xorm.io/builder v0.3.7 h1:2pETdKRK+2QG4mLX4oODHEhn5Z8j1m8sXa7jfu+/SZI=

+xorm.io/builder v0.3.7/go.mod h1:aUW0S9eb9VCaPohFCH3j7czOx1PMW3i1HrSzbLYGBSE=

xorm.io/core v0.7.2-0.20190928055935-90aeac8d08eb/go.mod h1:jJfd0UAEzZ4t87nbQYtVjmqpIODugN6PD2D9E+dJvdM=

xorm.io/core v0.7.2 h1:mEO22A2Z7a3fPaZMk6gKL/jMD80iiyNwRrX5HOv3XLw=

xorm.io/core v0.7.2/go.mod h1:jJfd0UAEzZ4t87nbQYtVjmqpIODugN6PD2D9E+dJvdM=

+xorm.io/xorm v1.0.3 h1:3dALAohvINu2mfEix5a5x5ZmSVGSljinoSGgvGbaZp0=

+xorm.io/xorm v1.0.3/go.mod h1:uF9EtbhODq5kNWxMbnBEj8hRRZnlcNSz2t2N7HW/+A4=

diff --git a/pkg/auth/authorisation/casbin/Adapter.go b/pkg/auth/authorisation/casbin/Adapter.go

index 7b3a76b46d..4a99f1499c 100644

--- a/pkg/auth/authorisation/casbin/Adapter.go

+++ b/pkg/auth/authorisation/casbin/Adapter.go

@@ -19,19 +19,31 @@ package casbin

import (

"fmt"

"log"

+ "os"

"strings"

xormadapter "github.com/casbin/xorm-adapter"

+ xormadapter2 "github.com/casbin/xorm-adapter/v2"

"github.com/casbin/casbin"

+ casbinv2 "github.com/casbin/casbin/v2"

"github.com/devtron-labs/devtron/pkg/sql"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

)

const CasbinDefaultDatabase = "casbin"

+type Version string

+

+const (

+ CasbinV1 Version = "V1"

+ CasbinV2 Version = "V2"

+)

+

var e *casbin.SyncedEnforcer

+var e2 *casbinv2.SyncedEnforcer

var enforcerImplRef *EnforcerImpl

+var casbinVersion Version

type Subject string

type Resource string

@@ -47,7 +59,24 @@ type Policy struct {

Obj Object `json:"obj"`

}

+func isV2() bool {

+ return casbinVersion == CasbinV2

+}

+

+func setCasbinVersion() {

+ version := os.Getenv("USE_CASBIN_V2")

+ if version == "true" {

+ casbinVersion = CasbinV2

+ return

+ }

+ casbinVersion = CasbinV1

+}

+

func Create() (*casbin.SyncedEnforcer, error) {

+ setCasbinVersion()

+ if isV2() {

+ return nil, nil

+ }

metav1.Now()

config, err := sql.GetConfig() //FIXME: use this from wire

if err != nil {

@@ -81,6 +110,47 @@ func Create() (*casbin.SyncedEnforcer, error) {

return e, nil

}

+func CreateV2() (*casbinv2.SyncedEnforcer, error) {

+ setCasbinVersion()

+ if !isV2() {

+ return nil, nil

+ }

+

+ metav1.Now()

+ config, err := sql.GetConfig()

+ if err != nil {

+ log.Println(err)

+ return nil, err

+ }

+ dbSpecified := true

+ if config.CasbinDatabase == CasbinDefaultDatabase {

+ dbSpecified = false

+ }

+ dataSource := fmt.Sprintf("dbname=%s user=%s password=%s host=%s port=%s sslmode=disable", config.CasbinDatabase, config.User, config.Password, config.Addr, config.Port)

+ a, err := xormadapter2.NewAdapter("postgres", dataSource, dbSpecified) // Your driver and data source.

+ if err != nil {

+ log.Println(err)

+ return nil, err

+ }

+ //Adapter

+

+ auth, err1 := casbinv2.NewSyncedEnforcer("./auth_model.conf", a)

+ if err1 != nil {

+ log.Println(err1)

+ return nil, err1

+ }

+ e2 = auth

+ err = e2.LoadPolicy()

+ if err != nil {

+ log.Println(err)

+ return nil, err

+ }

+ log.Println("v2 casbin Policies Loaded Successfully")

+ //adding our key matching func - MatchKeyByPartFunc, to enforcer

+ e2.AddFunction("matchKeyByPart", MatchKeyByPartFunc)

+ return e2, nil

+}

+

func setEnforcerImpl(ref *EnforcerImpl) {

enforcerImplRef = ref

}

@@ -89,6 +159,7 @@ func AddPolicy(policies []Policy) []Policy {

defer handlePanic()

var failed = []Policy{}

emailIdList := map[string]struct{}{}

+ var err error

for _, p := range policies {

success := false

if strings.ToLower(string(p.Type)) == "p" && p.Sub != "" && p.Res != "" && p.Act != "" && p.Obj != "" {

@@ -96,11 +167,26 @@ func AddPolicy(policies []Policy) []Policy {

res := strings.ToLower(string(p.Res))

act := strings.ToLower(string(p.Act))

obj := strings.ToLower(string(p.Obj))

- success = e.AddPolicy([]string{sub, res, act, obj, "allow"})

+ if isV2() {

+ success, err = e2.AddPolicy([]string{sub, res, act, obj, "allow"})

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ success = e.AddPolicy([]string{sub, res, act, obj, "allow"})

+ }

+

} else if strings.ToLower(string(p.Type)) == "g" && p.Sub != "" && p.Obj != "" {

sub := strings.ToLower(string(p.Sub))

obj := strings.ToLower(string(p.Obj))

- success = e.AddGroupingPolicy([]string{sub, obj})

+ if isV2() {

+ success, err = e2.AddGroupingPolicy([]string{sub, obj})

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ success = e.AddGroupingPolicy([]string{sub, obj})

+ }

}

if !success {

failed = append(failed, p)

@@ -122,8 +208,6 @@ func LoadPolicy() {

err := enforcerImplRef.ReloadPolicy()

if err != nil {

fmt.Println("error in reloading policies", err)

- } else {

- fmt.Println("policy reloaded successfully")

}

}

@@ -131,12 +215,27 @@ func RemovePolicy(policies []Policy) []Policy {

defer handlePanic()

var failed = []Policy{}

emailIdList := map[string]struct{}{}

+ var err error

for _, p := range policies {

success := false

if strings.ToLower(string(p.Type)) == "p" && p.Sub != "" && p.Res != "" && p.Act != "" && p.Obj != "" {

- success = e.RemovePolicy([]string{strings.ToLower(string(p.Sub)), strings.ToLower(string(p.Res)), strings.ToLower(string(p.Act)), strings.ToLower(string(p.Obj))})

+ if isV2() {

+ success, err = e2.RemovePolicy([]string{strings.ToLower(string(p.Sub)), strings.ToLower(string(p.Res)), strings.ToLower(string(p.Act)), strings.ToLower(string(p.Obj))})

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ success = e.RemovePolicy([]string{strings.ToLower(string(p.Sub)), strings.ToLower(string(p.Res)), strings.ToLower(string(p.Act)), strings.ToLower(string(p.Obj))})

+ }

} else if strings.ToLower(string(p.Type)) == "g" && p.Sub != "" && p.Obj != "" {

- success = e.RemoveGroupingPolicy([]string{strings.ToLower(string(p.Sub)), strings.ToLower(string(p.Obj))})

+ if isV2() {

+ success, err = e2.RemoveGroupingPolicy([]string{strings.ToLower(string(p.Sub)), strings.ToLower(string(p.Obj))})

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ success = e.RemoveGroupingPolicy([]string{strings.ToLower(string(p.Sub)), strings.ToLower(string(p.Obj))})

+ }

}

if !success {

failed = append(failed, p)

@@ -154,30 +253,61 @@ func RemovePolicy(policies []Policy) []Policy {

}

func GetAllSubjects() []string {

+ if isV2() {

+ subjects, err := e2.GetAllSubjects()

+ if err != nil {

+ log.Println(err)

+ }

+ return subjects

+ }

return e.GetAllSubjects()

}

func DeleteRoleForUser(user string, role string) bool {

user = strings.ToLower(user)

role = strings.ToLower(role)

- response := e.DeleteRoleForUser(user, role)

+ var response bool

+ var err error

+ if isV2() {

+ response, err = e2.DeleteRoleForUser(user, role)

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ response = e.DeleteRoleForUser(user, role)

+ }

enforcerImplRef.InvalidateCache(user)

return response

}

func GetRolesForUser(user string) ([]string, error) {

user = strings.ToLower(user)

+ if isV2() {

+ return e2.GetRolesForUser(user)

+ }

return e.GetRolesForUser(user)

}

func GetUserByRole(role string) ([]string, error) {

role = strings.ToLower(role)

+ if isV2() {

+ return e2.GetUsersForRole(role)

+ }

return e.GetUsersForRole(role)

}

func RemovePoliciesByRoles(roles string) bool {

roles = strings.ToLower(roles)

- policyResponse := e.RemovePolicy([]string{roles})

+ var policyResponse bool

+ var err error

+ if isV2() {

+ policyResponse, err = e2.RemovePolicy([]string{roles})

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ policyResponse = e.RemovePolicy([]string{roles})

+ }

enforcerImplRef.InvalidateCompleteCache()

return policyResponse

}

@@ -191,8 +321,16 @@ func RemovePoliciesByAllRoles(roles []string) bool {

rolesLower = append(rolesLower, strings.ToLower(role))

}

var policyResponse bool

+ var err error

for _, role := range rolesLower {

- policyResponse = e.RemovePolicy([]string{role})

+ if isV2() {

+ policyResponse, err = e2.RemovePolicy([]string{role})

+ if err != nil {

+ log.Println(err)

+ }

+ } else {

+ policyResponse = e.RemovePolicy([]string{role})

+ }

}

enforcerImplRef.InvalidateCompleteCache()

return policyResponse

diff --git a/pkg/auth/authorisation/casbin/rbac.go b/pkg/auth/authorisation/casbin/rbac.go

index 6158df91fd..4dcfc5efc9 100644

--- a/pkg/auth/authorisation/casbin/rbac.go

+++ b/pkg/auth/authorisation/casbin/rbac.go

@@ -19,6 +19,7 @@ package casbin

import (

"encoding/json"

"fmt"

+ casbinv2 "github.com/casbin/casbin/v2"

"log"

"math"

"strings"

@@ -50,6 +51,7 @@ type Enforcer interface {

func NewEnforcerImpl(

enforcer *casbin.SyncedEnforcer,

+ enforcerV2 *casbinv2.SyncedEnforcer,

sessionManager *middleware.SessionManager,

logger *zap.SugaredLogger) (*EnforcerImpl, error) {

lock := make(map[string]*CacheData)

@@ -59,7 +61,7 @@ func NewEnforcerImpl(

return nil, err

}

enf := &EnforcerImpl{lockCacheData: lock, enforcerRWLock: &sync.RWMutex{}, batchRequestLock: batchRequestLock, enforcerConfig: enforcerConfig,

- Cache: getEnforcerCache(logger, enforcerConfig), SyncedEnforcer: enforcer, logger: logger, SessionManager: sessionManager}

+ Cache: getEnforcerCache(logger, enforcerConfig), Enforcer: enforcer, EnforcerV2: enforcerV2, logger: logger, SessionManager: sessionManager}

setEnforcerImpl(enf)

return enf, nil

}

@@ -74,6 +76,7 @@ type EnforcerConfig struct {

CacheEnabled bool `env:"ENFORCER_CACHE" envDefault:"false"`

CacheExpirationInSecs int `env:"ENFORCER_CACHE_EXPIRATION_IN_SEC" envDefault:"86400"`

EnforcerBatchSize int `env:"ENFORCER_MAX_BATCH_SIZE" envDefault:"1"`

+ UseCasbinV2 bool `env:"USE_CASBIN_V2" envDefault:"false"`

}

func getConfig() (*EnforcerConfig, error) {

@@ -106,7 +109,8 @@ type EnforcerImpl struct {

lockCacheData map[string]*CacheData

batchRequestLock map[string]*sync.Mutex

*cache.Cache

- *casbin.SyncedEnforcer

+ Enforcer *casbin.SyncedEnforcer

+ EnforcerV2 *casbinv2.SyncedEnforcer

*middleware.SessionManager

logger *zap.SugaredLogger

enforcerConfig *EnforcerConfig

@@ -130,7 +134,21 @@ func (e *EnforcerImpl) EnforceInBatch(token string, resource string, action stri

func (e *EnforcerImpl) ReloadPolicy() error {

//e.enforcerRWLock.Lock()

//defer e.enforcerRWLock.Unlock()

- return e.SyncedEnforcer.LoadPolicy()

+ var err error

+ if isV2() {

+ err = e.EnforcerV2.LoadPolicy()

+ if err != nil {

+ return err

+ }

+ fmt.Println("V2 policy reloaded successfully")

+ return nil

+ }

+ err = e.Enforcer.LoadPolicy()

+ if err != nil {

+ return err

+ }

+ fmt.Println("policy reloaded successfully")

+ return nil

}

// EnforceErr is a convenience helper to wrap a failed enforcement with a detailed error about the request

@@ -413,10 +431,20 @@ func (e *EnforcerImpl) enforceAndUpdateCache(email string, resource string, acti

func (e *EnforcerImpl) enforcerEnforce(email string, resource string, action string, resourceItem string) (bool, error) {

//e.enforcerRWLock.RLock()

//defer e.enforcerRWLock.RUnlock()

- response, err := e.SyncedEnforcer.EnforceSafe(email, resource, action, resourceItem)

- if err != nil {

- e.logger.Errorw("error occurred while enforcing safe", "email", email,

- "resource", resource, "action", action, "resourceItem", resourceItem, "reason", err)

+ var response bool

+ var err error

+ if isV2() {

+ response, err = e.EnforcerV2.Enforce(email, resource, action, resourceItem)

+ if err != nil {

+ e.logger.Errorw("error occurred while enforcing with casbin v2", "email", email,

+ "resource", resource, "action", action, "resourceItem", resourceItem, "reason", err)

+ }

+ } else {

+ response, err = e.Enforcer.EnforceSafe(email, resource, action, resourceItem)

+ if err != nil {

+ e.logger.Errorw("error occurred while enforcing safe", "email", email,

+ "resource", resource, "action", action, "resourceItem", resourceItem, "reason", err)

+ }

}

return response, err

}

diff --git a/vendor/github.com/casbin/casbin/v2/.gitignore b/vendor/github.com/casbin/casbin/v2/.gitignore

new file mode 100644

index 0000000000..da27805f5b

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/.gitignore

@@ -0,0 +1,30 @@

+# Compiled Object files, Static and Dynamic libs (Shared Objects)

+*.o

+*.a

+*.so

+

+# Folders

+_obj

+_test

+

+# Architecture specific extensions/prefixes

+*.[568vq]

+[568vq].out

+

+*.cgo1.go

+*.cgo2.c

+_cgo_defun.c

+_cgo_gotypes.go

+_cgo_export.*

+

+_testmain.go

+

+*.exe

+*.test

+*.prof

+

+.idea/

+*.iml

+

+# vendor files

+vendor

diff --git a/vendor/github.com/casbin/casbin/v2/.golangci.yml b/vendor/github.com/casbin/casbin/v2/.golangci.yml

new file mode 100644

index 0000000000..b8d3620198

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/.golangci.yml

@@ -0,0 +1,354 @@

+# Based on https://gist.github.com/maratori/47a4d00457a92aa426dbd48a18776322

+# This code is licensed under the terms of the MIT license https://opensource.org/license/mit

+# Copyright (c) 2021 Marat Reymers

+

+## Golden config for golangci-lint v1.56.2

+#

+# This is the best config for golangci-lint based on my experience and opinion.

+# It is very strict, but not extremely strict.

+# Feel free to adapt and change it for your needs.

+

+run:

+ # Timeout for analysis, e.g. 30s, 5m.

+ # Default: 1m

+ timeout: 3m

+

+

+# This file contains only configs which differ from defaults.

+# All possible options can be found here https://github.com/golangci/golangci-lint/blob/master/.golangci.reference.yml

+linters-settings:

+ cyclop:

+ # The maximal code complexity to report.

+ # Default: 10

+ max-complexity: 30

+ # The maximal average package complexity.

+ # If it's higher than 0.0 (float) the check is enabled

+ # Default: 0.0

+ package-average: 10.0

+

+ errcheck:

+ # Report about not checking of errors in type assertions: `a := b.(MyStruct)`.

+ # Such cases aren't reported by default.

+ # Default: false

+ check-type-assertions: true

+

+ exhaustive:

+ # Program elements to check for exhaustiveness.

+ # Default: [ switch ]

+ check:

+ - switch

+ - map

+

+ exhaustruct:

+ # List of regular expressions to exclude struct packages and their names from checks.

+ # Regular expressions must match complete canonical struct package/name/structname.

+ # Default: []

+ exclude:

+ # std libs

+ - "^net/http.Client$"

+ - "^net/http.Cookie$"

+ - "^net/http.Request$"

+ - "^net/http.Response$"

+ - "^net/http.Server$"

+ - "^net/http.Transport$"

+ - "^net/url.URL$"

+ - "^os/exec.Cmd$"

+ - "^reflect.StructField$"

+ # public libs

+ - "^github.com/Shopify/sarama.Config$"

+ - "^github.com/Shopify/sarama.ProducerMessage$"

+ - "^github.com/mitchellh/mapstructure.DecoderConfig$"

+ - "^github.com/prometheus/client_golang/.+Opts$"

+ - "^github.com/spf13/cobra.Command$"

+ - "^github.com/spf13/cobra.CompletionOptions$"

+ - "^github.com/stretchr/testify/mock.Mock$"

+ - "^github.com/testcontainers/testcontainers-go.+Request$"

+ - "^github.com/testcontainers/testcontainers-go.FromDockerfile$"

+ - "^golang.org/x/tools/go/analysis.Analyzer$"

+ - "^google.golang.org/protobuf/.+Options$"

+ - "^gopkg.in/yaml.v3.Node$"

+

+ funlen:

+ # Checks the number of lines in a function.

+ # If lower than 0, disable the check.

+ # Default: 60

+ lines: 100

+ # Checks the number of statements in a function.

+ # If lower than 0, disable the check.

+ # Default: 40

+ statements: 50

+ # Ignore comments when counting lines.

+ # Default false

+ ignore-comments: true

+

+ gocognit:

+ # Minimal code complexity to report.

+ # Default: 30 (but we recommend 10-20)

+ min-complexity: 20

+

+ gocritic:

+ # Settings passed to gocritic.

+ # The settings key is the name of a supported gocritic checker.

+ # The list of supported checkers can be found at https://go-critic.github.io/overview.

+ settings:

+ captLocal:

+ # Whether to restrict checker to params only.

+ # Default: true

+ paramsOnly: false

+ underef:

+ # Whether to skip (*x).method() calls where x is a pointer receiver.

+ # Default: true

+ skipRecvDeref: false

+

+ gomnd:

+ # List of function patterns to exclude from analysis.

+ # Values always ignored: `time.Date`,

+ # `strconv.FormatInt`, `strconv.FormatUint`, `strconv.FormatFloat`,

+ # `strconv.ParseInt`, `strconv.ParseUint`, `strconv.ParseFloat`.

+ # Default: []

+ ignored-functions:

+ - flag.Arg

+ - flag.Duration.*

+ - flag.Float.*

+ - flag.Int.*

+ - flag.Uint.*

+ - os.Chmod

+ - os.Mkdir.*

+ - os.OpenFile

+ - os.WriteFile

+ - prometheus.ExponentialBuckets.*

+ - prometheus.LinearBuckets

+

+ gomodguard:

+ blocked:

+ # List of blocked modules.

+ # Default: []

+ modules:

+ - github.com/golang/protobuf:

+ recommendations:

+ - google.golang.org/protobuf

+ reason: "see https://developers.google.com/protocol-buffers/docs/reference/go/faq#modules"

+ - github.com/satori/go.uuid:

+ recommendations:

+ - github.com/google/uuid

+ reason: "satori's package is not maintained"

+ - github.com/gofrs/uuid:

+ recommendations:

+ - github.com/gofrs/uuid/v5

+ reason: "gofrs' package was not go module before v5"

+

+ govet:

+ # Enable all analyzers.

+ # Default: false

+ enable-all: true

+ # Disable analyzers by name.

+ # Run `go tool vet help` to see all analyzers.

+ # Default: []

+ disable:

+ - fieldalignment # too strict

+ # Settings per analyzer.

+ settings:

+ shadow:

+ # Whether to be strict about shadowing; can be noisy.

+ # Default: false

+ #strict: true

+

+ inamedparam:

+ # Skips check for interface methods with only a single parameter.

+ # Default: false

+ skip-single-param: true

+

+ nakedret:

+ # Make an issue if func has more lines of code than this setting, and it has naked returns.

+ # Default: 30

+ max-func-lines: 0

+

+ nolintlint:

+ # Exclude following linters from requiring an explanation.

+ # Default: []

+ allow-no-explanation: [ funlen, gocognit, lll ]

+ # Enable to require an explanation of nonzero length after each nolint directive.

+ # Default: false

+ require-explanation: true

+ # Enable to require nolint directives to mention the specific linter being suppressed.

+ # Default: false

+ require-specific: true

+

+ perfsprint:

+ # Optimizes into strings concatenation.

+ # Default: true

+ strconcat: false

+

+ rowserrcheck:

+ # database/sql is always checked

+ # Default: []

+ packages:

+ - github.com/jmoiron/sqlx

+

+ tenv:

+ # The option `all` will run against whole test files (`_test.go`) regardless of method/function signatures.

+ # Otherwise, only methods that take `*testing.T`, `*testing.B`, and `testing.TB` as arguments are checked.

+ # Default: false

+ all: true

+

+ stylecheck:

+ # STxxxx checks in https://staticcheck.io/docs/configuration/options/#checks

+ # Default: ["*"]

+ checks: ["all", "-ST1003"]

+

+ revive:

+ rules:

+ # https://github.com/mgechev/revive/blob/master/RULES_DESCRIPTIONS.md#unused-parameter

+ - name: unused-parameter

+ disabled: true

+

+linters:

+ disable-all: true

+ enable:

+ ## enabled by default

+ #- errcheck # checking for unchecked errors, these unchecked errors can be critical bugs in some cases

+ - gosimple # specializes in simplifying a code

+ - govet # reports suspicious constructs, such as Printf calls whose arguments do not align with the format string

+ - ineffassign # detects when assignments to existing variables are not used

+ - staticcheck # is a go vet on steroids, applying a ton of static analysis checks

+ - typecheck # like the front-end of a Go compiler, parses and type-checks Go code

+ - unused # checks for unused constants, variables, functions and types

+ ## disabled by default

+ - asasalint # checks for pass []any as any in variadic func(...any)

+ - asciicheck # checks that your code does not contain non-ASCII identifiers

+ - bidichk # checks for dangerous unicode character sequences

+ - bodyclose # checks whether HTTP response body is closed successfully

+ - cyclop # checks function and package cyclomatic complexity

+ - dupl # tool for code clone detection

+ - durationcheck # checks for two durations multiplied together

+ - errname # checks that sentinel errors are prefixed with the Err and error types are suffixed with the Error

+ #- errorlint # finds code that will cause problems with the error wrapping scheme introduced in Go 1.13

+ - execinquery # checks query string in Query function which reads your Go src files and warning it finds

+ - exhaustive # checks exhaustiveness of enum switch statements

+ - exportloopref # checks for pointers to enclosing loop variables

+ #- forbidigo # forbids identifiers

+ - funlen # tool for detection of long functions

+ - gocheckcompilerdirectives # validates go compiler directive comments (//go:)

+ #- gochecknoglobals # checks that no global variables exist

+ - gochecknoinits # checks that no init functions are present in Go code

+ - gochecksumtype # checks exhaustiveness on Go "sum types"

+ #- gocognit # computes and checks the cognitive complexity of functions

+ #- goconst # finds repeated strings that could be replaced by a constant

+ #- gocritic # provides diagnostics that check for bugs, performance and style issues

+ - gocyclo # computes and checks the cyclomatic complexity of functions

+ - godot # checks if comments end in a period

+ - goimports # in addition to fixing imports, goimports also formats your code in the same style as gofmt

+ #- gomnd # detects magic numbers

+ - gomoddirectives # manages the use of 'replace', 'retract', and 'excludes' directives in go.mod

+ - gomodguard # allow and block lists linter for direct Go module dependencies. This is different from depguard where there are different block types for example version constraints and module recommendations

+ - goprintffuncname # checks that printf-like functions are named with f at the end

+ - gosec # inspects source code for security problems

+ #- lll # reports long lines

+ - loggercheck # checks key value pairs for common logger libraries (kitlog,klog,logr,zap)

+ - makezero # finds slice declarations with non-zero initial length

+ - mirror # reports wrong mirror patterns of bytes/strings usage

+ - musttag # enforces field tags in (un)marshaled structs

+ - nakedret # finds naked returns in functions greater than a specified function length

+ - nestif # reports deeply nested if statements

+ - nilerr # finds the code that returns nil even if it checks that the error is not nil

+ #- nilnil # checks that there is no simultaneous return of nil error and an invalid value

+ - noctx # finds sending http request without context.Context

+ - nolintlint # reports ill-formed or insufficient nolint directives

+ #- nonamedreturns # reports all named returns

+ - nosprintfhostport # checks for misuse of Sprintf to construct a host with port in a URL

+ #- perfsprint # checks that fmt.Sprintf can be replaced with a faster alternative

+ - predeclared # finds code that shadows one of Go's predeclared identifiers

+ - promlinter # checks Prometheus metrics naming via promlint

+ - protogetter # reports direct reads from proto message fields when getters should be used

+ - reassign # checks that package variables are not reassigned

+ - revive # fast, configurable, extensible, flexible, and beautiful linter for Go, drop-in replacement of golint

+ - rowserrcheck # checks whether Err of rows is checked successfully

+ - sloglint # ensure consistent code style when using log/slog

+ - spancheck # checks for mistakes with OpenTelemetry/Census spans

+ - sqlclosecheck # checks that sql.Rows and sql.Stmt are closed

+ - stylecheck # is a replacement for golint

+ - tenv # detects using os.Setenv instead of t.Setenv since Go1.17

+ - testableexamples # checks if examples are testable (have an expected output)

+ - testifylint # checks usage of github.com/stretchr/testify

+ #- testpackage # makes you use a separate _test package

+ - tparallel # detects inappropriate usage of t.Parallel() method in your Go test codes

+ - unconvert # removes unnecessary type conversions

+ #- unparam # reports unused function parameters

+ - usestdlibvars # detects the possibility to use variables/constants from the Go standard library

+ - wastedassign # finds wasted assignment statements

+ - whitespace # detects leading and trailing whitespace

+

+ ## you may want to enable

+ #- decorder # checks declaration order and count of types, constants, variables and functions

+ #- exhaustruct # [highly recommend to enable] checks if all structure fields are initialized

+ #- gci # controls golang package import order and makes it always deterministic

+ #- ginkgolinter # [if you use ginkgo/gomega] enforces standards of using ginkgo and gomega

+ #- godox # detects FIXME, TODO and other comment keywords

+ #- goheader # checks if the file header matches a pattern

+ #- inamedparam # [great idea, but too strict, need to ignore a lot of cases by default] reports interfaces with unnamed method parameters

+ #- interfacebloat # checks the number of methods inside an interface

+ #- ireturn # accept interfaces, return concrete types

+ #- prealloc # [premature optimization, but can be used in some cases] finds slice declarations that could potentially be preallocated

+ #- tagalign # checks that struct tags are well aligned

+ #- varnamelen # [great idea, but too many false positives] checks that the length of a variable's name matches its scope

+ #- wrapcheck # checks that errors returned from external packages are wrapped

+ #- zerologlint # detects the wrong usage of zerolog that a user forgets to dispatch zerolog.Event

+

+ ## disabled

+ #- containedctx # detects struct contained context.Context field

+ #- contextcheck # [too many false positives] checks the function whether use a non-inherited context

+ #- depguard # [replaced by gomodguard] checks if package imports are in a list of acceptable packages

+ #- dogsled # checks assignments with too many blank identifiers (e.g. x, _, _, _, := f())

+ #- dupword # [useless without config] checks for duplicate words in the source code

+ #- errchkjson # [don't see profit + I'm against of omitting errors like in the first example https://github.com/breml/errchkjson] checks types passed to the json encoding functions. Reports unsupported types and optionally reports occasions, where the check for the returned error can be omitted

+ #- forcetypeassert # [replaced by errcheck] finds forced type assertions

+ #- goerr113 # [too strict] checks the errors handling expressions

+ #- gofmt # [replaced by goimports] checks whether code was gofmt-ed

+ #- gofumpt # [replaced by goimports, gofumports is not available yet] checks whether code was gofumpt-ed

+ #- gosmopolitan # reports certain i18n/l10n anti-patterns in your Go codebase

+ #- grouper # analyzes expression groups

+ #- importas # enforces consistent import aliases

+ #- maintidx # measures the maintainability index of each function

+ #- misspell # [useless] finds commonly misspelled English words in comments

+ #- nlreturn # [too strict and mostly code is not more readable] checks for a new line before return and branch statements to increase code clarity

+ #- paralleltest # [too many false positives] detects missing usage of t.Parallel() method in your Go test

+ #- tagliatelle # checks the struct tags

+ #- thelper # detects golang test helpers without t.Helper() call and checks the consistency of test helpers

+ #- wsl # [too strict and mostly code is not more readable] whitespace linter forces you to use empty lines

+

+ ## deprecated

+ #- deadcode # [deprecated, replaced by unused] finds unused code

+ #- exhaustivestruct # [deprecated, replaced by exhaustruct] checks if all struct's fields are initialized

+ #- golint # [deprecated, replaced by revive] golint differs from gofmt. Gofmt reformats Go source code, whereas golint prints out style mistakes

+ #- ifshort # [deprecated] checks that your code uses short syntax for if-statements whenever possible

+ #- interfacer # [deprecated] suggests narrower interface types

+ #- maligned # [deprecated, replaced by govet fieldalignment] detects Go structs that would take less memory if their fields were sorted

+ #- nosnakecase # [deprecated, replaced by revive var-naming] detects snake case of variable naming and function name

+ #- scopelint # [deprecated, replaced by exportloopref] checks for unpinned variables in go programs

+ #- structcheck # [deprecated, replaced by unused] finds unused struct fields

+ #- varcheck # [deprecated, replaced by unused] finds unused global variables and constants

+

+

+issues:

+ # Maximum count of issues with the same text.

+ # Set to 0 to disable.

+ # Default: 3

+ max-same-issues: 50

+

+ exclude-rules:

+ - source: "(noinspection|TODO)"

+ linters: [ godot ]

+ - source: "//noinspection"

+ linters: [ gocritic ]

+ - path: "_test\\.go"

+ linters:

+ - bodyclose

+ - dupl

+ - funlen

+ - goconst

+ - gosec

+ - noctx

+ - wrapcheck

+ # TODO: remove after PR is released https://github.com/golangci/golangci-lint/pull/4386

+ - text: "fmt.Sprintf can be replaced with string addition"

+ linters: [ perfsprint ]

\ No newline at end of file

diff --git a/vendor/github.com/casbin/casbin/v2/.releaserc.json b/vendor/github.com/casbin/casbin/v2/.releaserc.json

new file mode 100644

index 0000000000..58cb0bb4ca

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/.releaserc.json

@@ -0,0 +1,16 @@

+{

+ "debug": true,

+ "branches": [

+ "+([0-9])?(.{+([0-9]),x}).x",

+ "master",

+ {

+ "name": "beta",

+ "prerelease": true

+ }

+ ],

+ "plugins": [

+ "@semantic-release/commit-analyzer",

+ "@semantic-release/release-notes-generator",

+ "@semantic-release/github"

+ ]

+}

diff --git a/vendor/github.com/casbin/casbin/v2/.travis.yml b/vendor/github.com/casbin/casbin/v2/.travis.yml

new file mode 100644

index 0000000000..cea21652e0

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/.travis.yml

@@ -0,0 +1,15 @@

+language: go

+

+sudo: false

+

+env:

+ - GO111MODULE=on

+

+go:

+ - "1.11.13"

+ - "1.12"

+ - "1.13"

+ - "1.14"

+

+script:

+ - make test

diff --git a/vendor/github.com/casbin/casbin/v2/CONTRIBUTING.md b/vendor/github.com/casbin/casbin/v2/CONTRIBUTING.md

new file mode 100644

index 0000000000..4bab59c93f

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/CONTRIBUTING.md

@@ -0,0 +1,35 @@

+# How to contribute

+

+The following is a set of guidelines for contributing to casbin and its libraries, which are hosted in the [casbin organization on GitHub](https://github.com/casbin).

+

+This project adheres to the [Contributor Covenant 1.2.](https://www.contributor-covenant.org/version/1/2/0/code-of-conduct.html) By participating, you are expected to uphold this code. Please report unacceptable behavior to info@casbin.com.

+

+## Questions

+

+- We do our best to keep the [documentation](https://casbin.org/docs/overview) up to date.

+- [Stack Overflow](https://stackoverflow.com) is the best place to start if you have a question. Please use the [casbin tag](https://stackoverflow.com/tags/casbin/info), which we actively monitor. We encourage you to use Stack Overflow especially for questions about modeling access control problems, in order to build a shared knowledge base.

+- You can also join our [Discord](https://discord.gg/S5UjpzGZjN).

+

+## Reporting issues

+

+Reporting issues is a great way to contribute to the project. We are always grateful for a well-written, thorough bug report.

+

+Before raising a new issue, check our [issue list](https://github.com/casbin/casbin/issues) to determine if it already contains the problem that you are facing.

+

+A good bug report shouldn't leave others needing to chase you for more information. Please be as detailed as possible. The following questions might serve as a template for writing a detailed report:

+

+What were you trying to achieve?

+What are the expected results?

+What are the received results?

+What are the steps to reproduce the issue?

+In what environment did you encounter the issue?

+

+Feature requests can also be submitted as issues.

+

+## Pull requests

+

+Good pull requests (e.g. patches, improvements, new features) are a fantastic help. They should remain focused in scope and avoid unrelated commits.

+

+Please ask first before embarking on any significant pull request (e.g. implementing new features, refactoring code etc.), otherwise you risk spending a lot of time working on something that the maintainers might not want to merge into the project.

+

+First add an issue to the project to discuss the improvement. Please adhere to the coding conventions used throughout the project. If in doubt, consult the [Effective Go style guide](https://golang.org/doc/effective_go.html).

diff --git a/vendor/github.com/casbin/casbin/v2/LICENSE b/vendor/github.com/casbin/casbin/v2/LICENSE

new file mode 100644

index 0000000000..8dada3edaf

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/LICENSE

@@ -0,0 +1,201 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "{}"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright {yyyy} {name of copyright owner}

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/vendor/github.com/casbin/casbin/v2/Makefile b/vendor/github.com/casbin/casbin/v2/Makefile

new file mode 100644

index 0000000000..6db2b92071

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/Makefile

@@ -0,0 +1,18 @@

+SHELL = /bin/bash

+export PATH := $(shell yarn global bin):$(PATH)

+

+default: lint test

+

+test:

+ go test -race -v ./...

+

+benchmark:

+ go test -bench=.

+

+lint:

+ golangci-lint run --verbose

+

+release:

+ yarn global add semantic-release@17.2.4

+ semantic-release

+

diff --git a/vendor/github.com/casbin/casbin/v2/README.md b/vendor/github.com/casbin/casbin/v2/README.md

new file mode 100644

index 0000000000..36549f55f9

--- /dev/null

+++ b/vendor/github.com/casbin/casbin/v2/README.md

@@ -0,0 +1,296 @@

+Casbin

+====

+

+[Go Report Card](https://goreportcard.com/report/github.com/casbin/casbin)

+[Build](https://github.com/casbin/casbin/actions/workflows/default.yml)

+[Coverage Status](https://coveralls.io/github/casbin/casbin?branch=master)

+[Godoc](https://pkg.go.dev/github.com/casbin/casbin/v2)

+[Release](https://github.com/casbin/casbin/releases/latest)

+[Discord](https://discord.gg/S5UjpzGZjN)

+[Sourcegraph](https://sourcegraph.com/github.com/casbin/casbin?badge)

+

+**News**: still worried about how to write a correct Casbin policy? The ``Casbin online editor`` is here to help! Try it at: https://casbin.org/editor/

+

+

+

+Casbin is a powerful and efficient open-source access control library for Golang projects. It provides support for enforcing authorization based on various [access control models](https://en.wikipedia.org/wiki/Computer_security_model).

+

+

+

+

+## All the languages supported by Casbin:

+

+| [Go](https://github.com/casbin/casbin) | [Java](https://github.com/casbin/jcasbin) | [Node.js](https://github.com/casbin/node-casbin) | [PHP](https://github.com/php-casbin/php-casbin) |

+|----------------------------------------------------------------------------------------|-------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------|------------------------------------------------------------------------------------------|

+| [Casbin](https://github.com/casbin/casbin) | [jCasbin](https://github.com/casbin/jcasbin) | [node-Casbin](https://github.com/casbin/node-casbin) | [PHP-Casbin](https://github.com/php-casbin/php-casbin) |

+| production-ready | production-ready | production-ready | production-ready |

+

+| [PyCasbin](https://github.com/casbin/pycasbin) | [Casbin.NET](https://github.com/casbin-net/Casbin.NET) | [Casbin-CPP](https://github.com/casbin/casbin-cpp) | [Casbin-RS](https://github.com/casbin/casbin-rs) |

+|---|---|---|---|

+| production-ready | production-ready | production-ready | production-ready |

+

+## Table of contents

+

+- [Supported models](#supported-models)

+- [How it works?](#how-it-works)

+- [Features](#features)

+- [Installation](#installation)

+- [Documentation](#documentation)

+- [Online editor](#online-editor)

+- [Tutorials](#tutorials)

+- [Get started](#get-started)

+- [Policy management](#policy-management)

+- [Policy persistence](#policy-persistence)

+- [Policy consistence between multiple nodes](#policy-consistence-between-multiple-nodes)

+- [Role manager](#role-manager)

+- [Benchmarks](#benchmarks)

+- [Examples](#examples)

+- [Middlewares](#middlewares)

+- [Our adopters](#our-adopters)

+

+## Supported models

+

+1. [**ACL (Access Control List)**](https://en.wikipedia.org/wiki/Access_control_list)

+2. **ACL with [superuser](https://en.wikipedia.org/wiki/Superuser)**

+3. **ACL without users**: especially useful for systems that don't have authentication or user log-ins.

+4. **ACL without resources**: some scenarios target a type of resource instead of an individual resource by using permissions like ``write-article`` or ``read-log``. This model doesn't control access to a specific article or log.

+5. **[RBAC (Role-Based Access Control)](https://en.wikipedia.org/wiki/Role-based_access_control)**

+6. **RBAC with resource roles**: both users and resources can have roles (or groups) at the same time.

+7. **RBAC with domains/tenants**: users can have different role sets for different domains/tenants.

+8. **[ABAC (Attribute-Based Access Control)](https://en.wikipedia.org/wiki/Attribute-Based_Access_Control)**: syntax sugar like ``resource.Owner`` can be used to get the attribute for a resource.

+9. **[RESTful](https://en.wikipedia.org/wiki/Representational_state_transfer)**: supports paths like ``/res/*``, ``/res/:id`` and HTTP methods like ``GET``, ``POST``, ``PUT``, ``DELETE``.

+10. **Deny-override**: both allow and deny authorizations are supported; deny overrides allow.

+11. **Priority**: the policy rules can be prioritized like firewall rules.

+
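The deny-override model in the list above can be illustrated with a small standalone Go sketch. It is not Casbin's implementation and uses no Casbin API: the `Effect` and `Rule` types and the `enforceDenyOverride` function are hypothetical names introduced only for this example. It assumes the usual reading of deny-override, where a request is granted only if some matching rule allows it and no matching rule denies it.

```go
package main

import "fmt"

// Effect is the outcome attached to a policy rule (hypothetical type for this sketch).
type Effect int

const (
	Allow Effect = iota
	Deny
)

// Rule is one policy line with an explicit effect (hypothetical, not a Casbin type).
type Rule struct {
	Sub, Obj, Act string
	Eft           Effect
}

// enforceDenyOverride grants access only if at least one matching rule
// allows the request and no matching rule denies it.
func enforceDenyOverride(policy []Rule, sub, obj, act string) bool {
	allowed := false
	for _, p := range policy {
		if p.Sub != sub || p.Obj != obj || p.Act != act {
			continue // rule does not apply to this request
		}
		if p.Eft == Deny {
			return false // a single matching deny wins
		}
		allowed = true
	}
	return allowed
}

func main() {
	policy := []Rule{
		{"alice", "data1", "read", Allow},
		{"alice", "data1", "read", Deny},
		{"bob", "data2", "write", Allow},
	}
	fmt.Println(enforceDenyOverride(policy, "alice", "data1", "read"))
	fmt.Println(enforceDenyOverride(policy, "bob", "data2", "write"))
}
```

In this sketch alice is refused even though an allow rule matches, because a matching deny rule is also present; bob is granted access because only an allow rule matches.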

+## How it works?

+

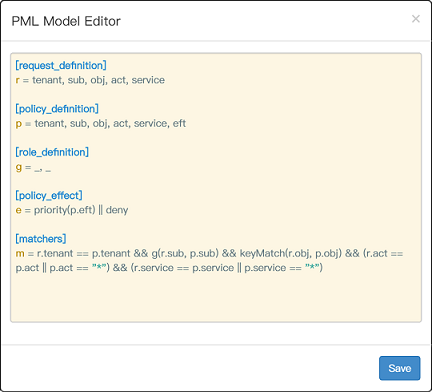

+In Casbin, an access control model is abstracted into a CONF file based on the **PERM metamodel (Policy, Effect, Request, Matchers)**. So switching or upgrading the authorization mechanism for a project is just as simple as modifying a configuration. You can customize your own access control model by combining the available models. For example, you can get RBAC roles and ABAC attributes together inside one model and share one set of policy rules.

+

+The most basic and simplest model in Casbin is ACL. ACL's model CONF is:

+

+```ini

+# Request definition

+[request_definition]

+r = sub, obj, act

+

+# Policy definition

+[policy_definition]

+p = sub, obj, act

+

+# Policy effect

+[policy_effect]

+e = some(where (p.eft == allow))

+

+# Matchers

+[matchers]

+m = r.sub == p.sub && r.obj == p.obj && r.act == p.act

+

+```

+

+An example policy for the ACL model looks like this:

+

+```

+p, alice, data1, read

+p, bob, data2, write

+```

+

+It means:

+

+- alice can read data1

+- bob can write data2

+
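To make the ACL model and policy above concrete, here is a minimal standalone Go sketch of what the matcher `r.sub == p.sub && r.obj == p.obj && r.act == p.act` combined with the effect `some(where (p.eft == allow))` amounts to. It does not use Casbin itself; `Rule` and `enforceACL` are hypothetical names, and every rule is assumed to carry the default `allow` effect.

```go
package main

import "fmt"

// Rule mirrors one `p = sub, obj, act` policy line.
// The type is hypothetical and not part of the Casbin API.
type Rule struct{ Sub, Obj, Act string }

// enforceACL returns true if at least one rule matches the request exactly,
// which is what "some(where (p.eft == allow))" means when every rule allows.
func enforceACL(policy []Rule, sub, obj, act string) bool {
	for _, p := range policy {
		if p.Sub == sub && p.Obj == obj && p.Act == act {
			return true
		}
	}
	return false
}

func main() {
	policy := []Rule{
		{"alice", "data1", "read"},
		{"bob", "data2", "write"},
	}
	fmt.Println(enforceACL(policy, "alice", "data1", "read"))
	fmt.Println(enforceACL(policy, "alice", "data2", "write"))
}
```

With this policy, alice's read of data1 matches the first rule, while alice's write to data2 matches no rule and is therefore refused.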

+We also support multi-line mode by appending `\` at the end of a line:

+

+```ini

+# Matchers

+[matchers]

+m = r.sub == p.sub && r.obj == p.obj \

+ && r.act == p.act

+```

+

+Furthermore, if you are using ABAC, you can use the `in` operator as follows in the **Golang** edition of Casbin (jCasbin and Node-Casbin do not support it yet):

+

+```ini

+# Matchers

+[matchers]

+m = r.obj == p.obj && r.act == p.act || r.obj in ('data2', 'data3')

+```

+

+But you **SHOULD** make sure that the array contains **MORE** than **1** element; otherwise the matcher will panic.

+

+For more operators, take a look at [govaluate](https://github.com/casbin/govaluate).

+
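The `in` matcher shown above can be read as the following plain Go predicate. This is only a sketch of the boolean logic, not how Casbin or govaluate evaluates the expression; `matchWithIn` is a hypothetical name, and the sketch assumes `&&` binds tighter than `||`. Note that this particular matcher never checks `r.sub`, so any subject is accepted for `data2` and `data3`.

```go
package main

import "fmt"

// matchWithIn mirrors the matcher
//   r.obj == p.obj && r.act == p.act || r.obj in ('data2', 'data3')
// under the assumption that && binds tighter than ||.
func matchWithIn(rObj, rAct, pObj, pAct string) bool {
	allowedObjs := []string{"data2", "data3"}
	inList := false
	for _, o := range allowedObjs {
		if rObj == o {
			inList = true
			break
		}
	}
	return (rObj == pObj && rAct == pAct) || inList
}

func main() {
	// Matches through the object/action comparison.
	fmt.Println(matchWithIn("data1", "read", "data1", "read"))
	// Matches only because the object is in the list.
	fmt.Println(matchWithIn("data3", "write", "data1", "read"))
	// Matches neither branch.
	fmt.Println(matchWithIn("data1", "write", "data1", "read"))
}
```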

+## Features

+

+What Casbin does:

+

+1. enforce the policy in the classic ``{subject, object, action}`` form or a customized form that you define; both allow and deny authorizations are supported.

+2. handle the storage of the access control model and its policy.

+3. manage the role-user mappings and role-role mappings (aka role hierarchy in RBAC).

+4. support built-in superusers like ``root`` or ``administrator``. A superuser can do anything without explicit permissions.

+5. provide multiple built-in operators to support rule matching. For example, ``keyMatch`` can map a resource key ``/foo/bar`` to the pattern ``/foo*``.

+
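As an illustration of the ``keyMatch`` example in the list above, the following standalone Go sketch shows one simple way such a wildcard match could work: everything before the first `*` in the pattern is treated as a required prefix. The function name `keyMatchSketch` is hypothetical, and the sketch is a simplification rather than a copy of Casbin's built-in function.

```go
package main

import (
	"fmt"
	"strings"
)

// keyMatchSketch reports whether key matches pattern, where the part of the
// pattern before the first '*' must be a prefix of key. Without a '*',
// the two strings must be equal. Hypothetical helper, not the Casbin API.
func keyMatchSketch(key, pattern string) bool {
	i := strings.Index(pattern, "*")
	if i == -1 {
		return key == pattern
	}
	return strings.HasPrefix(key, pattern[:i])
}

func main() {
	fmt.Println(keyMatchSketch("/foo/bar", "/foo*"))
	fmt.Println(keyMatchSketch("/bar", "/foo*"))
	fmt.Println(keyMatchSketch("/foo", "/foo"))
}
```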

+What Casbin does NOT do:

+

+1. authentication (i.e., verifying ``username`` and ``password`` when a user logs in)

+2. manage the list of users or roles. I believe it's more convenient for the project itself to manage these entities. Users usually have their passwords, and Casbin is not designed as a password container. However, Casbin stores the user-role mapping for the RBAC scenario.

+

+## Installation

+

+```

+go get github.com/casbin/casbin/v2

+```

+

+## Documentation

+

+https://casbin.org/docs/overview

+

+## Online editor

+

+You can also use the online editor (https://casbin.org/editor/) to write your Casbin model and policy in your web browser. It provides functionality such as ``syntax highlighting`` and ``code completion``, just like an IDE for a programming language.

+

+## Tutorials

+

+https://casbin.org/docs/tutorials

+

+## Get started

+

+1. Create a new Casbin enforcer with a model file and a policy file:

+

+ ```go

+ e, _ := casbin.NewEnforcer("path/to/model.conf", "path/to/policy.csv")

+ ```

+

+Note: you can also initialize an enforcer with a policy stored in a database instead of a file; see the [Policy persistence](#policy-persistence) section for details.

+

+2. Add an enforcement hook into your code right before the access happens:

+

+ ```go

+ sub := "alice" // the user that wants to access a resource.

+ obj := "data1" // the resource that is going to be accessed.

+ act := "read" // the operation that the user performs on the resource.

+

+ if res, _ := e.Enforce(sub, obj, act); res {

+ // permit alice to read data1

+ } else {

+ // deny the request, show an error

+ }

+ ```

+

+3. Besides the static policy file, Casbin also provides an API for permission management at run time. For example, you can get all the roles assigned to a user as follows:

+

+ ```go

+ roles, _ := e.GetImplicitRolesForUser(sub)

+ ```

+

+See [Policy management APIs](#policy-management) for more usage.

+