v4.56: Dino v3, X-Codec, Ovis 2, MetaCLIP 2, Florence 2, SAM 2, Kosmos 2.5, HunYuan, GLM-4.5V

New model additions

Dino v3

DINOv3 is a family of versatile vision foundation models that outperforms the specialized state of the art across a broad range of settings, without fine-tuning. DINOv3 produces high-quality dense features that achieve outstanding performance on various vision tasks, significantly surpassing previous self- and weakly-supervised foundation models.

You can find all the original DINOv3 checkpoints under the DINOv3 collection.

X-Codec

The X-Codec model was proposed in Codec Does Matter: Exploring the Semantic Shortcoming of Codec for Audio Language Model by Zhen Ye, Peiwen Sun, Jiahe Lei, Hongzhan Lin, Xu Tan, Zheqi Dai, Qiuqiang Kong, Jianyi Chen, Jiahao Pan, Qifeng Liu, Yike Guo, Wei Xue.

The X-Codec model is a neural audio codec that integrates semantic information from self-supervised models (e.g., HuBERT) alongside traditional acoustic information. This enables:

- Music continuation: Better modeling of musical semantics yields more coherent continuations.

- Text-to-sound synthesis: X-Codec captures semantic alignment between text prompts and generated audio.

- Semantic-aware audio tokenization: X-Codec is used as an audio tokenizer in the YuE lyrics-to-song generation model.

- Add X-Codec model by @Manalelaidouni in #38248

Ovis 2

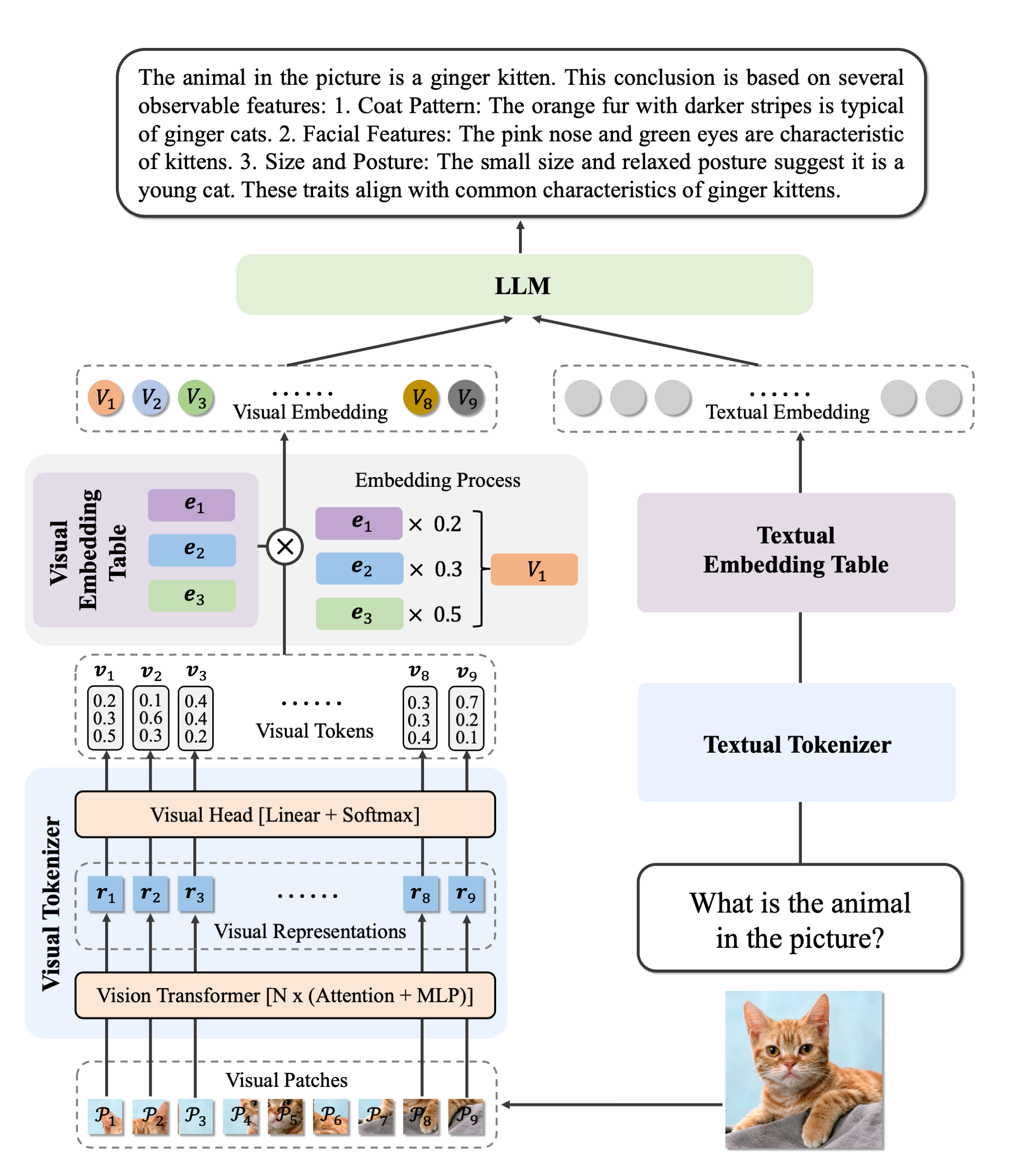

Ovis2 is an updated version of the Ovis model developed by the AIDC-AI team at Alibaba International Digital Commerce Group.

Ovis2 is the latest advancement in multi-modal large language models (MLLMs), succeeding Ovis1.6. It retains the architectural design of the Ovis series, which focuses on aligning visual and textual embeddings, and introduces major improvements in data curation and training methods.

- Add Ovis2 model and processor implementation by @thisisiron in #37088

MetaCLIP 2

MetaCLIP 2 is a replication of the original CLIP model trained on 300+ languages. It achieves state-of-the-art (SOTA) results on multilingual benchmarks (e.g., XM3600, CVQA, Babel‑ImageNet), surpassing previous SOTA such as mSigLIP and SigLIP‑2. The authors show that English and non-English worlds can mutually benefit and elevate each other.

- Add MetaCLIP 2 by @NielsRogge in #39826

Florence 2

Florence-2 is an advanced vision foundation model that uses a prompt-based approach to handle a wide range of vision and vision-language tasks. Florence-2 can interpret simple text prompts to perform tasks like captioning, object detection, and segmentation. It leverages the FLD-5B dataset, containing 5.4 billion annotations across 126 million images, to master multi-task learning. The model's sequence-to-sequence architecture enables it to excel in both zero-shot and fine-tuned settings, proving to be a competitive vision foundation model.

- Add support for Florence-2 by @ducviet00 in #38188

SAM 2

SAM2 (Segment Anything Model 2) was proposed in Segment Anything in Images and Videos by Nikhila Ravi, Valentin Gabeur, Yuan-Ting Hu, Ronghang Hu, Chaitanya Ryali, Tengyu Ma, Haitham Khedr, Roman Rädle, Chloe Rolland, Laura Gustafson, Eric Mintun, Junting Pan, Kalyan Vasudev Alwala, Nicolas Carion, Chao-Yuan Wu, Ross Girshick, Piotr Dollár, Christoph Feichtenhofer.

The model can be used to predict segmentation masks of any object of interest given an input image or video, and input points or bounding boxes.

- Add Segment Anything 2 (SAM2) by @SangbumChoi in #32317

Kosmos 2.5

The Kosmos-2.5 model was proposed in KOSMOS-2.5: A Multimodal Literate Model by Microsoft.

The abstract from the paper is the following:

We present Kosmos-2.5, a multimodal literate model for machine reading of text-intensive images. Pre-trained on large-scale text-intensive images, Kosmos-2.5 excels in two distinct yet cooperative transcription tasks: (1) generating spatially-aware text blocks, where each block of text is assigned its spatial coordinates within the image, and (2) producing structured text output that captures styles and structures into the markdown format. This unified multimodal literate capability is achieved through a shared Transformer architecture, task-specific prompts, and flexible text representations. We evaluate Kosmos-2.5 on end-to-end document-level text recognition and image-to-markdown text generation. Furthermore, the model can be readily adapted for any text-intensive image understanding task with different prompts through supervised fine-tuning, making it a general-purpose tool for real-world applications involving text-rich images. This work also paves the way for the future scaling of multimodal large language models.

HunYuan

More information at release 🤗

Seed OSS

More information at release 🤗

- Adding ByteDance Seed Seed-OSS by @Fazziekey in #40272

GLM-4.5V

More information at release 🤗

- GLM-4.5V Model Support by @zRzRzRzRzRzRzR in #39805

Cache

Beyond a large refactor of the caching system in Transformers, making it much more practical and general, models using sliding window attention/chunk attention no longer waste memory when caching past states. This was made possible most notably by:

- New DynamicSlidingWindowLayer & associated Cache by @Cyrilvallez in #40039

See the following improvements on memory usage for Mistral (using only sliding layers) and GPT-OSS (1 out of 2 layers is sliding) respectively:

Beyond memory usage, it will also improve generation/forward speed by a large margin for large contexts, as only necessary states are passed to the attention computation, which is very sensitive to the sequence length.
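To illustrate why a sliding layer needs less memory, here is a minimal toy sketch. It is not the library's DynamicSlidingWindowLayer: the class name `ToySlidingWindowCache` and its `update` method are hypothetical, and the library's exact window bookkeeping may differ. The point is only that states older than the window are dropped, so both memory and the number of states passed to attention stay bounded by the window size.

```python
from collections import deque


class ToySlidingWindowCache:
    """Toy per-layer cache that keeps at most `window` past states.

    Hypothetical illustration only; not the Transformers implementation.
    """

    def __init__(self, window: int):
        if window <= 0:
            raise ValueError("window must be a positive integer")
        self.window = window
        # A bounded deque silently drops the oldest entry once it is full.
        self._states = deque(maxlen=window)
        self.seen = 0  # total number of states ever added

    def update(self, new_states):
        """Append new states and return the states still visible to attention."""
        new_states = list(new_states)
        for state in new_states:
            self._states.append(state)
        self.seen += len(new_states)
        return list(self._states)


cache = ToySlidingWindowCache(window=4)
cache.update(range(6))       # "prefill" with 6 token states: 0..5
visible = cache.update([6])  # one decoding step
print(visible)               # only the last 4 states are kept
print(cache.seen)            # 7 states were seen in total
```

With a full (non-sliding) cache, the stored states would grow with every generated token; here they never exceed `window`.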

Quantization

MXFP4

Since the GPT-OSS release, which introduced the MXFP4 quantization type, several improvements have been made to its support, which should now be stabilizing.

- Fix MXFP4 quantizer validation to allow CPU inference with dequantize option by @returnL in #39953

- Enable gpt-oss mxfp4 on older hardware (sm75+) by @matthewdouglas in #39940

- Fix typo and improve GPU kernel check error message in MXFP4 quantization by @akintunero in #40349

- Default to dequantize if cpu in device_map for mxfp4 by @MekkCyber in #39993

- Fix GPT-OSS swiglu_limit not passed in for MXFP4 by @danielhanchen in #40197

- [Mxfp4] Add a way to save with a quantization method by @ArthurZucker in #40176

New standard

Now that we have deprecated TensorFlow and JAX, we felt that torch_dtype was not only misaligned with torch, but also redundant and hard to remember. For this reason, we switched to the much more standard dtype argument!

- ⚠️ ⚠️ Use dtype instead of torch_dtype everywhere! by @Cyrilvallez in #39782

torch_dtype will still be a valid usage for as long as needed to ensure a smooth transition, but new code should use dtype, and we encourage you to update older code as well!
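When updating older code, one option is a small shim that renames the legacy keyword before the arguments reach a loader. The sketch below is only an illustration: `migrate_dtype_kwarg` is a hypothetical helper, not part of Transformers, and the `device_map` entry is just an example keyword.

```python
import warnings


def migrate_dtype_kwarg(kwargs: dict) -> dict:
    """Return a copy of `kwargs` with a legacy `torch_dtype` key renamed to `dtype`.

    Hypothetical helper for illustration; it is not a Transformers API.
    """
    updated = dict(kwargs)
    if "torch_dtype" in updated:
        legacy = updated.pop("torch_dtype")
        if "dtype" in updated and updated["dtype"] != legacy:
            raise ValueError("Got conflicting values for dtype and torch_dtype.")
        updated.setdefault("dtype", legacy)
        warnings.warn(
            "torch_dtype is still accepted, but new code should pass dtype.",
            FutureWarning,
            stacklevel=2,
        )
    return updated


# Old-style arguments, as they might appear in existing code.
load_kwargs = migrate_dtype_kwarg({"torch_dtype": "auto", "device_map": "auto"})
print(load_kwargs)
# The result could then be forwarded to a loader, for example:
#   AutoModelForCausalLM.from_pretrained(model_id, **load_kwargs)
```

In most cases a plain search-and-replace of the keyword is enough; a shim like this is mainly useful where the arguments come from user configuration files.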

Breaking changes

The following commits are breaking changes in workflows that were either buggy or not working as expected.

Saner hub-defaults for hybrid cache implementation

On models where the hub checkpoint specifies cache_implementation="hybrid" (static sliding window hybrid cache), this value is now unset. These models will therefore use the dynamic sliding window layers by default.

The previous default caused widespread, very slow first generate calls on models with hybrid caches, which should no longer be the case.

Sine positional embeddings for MaskFormer & LRU cache

Cache the computation of sine positional embeddings for MaskFormer; results in a 6% performance improvement.

- 🚨 Use lru_cache for sine pos embeddings MaskFormer by @yonigozlan in #40007
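The idea behind this change can be sketched with functools.lru_cache from the standard library. The example below is a simplified 1D sinusoidal embedding in pure Python, not MaskFormer's actual implementation; the function name `sine_position_embedding` and its parameters are assumptions made for illustration. Because the result depends only on hashable arguments, repeated calls with the same shape return the cached object instead of recomputing it.

```python
import math
from functools import lru_cache


@lru_cache(maxsize=32)
def sine_position_embedding(length: int, dim: int, temperature: float = 10000.0):
    """Simplified 1D sine/cosine position embedding, returned as nested tuples.

    Illustrative only; the real model computes a 2D embedding on tensors.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    rows = []
    for pos in range(length):
        row = []
        for i in range(0, dim, 2):
            # Each pair of channels uses a different frequency.
            angle = pos / temperature ** (i / dim)
            row.extend((math.sin(angle), math.cos(angle)))
        rows.append(tuple(row))
    return tuple(rows)


first = sine_position_embedding(64, 8)   # computed
second = sine_position_embedding(64, 8)  # served from the cache
print(first is second)
print(sine_position_embedding.cache_info())
```

Returning immutable tuples matters here: a cached result is shared between callers, so it should not be modified in place.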

Explicit cache initialization

Adds explicit cache initialization to prepare for the deprecation of the from_legacy_cache utility.

- 🚨 Always return Cache objects in modelings (to align with generate) by @manueldeprada in #39765

Default compilation with fullgraph=False

Having fullgraph set to True during compilation ended up being very restrictive, especially with the arrival of widely-used MoEs.

- 🚨🚨 Switch default compilation to fullgraph=False by @Cyrilvallez in #40137

Remove decoding strategies

The DoLa decoding strategy was moved to the following remote-code repository a few versions ago: https://huggingface.co/transformers-community/dola

The Contrastive Search decoding strategy was moved to the following remote-code repository a few versions ago: https://huggingface.co/transformers-community/contrastive-search

Both have now been removed from the library as a result.

- 🚨 Remove DoLa decoding strategy by @manueldeprada in #40082

- 🚨 Remove Contrastive Search decoding strategy by @manueldeprada in #40428

Fix sliding window in flash attention

Flash attention was using sliding window sizes that were off by one. This affected generations whose initial context was larger than the sliding window size.

Minimum Torch version is now 2.2

Torch 2.1 support has been unreliable for some time, so we've now made it official and bumped our minimum version to 2.2.

- byebye torch 2.1 by @Rocketknight1 in #40317

Bugfixes and improvements

- [CI] post-GptOss fixes for green CI by @gante in #39929

- Avoid utils/check_bad_commit.py failing due to rate limit (requesting api.github.com) by @ydshieh in #39918

- Fix CI: Tests failing on CPU due to torch.device('cpu').index being None by @manueldeprada in #39933

- circleci: pin torch 2.7.1 until torchcodec is updated by @ydshieh in #39951

- [docs] ko toc fix by @gante in #39927

- docs: fix typo in 'quantization-aware training' by @luckyvickyricky in #39904

- Fix grammatical error in MoE variable name: expert_hitted → expert_hit, hitted_experts → hit_experts by @Mihonarium in #39959

- fix typo by @Tialo in #39936

- [image processor] fix glm4v by @KeyKy in #39964

- remove triton_kernels dep with kernels instead by @SunMarc in #39926

- Fix fix_and_overwrite mode of utils/check_docstring.py by @manueldeprada in #39369

- [bugfix] fix flash_attention_2 unavailable error on Ascend NPU by @FightingZhen in #39844

- chore: update Deformable_Detr model card by @arpon-kapuria in #39902

- Modular fix: remove the model name in find_file_type by @yonigozlan in #39897

- Gemma3 fixes by @remi-or in #39960

- [superglue] Fixed the way batch mask was applied to the scores before match assignment computation by @sbucaille in #39968

- Support input_embeds in torch exportable decoders by @jackzhxng in #39836

- Various test fixes for AMD by @remi-or in #39978

- [Idefics] fix device mismatch by @zucchini-nlp in #39981

- Fix gemma3n feature extractor's incorrect squeeze by @Isotr0py in #39919

- [typing] Fix return typehint for decoder and inv_freq annotation by @qubvel in #39610

- Fix consistency by @Cyrilvallez in #39995

- Update expected output values after #39885 (part 1) by @ydshieh in #39990

- Fix int4 quantized model cannot work with cpu by @yuanwu2017 in #39724

- Fix missing video inputs for PerceptionLM. by @shuminghu in #39971

- fix: remove CHAT_TEMPLATE import in tests for deepseek-vl by @geetu040 in #40003

- Fix HGNetV2 Model Card and Image Classification Pipeline Usage Tips by @ducviet00 in #39965

- Fix default values of getenv by @cyyever in #39867

- FA2 can continue generation from cache by @zucchini-nlp in #39843

- unpin torch<2.8 on circleci by @ydshieh in #40012

- docs: fix duplication in 'en/optimizers.md' by @luckyvickyricky in #40014

- Raising error when quantizing a quantized model by @MekkCyber in #39998

- Update expected output values after #39885 (part 2) by @ydshieh in #40015

- pin torchcodec==0.5.0 for now with torch 2.7.1 on daily CI by @ydshieh in #40013

- Fix broken image inference for Fuyu model by @Isotr0py in #39915

- Higgs modules_to_not_convert standardization by @MekkCyber in #39989

- Fix an annoying flaky test by @zucchini-nlp in #40000

- Harmonize past_key_value to past_key_valueS everywhere by @Cyrilvallez in #39956

- Fix missing None default values for Gemma3n model in get_placeholder_mask by @Znerual in #39991

- [core] Refactor the Cache logic to make it simpler and more general by @Cyrilvallez in #39797

- Tie weights recursively on all submodels by @Cyrilvallez in #39996

- Bnb failling tests by @MekkCyber in #40026

- fix notification_service.py about time_spent by @ydshieh in #40037

- Revert "fix notification_service.py about time_spent" by @ydshieh in #40044

- Update HuBERT model card according to template by @reedrya in #39742

- unpin torchcodec==0.5.0 and use torch 2.8 on daily CI by @ydshieh in #40072

- fix: resolve triton version check compatibility on windows by @Tsumugii24 in #39986

- [qwen-vl] fix beam search with videos by @zucchini-nlp in #39726

- [gemma3] update conversion key mapping by @zucchini-nlp in #39778

- fix: move super().init after vision_config init in Mistral3Config by @starcatmeow in #40063

- Remove deprecated cache-related objects by @Cyrilvallez in #40035

- guard on model.eval when using torch.compile + FSDP2 by @winglian in #37413

- Fix repo consistency by @zucchini-nlp in #40077

- added Textnet fast image processor by @rahzaazhar in #39884

- Fix time_spent in notification_service.py. by @ydshieh in #40081

- chore: standardize DeBERTa model card by @Shoumik-Gandre in #37409

- [GPT Big Code] Fix attention scaling by @vasqu in #40041

- feat: extract rev in attn_implementation kernels via @ by @drbh in #40009

- Update notification service MI325 by @ivarflakstad in #40078

- Fix PerceptionLM image preprocessing for non-tiled image input. by @shuminghu in #40006

- Revert FA2 kwargs construction by @zucchini-nlp in #40029

- [fix] batch inference for llava_onevision by @cyr0930 in #40021

- [docs] Zero Shot Object Detection Task by @ariG23498 in #40096

- Update Glm4V processor and add tests by @zucchini-nlp in #39988

- Add glm4.5&&glm4.5V doc by @lambertwjh in #40095

- Causal loss for ForConditionalGeneration by @qgallouedec in #39973

- Audio encodings now match conv2d weight dtype in Gemma3nAudioSSCPConvBlock by @Malav-P in #39743

- New DynamicSlidingWindowLayer & associated Cache by @Cyrilvallez in #40039

- Enable SIM rules by @cyyever in #39806

- feat: add is_fast to ImageProcessor by @MilkClouds in #39603

- Re-apply make style by @Cyrilvallez in #40106

- Replace logger.warning with logger.warning_once in GradientCheckpointingLayer by @qgallouedec in #40091

- Fix regression in mllama vision encoder by @Isotr0py in #40083

- Switch the order of args in StaticCache (for BC and future logic) by @Cyrilvallez in #40100

- Fix Qwen3 MoE GGUF architecture mismatch by @ctcanbol in #39976

- Fix error on importing unavailable torch.distributed by @m-gallus in #40038

- [Flash Attention] Fix flash attention integration by @vasqu in #40002

- [trainer] ensure special tokens in model configs are aligned with tokenizer at train time by @gante in #38441

- Fix Causality Handling in Flash Attention to Support Bidirectional Attention by @lucaswychan in #39707

- [docs] Add reference to HF-maintained custom_generate collections by @gante in #39894

- Add model card for MobileViT by @Shivamjan in #40033

- remove sequence parallel in llama4 by @3outeille in #40084

- 🌐 [i18n-KO] Translated tiny_agents.md to Korean by @AhnJoonSung in #39913

- [bugfix] Fix tensor device in Idefics2, Idefics3, and SmolVLM by @qgallouedec in #39975

- changed xLSTMRMSNorm to RMSNorm by @nikitazuevblago in #40113

- Fix QuantoQuantizedCache import issues by @manueldeprada in #40109

- [serve] allow array content inputs for LLMs by @gante in #39829

- decoding_method argument in generate by @manueldeprada in #40085

- Collated reports by @ivarflakstad in #40080

- DOCS: Add missing space in SECURITY.md by @shivaheidari in #40087

- [trainer] handle case where EOS token is None in generation_config by @gante in #40127

- Fix hidden torchvision>=0.15 dependency issue by @yonigozlan in #39928

- 🌐 [i18n-KO] Translated main_classes/processors.md to Korean by @TaskerJang in #39519

- 🌐 [i18n-KO] Translated jamba.md to Korean by @skwh54 in #39890

- 🌐 [i18n-KO] Translated main_classes/optimizer_schedules.md to Korean by @luckyvickyricky in #39713

- 🌐 [i18n-KO] Translated gpt2.md to Korean by @taemincode in #39808

- 🌐 [i18n-KO] Translated optimizers.md to Korean by @chelsseeey in #40011

- 🌐 [i18n-KO] Translated grounding-dino.md to Korean by @TaskerJang in #39861

- 🌐 [i18n-KO] Translated pipelines.md to Korean by @xhaktm00 in #39577

- gpt oss is important by @ArthurZucker in #40139

- Fix Janus by @Cyrilvallez in #40140

- [docs] Fix ko toctree by @stevhliu in #40138

- Remove an old badly designed test by @Cyrilvallez in #40142

- updated visualBERT modelcard by @Anil-Red in #40057

- 🌐 [i18n-KO] Translated gemma3.md to Korean by @seopp in #39865

- Fix quantized cache with only cache_implementation in generate by @Cyrilvallez in #40144

- Add pytest marker: torch_compile_test and torch_export_test by @ydshieh in #39950

- Update Dockerfiles to install packages inside a virtual environment by @Sai-Suraj-27 in #39098

- Create self-scheduled-amd-mi355-caller.yml by @glegendre01 in #40134

- [Cohere2Vision] remove unused arg by @zucchini-nlp in #40103

- [efficientloftr] fix bugs and follow original cross attn implementation strictly by @sbucaille in #40141

- Fix CI: Use correct import in SAM for torchvision InterpolationMode by @manueldeprada in #40160

- [Continous Batching] set head_dim when config.head_dim is None by @kashif in #40159

- Replace self.tokenizer by self.processing_class by @qgallouedec in #40119

- [FA2] Fix it finally - revert fa kwargs preparation by @Cyrilvallez in #40161

- [bugfix] fix flash-attention2 unavailable error for Ascend NPU by @FightingZhen in #40151

- build: Add fast image processor tvp by @adutchengineer in #39529

- Add GptOssForSequenceClassification for GPT-OSS models by @zyfedward in #40043

- Standardize BARTpho model card: badges, new examples, fixed broken im… by @eshwanthkartitr in #40051

- Add dates to the model docs by @MHRDYN7 in #39320

- Pin torch to 2.7.1 on CircleCI for now by @ydshieh in #40174

- Update dynamic attnt setter for multimodals by @zucchini-nlp in #39908

- [MINOR:TYPO] Update base.py by @cakiki in #40169

- make model doc device agnostic by @yao-matrix in #40143

- fix to avoid modifying a view in place by @3outeille in #40162

- Fix fsdp for generic-task models by @Cyrilvallez in #40191

- Add repr to EncoderDecoderCache by @Cyrilvallez in #40195

- Fix typos by @cyyever in #40175

- Remove _prepare_flash_attention_from_position_ids by @cyyever in #40069

- Avoid CUDA stream sync by @cyyever in #40060

- Fix various Pylint warnings by @cyyever in #40107

- Update: add type hints to check_tokenizers.py by @ajeet214 in #40094

- Benchmarking improvements by @ahadnagy in #39768

- docs: Update LayoutLM model card according to new standardized format by @Jin-HoMLee in #40129

- Revert "Pin torch to 2.7.1 on CircleCI for now" + Final fix for too long with no output by @ydshieh in #40201

- Use correct model_input_names for PixtralImageProcessor by @rohitrango in #40226

- fix error vocab_size at Qwen2_5_VLForConditionalGeneration loss_function by @killight98 in #40130

- [SAM 2] Change checkpoints in docs and tests by @yonigozlan in #40213

- Fix more typos by @cyyever in #40212

- Fix ESM token_dropout crash when using inputs_embeds instead of input_ids by @notkisk in #40181

- AMD scheduled CI ref env file by @ivarflakstad in #40243

- Fix more pylint warnings by @cyyever in #40204

- remove transpose_for_scores call in ESM-2 by @pstjohn in #40210

- Add chat_template (jinja2) as an extra dependency by @tboerstad in #40128

- [typing] fix type annotation error in DepthPro model image processor by @MengAiDev in #40238

- [serve] guard imports by @gante in #39825

- [CI] Fix repo consistency by @vasqu in #40249

- Fixes for EncoderDecoderCache by @remi-or in #40008

- fix: Catch correct ConnectionError for additional_chat_templates by @akug in #39874

- Model card for NLLB by @sahil-kabir in #40074

- Correct typo and update notes in docs Readme by @PavloFesenko in #40234

- Fix benchmark workflow by @ahadnagy in #40254

- docs: Update OLMo model card by @rafakatri in #40233

- Skip broken tests by @zucchini-nlp in #40157

- Remove MI300 CI by @ivarflakstad in #40270

- set inputs_embeds to None while generate to avoid audio encoder forward in generation process by @BakerBunker in #40248

- [detection] fix attention mask for RT-DETR-based models by @materight in #40269

- Fix slow static cache export tests by @jackzhxng in #40261

- Fix setting attention for multimodal models by @zucchini-nlp in #39984

- [detection] fix correct k_proj weight and bias slicing in D-FINE by @notkisk in #40257

- Skipping pytree registration in case fsdp is enabled by @romitjain in #40075

- Update image_processing_perception_lm_fast.py to allow for proper override of vision_input_type by @tyleryzhu in #40252

- fix which routing method by @ArthurZucker in #40283

- Fix chat CLI GPU loading and request_id validation issues by @robin-ede in #40230

- docs(layoutlm): add missing id=usage to <hfoptions> tag in LayoutLM model card by @Jin-HoMLee in #40273

- Standardize RAG model card by @aayush226 in #40222

- docs: Update TrOCR model card to new format by @AceHunterr in #40240

- Update model card for gpt neox japanese by @ahnjj in #39862

- SmolVLM and InternVL: Ensure pixel values are converted to the correct dtype for fp16/bf16 by @qgallouedec in #40121

- Standardize BertGeneration model card by @nemitha2005 in #40250

- Adjust ROCm test output expectations by @ahadnagy in #40279

- SmolVLM test fixes by @ahadnagy in #40275

- make model docs device agnostic (2) by @yao-matrix in #40256

- [3/3] make docs device agnostic, all en docs for existing models done by @yao-matrix in #40298

- Allow to be able to run torch.compile tests with fullgraph=True by @ydshieh in #40164

- [FA] Fix dtype in varlen with position ids by @vasqu in #40295

- [docs] delete more TF/Flax docs by @gante in #40289

- Clean up X-Codec. by @ebezzam in #40271

- Remove OTel SDK dependencies by @anuraaga in #40305

- Fix GOT-OCR2 and Cohere2Vision image processor patches caculation by @Isotr0py in #40312

- [fix] Pass adamw optimizer parameters to StableAdamW by @emapco in #40184

- chore: fix typo in find_executable_batch_size to match new 0.9 ratio by @MilkClouds in #40206

- 🚨 [Flash Attention] Fix sliding window size by @vasqu in #40163

- Remove unnecessary contiguous calls for modern torch by @Rocketknight1 in #40315

- Qwen2.5-Omni test fixes by @ahadnagy in #40307

- Add back _tp_plan attribute by @rishub-tamirisa in #39944

- byebye torch 2.1 by @Rocketknight1 in #40317

- No more natten by @ydshieh in #40287

- [GPT OSS] Refactor the tests as it was not properly checking the outputs by @ArthurZucker in #40288

- Update CI with nightly torch workflow file by @ydshieh in #40306

- Fix: Apply get_placeholder_mask in Ovis2 by @thisisiron in #40280

- Update notification service amd_daily_ci_workflows definition by @ivarflakstad in #40314

- One cache class to rule them all by @Cyrilvallez in #40276

- Fix chunked attention mask with left-padding by @Cyrilvallez in #40324

- [docs] remove flax references from /en/model_doc by @gante in #40311

- Fix qwen-omni processor text only mode by @yuekaizhang in #40336

- Change Qwen2RMSNorm to RMSNorm from PyTorch by @cyyever in #40066

- Add DeepseekV3ForSequenceClassification for Deepseek V3 models by @abdokaseb in #40200

- Fix deprecation warning version by @Cyrilvallez in #40343

- Add missing arguments to class constructors by @cyyever in #40068

- [docs] remove TF references from /en/model_doc by @gante in #40344

- Fix: Only call Trainer.align_special_tokens if model has "config" attribute by @tomaarsen in #40322

- add type hints by @wirthual in #40319

- Fix an infinite loop bug in recursive search of relative imports by @eladsegal in #40326

- Fix links in Glm4vMoe configuration classes to point to the correct H… by @vvvdwbvvv in #40310

- T5 test and target device fixes by @ahadnagy in #40313

- Update test_spm_converter_bytefallback_warning by @ydshieh in #40284

- (small) fix conditional for input_ids and input_embeds in marian by @cyntqliu in #40045

- Fix attention vizualizer by @molbap in #40285

- [ModernBert] Prevent the attention mask from being None in ModernBertForSequenceClassification by @ashmikuz in #35991

- Clean up XCodec and other codecs by @ebezzam in #40348

- [serve] add cors warnings by @gante in #40112

- [detection] use consistent dtype for Conditional and DAB DETR positional embeddings by @agkphysics in #40300

- Remove more PyTorch 2.2 compatible code by @cyyever in #40337

- [FA] Fix some model tests by @vasqu in #40350

- Qwen2.5-VL test fixes for ROCm by @ahadnagy in #40308

- [generate] handle support for cache classes when num enc layers != num dec layers by @gante in #40277

- [4/N]more docs to device agnostic by @yao-matrix in #40355

- DOCS: Clarification on the use of label_names as an argument to TrainingArguments by @huzaifa-jawad367 in #40353

- Fix idefics3 vision embeddings indices dtype by @Isotr0py in #40360

- wav2vec2 fixes by @remi-or in #40341

- Change multimodal data links to HF hub by @zucchini-nlp in #40309

- [pipelines] add support to skip_special_tokens in the main text generation pipelines by @gante in #40356

- ⚠️ ⚠️ Use dtype instead of torch_dtype everywhere! by @Cyrilvallez in #39782

- [processor] move commonalities to mixin by @zucchini-nlp in #40339

- [configuration] allow to overwrite kwargs from subconfigs by @zucchini-nlp in #40241

- fix(example): align parameter names with the latest function definition for gdino by @developer0hye in #40369

- Add GptOssForTokenClassification for GPT-OSS models by @abdokaseb in #40190

- Bug Fix: Dynamically set return_lse flag in FlexAttention by @amd-lalithnc in #40352

- Chat Template Doc Fixes by @Rocketknight1 in #40173

- Rework the Cache documentation by @Cyrilvallez in #40373

- Update README_zh-hans.md by @TardC in #40380

- HF papers in doc by @qgallouedec in #40381

- Run FA2 tests in CI by @ydshieh in #40397

- Reactivate a lot of tests skipped for no reason anymore by @Cyrilvallez in #40378

- 🧹 🧹 🧹 Get set decoder cleanup by @molbap in #39509

- fix to accept cumulative_seqlens from TransformersKwargs in FA by @Kurt232 in #40194

- [docs] flax/jax purge by @gante in #40372

- Fix typo: 'casual' -> 'causal' in code and documentation by @akintunero in #40371

- Fix CI (hunyuan moe does not support fullgraph) by @Cyrilvallez in #40423

- Fix typo: 'seperator' to 'separator' in variable names by @Prawal-Sharma in #40389

- Fix UnboundLocalError in WER metric computation by @prxshetty in #40402

- Gpt oss optim by @jiqing-feng in #40304

- Fix processing tests by @zucchini-nlp in #40379

- Fix label smoothing incompatibility with multi-label classification by @avchauzov in #40296

- Fix modular for modernbert-decoder by @Cyrilvallez in #40431

- Update collated reports working directory and --path by @ivarflakstad in #40433

- Add tokenizer_kwargs argument to the text generation pipeline by @Joshua-Chin in #40364

- [docs] remove last references to transformers TF classes/methods by @gante in #40429

- Remove working-dir from collated reports job by @ivarflakstad in #40435

- 🌐 [i18n-KO] Translated models.md to Korean by @Judy-Choi in #39518

- Gemma3 text fixes: Add expectations for MI325 by @ahadnagy in #40384

- Fix collated reports model directory traversal by @ivarflakstad in #40437

- Fix #40292 by @id01 in #40439

- Fix collated reports uploading by @ivarflakstad in #40440

- InternVL MI325 test expectations by @ahadnagy in #40387

- Fix collated reports model name entry by @ivarflakstad in #40441

- Fix non FA2 tests after FA2 installed in CI docker image by @ydshieh in #40430

- Refactor ViT-like models by @qubvel in #39816

- [Trainer] accelerate contextparallel support in trainer by @kashif in #40205

- fix qwen25-vl grad acc by @iMountTai in #40333

- [video processors] decode only sampled videos -> less RAM and faster processing by @zucchini-nlp in #39600

- rename get_cuda_warm_up_factor to get_accelerator_warm_up_factor by @yao-matrix in #40363

- Make cache_config not mandatory by @remi-or in #40316

- Continuous batching refactor by @remi-or in #40426

- flash_paged: s_aux may not exist by @pcuenca in #40434

- Fix extra template loading by @Rocketknight1 in #40455

- deci gguf support by @ved1beta in #38669

- [fast_image_processor] fix image normalization for resize by @audioXD in #40436

- [RoPE] explicit factor > implicit factor in YaRN by @gante in #40320

- [pipeline] Add Keypoint Matching pipeline by @sbucaille in #39970

- Update SegFormer model card by @GSNCodes in #40417

- Not to shock AMD team by the cancelled workflow run notification ❤️ 💖 by @ydshieh in #40467

- Fix nightly torch CI by @ydshieh in #40469

- CI when PR merged to main by @ydshieh in #40451

- Validate GptOssConfig rope config after it's fully initialized by @zifeitong in #40474

- [modular] Use multi-processing + fix model import issue by @Cyrilvallez in #40481

- [modular] Remove ambiguity in all calls to parent class methods + fix dependency graph by @Cyrilvallez in #40456

- [ESM] support attention API by @zucchini-nlp in #40370

- [EfficientLoFTR] dynamic image size support by @sbucaille in #40329

- Fix qwen2_moe tests by @ydshieh in #40494

- [Whisper] Add rocm expected results to certain tests by @ivarflakstad in #40482

- Collated reports: no need to upload artifact by @ivarflakstad in #40502

- Fix the CI workflow of merge to main by @ydshieh in #40503

- docs(pixtral): Update Pixtral model card to new format by @BryanBradfo in #40442

- [modular] Classes can now be defined and referenced in arbitrary order (without bringing unwanted dependencies) by @Cyrilvallez in #40507

- Include machine type in collated reports filename by @ivarflakstad in #40514

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @remi-or

- @sbucaille

- [superglue] Fixed the way batch mask was applied to the scores before match assignment computation (#39968)

- [efficientloftr] fix bugs and follow original cross attn implementation strictly (#40141)

- [pipeline] Add Keypoint Matching pipeline (#39970)

- [EfficientLoFTR] dynamic image size support (#40329)

- @ducviet00

- @cyyever

- Fix default values of getenv (#39867)

- Enable SIM rules (#39806)

- Fix typos (#40175)

- Remove _prepare_flash_attention_from_position_ids (#40069)

- Avoid CUDA stream sync (#40060)

- Fix various Pylint warnings (#40107)

- Fix more typos (#40212)

- Fix more pylint warnings (#40204)

- Change Qwen2RMSNorm to RMSNorm from PyTorch (#40066)

- Add missing arguments to class constructors (#40068)

- Remove more PyTorch 2.2 compatible code (#40337)

- @zRzRzRzRzRzRzR

- GLM-4.5V Model Support (#39805)

- @SangbumChoi

- Add Segment Anything 2 (SAM2) (#32317)

- @adutchengineer

- build: Add fast image processor tvp (#39529)

- @MHRDYN7

- Add dates to the model docs (#39320)

- @yao-matrix

- @Manalelaidouni

- Add X-Codec model (#38248)

- @thisisiron

- @tic-top

- Add Kosmos-2.5 (#31711)

- @yjc9696

- HunYuan opensource (#39606)

- @Fazziekey

- Adding ByteDance Seed Seed-OSS (#40272)